
Hello. I wrote this post to understand and share what I learned while taking the CloudNet@ K8S Study. It is based mainly on the EKS docs and the EKS workshop.

AWS EKS

- Amazon Elastic Kubernetes Service (EKS) is a managed service for running Kubernetes without having to install, operate, or maintain your own Kubernetes control plane or nodes.

- Integrates with many AWS services: Amazon ECR for container images, ELB for load distribution, IAM for authentication, and Amazon VPC for isolation.

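- Supported versions: usually four minor versions are supported (currently 1.22 to 1.26). A new version is released about every 3 months on average, and each version is supported for roughly 12 months.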

- Version format, e.g. v1.24.2 → Major.Minor.Patch: Major (breaking changes), Minor (new features), Patch (bug fixes and security fixes)
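To make the Major.Minor.Patch split concrete, here is a small POSIX shell sketch. The function names `parse_k8s_version` and `upgrade_kind` are my own, not part of any tool. It strips the leading `v` and any build suffix such as `-eks-ec5523e`, then reports which component changed between two versions.

```shell
#!/bin/sh
# parse_k8s_version: print "MAJOR MINOR PATCH" for strings such as
# "v1.24.2", "1.25" or "v1.24.12-eks-ec5523e" (hypothetical helper).
parse_k8s_version() {
  v=${1#v}        # drop the leading "v"
  v=${v%%-*}      # drop a build suffix like "-eks-ec5523e"
  major=${v%%.*}
  rest=${v#*.}
  minor=${rest%%.*}
  case $rest in
    *.*) patch=${rest#*.} ;;
    *) patch=0 ;;   # "1.25" has no patch component
  esac
  echo "$major $minor $patch"
}

# upgrade_kind: print major, minor, patch or none depending on the first
# component that differs between version $1 and version $2.
upgrade_kind() {
  read -r a_major a_minor a_patch <<EOF
$(parse_k8s_version "$1")
EOF
  read -r b_major b_minor b_patch <<EOF
$(parse_k8s_version "$2")
EOF
  if [ "$a_major" != "$b_major" ]; then
    echo major
  elif [ "$a_minor" != "$b_minor" ]; then
    echo minor
  elif [ "$a_patch" != "$b_patch" ]; then
    echo patch
  else
    echo none
  fi
}

# Example: the API server version reported later in this post
parse_k8s_version v1.24.12-eks-ec5523e   # prints: 1 24 12
upgrade_kind v1.24.2 v1.24.12            # prints: patch
```

Keep in mind that Kubernetes minor releases can still remove deprecated beta APIs (for example, PodSecurityPolicy was removed in 1.25), so a minor upgrade on EKS deserves the same care as a breaking change.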

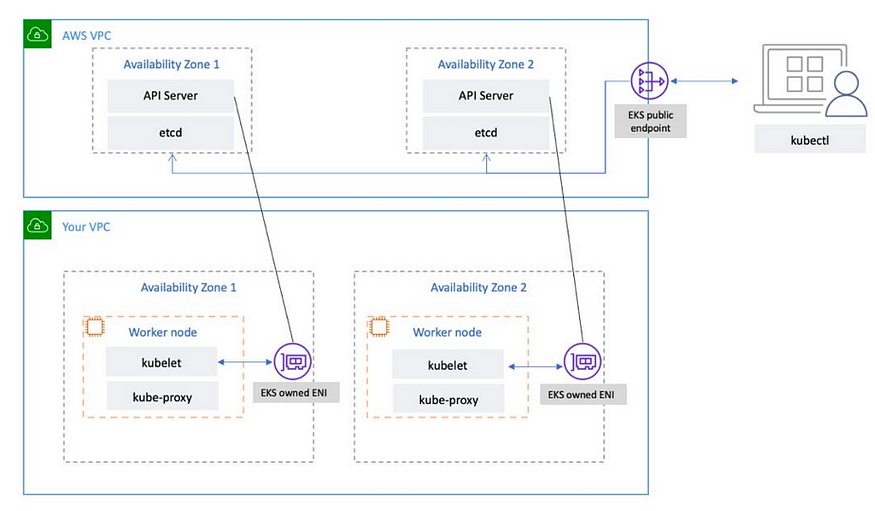

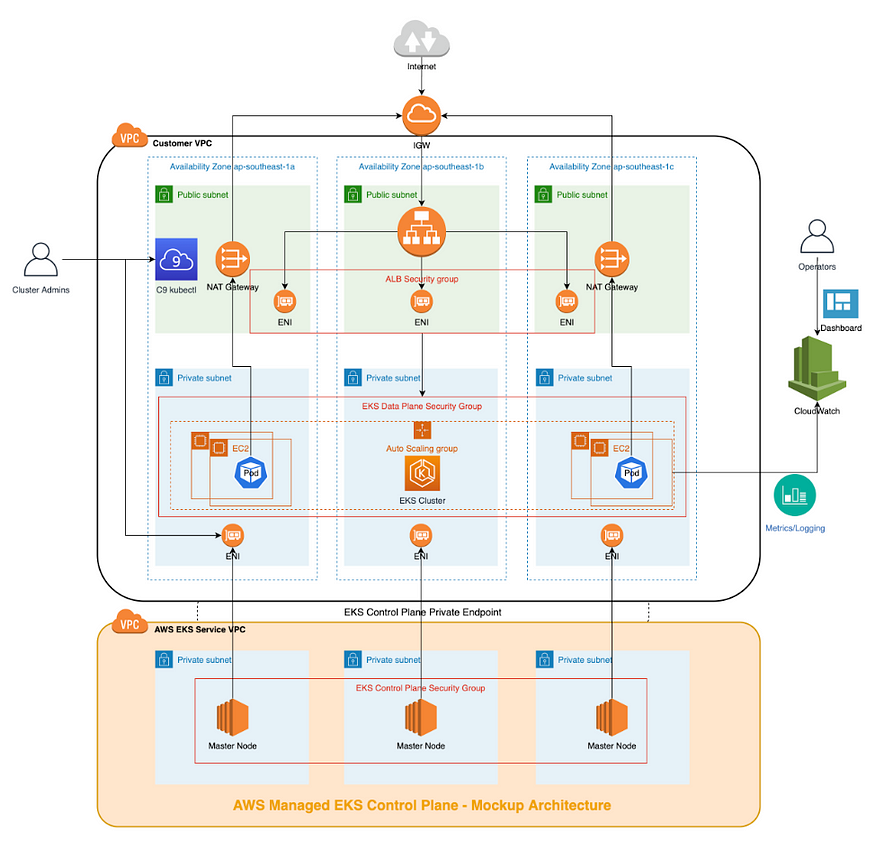

EKS Architecture

EKS Control Plane:

- The Kubernetes control plane managed by EKS runs inside an EKS-managed VPC.

- The EKS control plane consists of Kubernetes API server nodes and an etcd cluster. The API server nodes run components such as the API server, the scheduler, and kube-controller-manager, and they run in an Auto Scaling group.

- EKS runs at least two API server nodes in separate Availability Zones (AZs) within an AWS Region. Likewise, for durability, the etcd server nodes run in an Auto Scaling group that spans three AZs.

- EKS runs a NAT gateway in each AZ, and the API servers and etcd servers run in private subnets.

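- This architecture ensures that an event in a single AZ does not affect the availability of the EKS cluster. The managed endpoint uses an NLB to load-balance traffic across the Kubernetes API servers.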

- EKS also provisions two ENIs in different AZs to facilitate communication with the worker nodes.

EKS Data Plane: Kubernetes nodes are the machines that run containerized applications. An Amazon EKS cluster can schedule Pods on any combination of self-managed nodes, Amazon EKS managed node groups, and AWS Fargate.

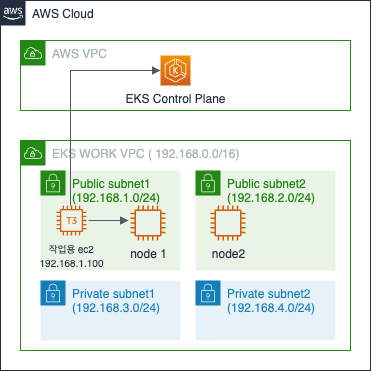

EKS Deployment

- Deploy the environment below. It takes about 20 minutes.

# Download the YAML file (the deploy command below reads it from ~/Downloads)

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/myeks-1week.yaml

# Deploy

aws cloudformation deploy --template-file ~/Downloads/myeks-1week.yaml --stack-name myeks --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr=<My Home Public IP Address>/32 --region <Region>
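The `SgIngressSshCidr` parameter expects a CIDR, so the home IP needs a `/32` suffix. A minimal sketch, assuming you look up your public IP with `https://checkip.amazonaws.com` (an AWS endpoint that returns the caller's IP as plain text). `to_cidr32` is a hypothetical helper name; it also rejects obviously malformed input before it reaches CloudFormation.

```shell
#!/bin/sh
# to_cidr32: turn a bare IPv4 address into "<ip>/32" (hypothetical helper).
# Rejects anything that is not four dot-separated numbers in 0-255.
to_cidr32() {
  case $1 in
    ''|*[!0-9.]*|.*|*.|*..*)
      echo "invalid IPv4: $1" >&2; return 1 ;;
  esac
  old_ifs=$IFS
  IFS=.
  set -- $1          # split on dots into $1..$4
  IFS=$old_ifs
  if [ $# -ne 4 ]; then
    echo "invalid IPv4: expected 4 octets" >&2; return 1
  fi
  for octet in "$@"; do
    if [ "$octet" -gt 255 ]; then
      echo "invalid IPv4: octet $octet > 255" >&2; return 1
    fi
  done
  echo "$1.$2.$3.$4/32"
}

# Usage (needs network access):
# MYIP=$(curl -s https://checkip.amazonaws.com)
# aws cloudformation deploy --template-file ~/Downloads/myeks-1week.yaml --stack-name myeks \
#   --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr="$(to_cidr32 "$MYIP")" --region <Region>
```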

# After the CloudFormation stack finishes deploying, print the EC2 IP

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[*].OutputValue' --output text

Example: 3.35.137.31

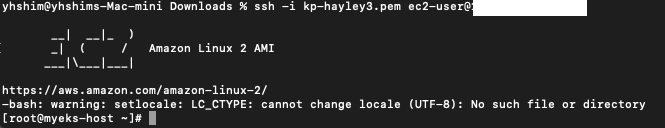

# SSH into the EC2 instance

ssh -i <My SSH Keyfile> ec2-user@3.35.137.31

Checking Basic Information

# (Optional) Follow the cloud-init log

$ sudo tail -f /var/log/cloud-init-output.log

|o.. + . |

| . o . E |

|. .. + . |

|. .o S |

| . ..+=o |

| + oBX*. |

| o o++=#= |

| . .BO=*. |

+----[SHA256]-----+

Cloud-init v. 19.3-46.amzn2 finished at Sat, 29 Apr 2023 05:05:33 +0000. Datasource DataSourceEc2. Up 61.77 seconds

# Check the current user

$ sudo su -

$ whoami

# Check the preinstalled tools, SSH key, etc.

$ kubectl version --client=true -o yaml | yh

clientVersion:
  buildDate: "2023-03-09T20:05:58Z"
  compiler: gc
  gitCommit: bde397a9c9f0d2ca03219bad43a4c2bef90f176f
  gitTreeState: clean
  gitVersion: v1.25.7-eks-a59e1f0
  goVersion: go1.19.6
  major: "1"
  minor: 25+
  platform: linux/amd64
kustomizeVersion: v4.5.7

$ eksctl version

0.139.0

$ aws --version

aws-cli/2.11.16 Python/3.11.3 Linux/4.14.311-233.529.amzn2.x86_64 exe/x86_64.amzn.2 prompt/off

$ ls /root/.ssh/id_rsa*

/root/.ssh/id_rsa /root/.ssh/id_rsa.pub

# Check the Docker engine installation

$ docker info

Setting IAM User Credentials, Checking the VPC, and Setting Variables

# Check without any credentials configured

$ aws ec2 describe-instances

# Configure IAM User credentials: for convenience in this lab, enter the credentials of an IAM User with administrator permissions

$ aws configure

AWS Access Key ID [None]: AKIA5...

AWS Secret Access Key [None]: CVNa2...

Default region name [None]: ap-northeast-2

Default output format [None]: json

# Verify that the credentials are applied: check the node IPs

$ aws ec2 describe-instances

# Check the VPC where EKS will be deployed

$ aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq

{
  "Vpcs": [
    {
      "CidrBlock": "192.168.0.0/16",
      "DhcpOptionsId": "dopt-de7a18b5",
      "State": "available",
      "VpcId": "vpc-0f993d1a320e9ee56",
      "OwnerId": "9XXXXXXXXXX",
      "InstanceTenancy": "default",
      "CidrBlockAssociationSet": [
        {
          "AssociationId": "vpc-cidr-assoc-00fc9321a92199ed2",
          "CidrBlock": "192.168.0.0/16",
          "CidrBlockState": {
            "State": "associated"
          }
        }
      ],
      "IsDefault": false,
      "Tags": [
        {
          "Key": "aws:cloudformation:logical-id",
          "Value": "EksVPC"
        },
        {
          "Key": "Name",
          "Value": "myeks-VPC"
        },
        {
          "Key": "aws:cloudformation:stack-id",
          "Value": "arn:aws:cloudformation:ap-northeast-2:9XXXXXXXXX:stack/mykops/3d133cc0-e64b-11ed-b30d-06201494b854"
        },
        {
          "Key": "aws:cloudformation:stack-name",
          "Value": "mykops"
        }
      ]
    }
  ]
}

$ export VPCID=$(aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq -r .Vpcs[].VpcId)

$ echo "export VPCID=$VPCID" >> /etc/profile

$ echo $VPCID
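Each value here is set twice: `export` for the current shell and `echo ... >> /etc/profile` so that later login shells (for example after `sudo su -`) pick it up. A hypothetical `persist_var` helper wraps both steps. The profile path can be overridden through `PROFILE_FILE` (my own variable name) so the helper can be tried without touching `/etc/profile`. It must be called in the current shell, not in a subshell, for the `export` to stick.

```shell
#!/bin/sh
# persist_var NAME VALUE: export NAME=VALUE in the current shell and append
# the same export line to a profile file (hypothetical helper).
# PROFILE_FILE defaults to /etc/profile, the file used in this post.
persist_var() {
  name=$1
  value=$2
  profile=${PROFILE_FILE:-/etc/profile}
  export "$name=$value"
  printf 'export %s=%s\n' "$name" "$value" >> "$profile"
}

# Usage, mirroring the VPCID step above (run as root for /etc/profile):
# persist_var VPCID "$(aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq -r '.Vpcs[].VpcId')"
# echo $VPCID
```

Running it twice appends a duplicate line. That is harmless because the profile is read top to bottom and the last export wins, but you may want to clean up `/etc/profile` after the lab.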

# Check the subnets in the VPC where EKS will be deployed

$ aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --output json | jq

$ aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --output yaml | yh

## Check the public subnet IDs

$ aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" | jq

$ aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" --query "Subnets[0].[SubnetId]" --output text

$ export PubSubnet1=$(aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" --query "Subnets[0].[SubnetId]" --output text)

$ export PubSubnet2=$(aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet2" --query "Subnets[0].[SubnetId]" --output text)

$ echo "export PubSubnet1=$PubSubnet1" >> /etc/profile

$ echo "export PubSubnet2=$PubSubnet2" >> /etc/profile

$ echo $PubSubnet1

$ echo $PubSubnet2

Practicing with eksctl

# eksctl help

$ eksctl

$ eksctl create

$ eksctl create cluster --help

$ eksctl create nodegroup --help

# Check the currently supported versions

$ eksctl create cluster -h | grep version

--version string Kubernetes version (valid options: 1.22, 1.23, 1.24, 1.25, 1.26) (default "1.25")
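The supported-version list can also be extracted from that help text programmatically, for example to validate a value before passing it to `--version`. A sed-based sketch; it assumes the help line keeps the `Kubernetes version (valid options: ...) (default "...")` wording shown above, which may change between eksctl releases. The function names are hypothetical.

```shell
#!/bin/sh
# supported_versions: read `eksctl create cluster -h` output on stdin and print
# each valid --version value on its own line.
supported_versions() {
  sed -n '/Kubernetes version/s/.*valid options: \([^)]*\)).*/\1/p' \
    | tr -d ' ' | tr ',' '\n'
}

# default_version: print the default --version value from the same help line.
default_version() {
  sed -n '/Kubernetes version/s/.*(default "\([^"]*\)").*/\1/p'
}

# is_supported VERSION: succeed if VERSION is one of the valid options on stdin.
is_supported() {
  supported_versions | grep -qxF "$1"
}

# Usage:
# eksctl create cluster -h | supported_versions
# eksctl create cluster -h | is_supported 1.24 && echo "1.24 can be passed to --version"
```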

# Create an EKS cluster without a node group (dry run)

$ eksctl create cluster --name myeks --region=ap-northeast-2 --without-nodegroup --dry-run | yh

apiVersion: eksctl.io/v1alpha5
availabilityZones:
- ap-northeast-2c
- ap-northeast-2b
- ap-northeast-2d
cloudWatch:
  clusterLogging: {}
iam:
  vpcResourceControllerPolicy: true
  withOIDC: false
kind: ClusterConfig
kubernetesNetworkConfig:
  ipFamily: IPv4
metadata:
  name: myeks
  region: ap-northeast-2
  version: "1.25"
privateCluster:
  enabled: false
  skipEndpointCreation: false
vpc:
  autoAllocateIPv6: false
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: false
    publicAccess: true
  manageSharedNodeSecurityGroupRules: true
  nat:
    gateway: Single

# Create an EKS cluster + managed node group (name, instance type, EBS volume size, SSH access), using specific AZs (2a, 2c) and a custom VPC CIDR (dry run)

$ eksctl create cluster --name myeks --region=ap-northeast-2 --nodegroup-name=mynodegroup --node-type=t3.medium --node-volume-size=30 \

--zones=ap-northeast-2a,ap-northeast-2c --vpc-cidr=172.20.0.0/16 --ssh-access --dry-run | yh

apiVersion: eksctl.io/v1alpha5
availabilityZones:
- ap-northeast-2a
- ap-northeast-2c
cloudWatch:
  clusterLogging: {}
iam:
  vpcResourceControllerPolicy: true
  withOIDC: false
kind: ClusterConfig
kubernetesNetworkConfig:
  ipFamily: IPv4
managedNodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 2
  disableIMDSv1: false
  disablePodIMDS: false
  iam:
    withAddonPolicies:
      albIngress: false
      appMesh: false
      appMeshPreview: false
      autoScaler: false
      awsLoadBalancerController: false
      certManager: false
      cloudWatch: false
      ebs: false
      efs: false
      externalDNS: false
      fsx: false
      imageBuilder: false
      xRay: false
  instanceSelector: {}
  instanceType: t3.medium
  labels:
    alpha.eksctl.io/cluster-name: myeks
    alpha.eksctl.io/nodegroup-name: mynodegroup
  maxSize: 2
  minSize: 2
  name: mynodegroup
  privateNetworking: false
  releaseVersion: ""
  securityGroups:
    withLocal: null
    withShared: null
  ssh:
    allow: true
    publicKeyPath: ~/.ssh/id_rsa.pub
  tags:
    alpha.eksctl.io/nodegroup-name: mynodegroup
    alpha.eksctl.io/nodegroup-type: managed
  volumeIOPS: 3000
  volumeSize: 30
  volumeThroughput: 125
  volumeType: gp3
metadata:
  name: myeks
  region: ap-northeast-2
  version: "1.25"
privateCluster:
  enabled: false
  skipEndpointCreation: false
vpc:
  autoAllocateIPv6: false
  cidr: 172.20.0.0/16
  clusterEndpoints:
    privateAccess: false
    publicAccess: true
  manageSharedNodeSecurityGroupRules: true
  nat:
    gateway: Single
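The same cluster can be described declaratively and passed with `eksctl create cluster -f`. A minimal sketch, assuming the field names shown in the dry-run output above; fields left out should fall back to the defaults that dry-run printed. `write_cluster_config` and the file name `myeks.yaml` are my own choices, and the AZ suffixes `a` and `c` assume a Region that has those zones (ap-northeast-2 does).

```shell
#!/bin/sh
# write_cluster_config [NAME] [REGION]: print a minimal ClusterConfig that
# mirrors the flags of the second dry-run above (hypothetical helper).
write_cluster_config() {
  name=${1:-myeks}
  region=${2:-ap-northeast-2}
  cat <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: $name
  region: $region
  version: "1.25"
availabilityZones:
- ${region}a
- ${region}c
vpc:
  cidr: 172.20.0.0/16
managedNodeGroups:
- name: mynodegroup
  instanceType: t3.medium
  desiredCapacity: 2
  minSize: 2
  maxSize: 2
  volumeSize: 30
  ssh:
    allow: true
    publicKeyPath: ~/.ssh/id_rsa.pub
EOF
}

# Usage:
# write_cluster_config > myeks.yaml
# eksctl create cluster -f myeks.yaml --dry-run | yh
```

A config file is easier to review and keep in version control than a long flag list, and the dry-run output lets you diff it against the flag-based version.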

# Check the variables

$ echo $AWS_DEFAULT_REGION

ap-northeast-2

$ echo $CLUSTER_NAME

myeks

$ echo $VPCID

vpc-0f993d1a320e9ee56

$ echo $PubSubnet1,$PubSubnet2

subnet-09fba7442865775ad,subnet-094d12dfb5e3c74ff

# Optional [Terminal 1] Monitor EC2 instance creation

#while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text ; echo "------------------------------" ; sleep 1; done

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

----------------------------------------------------------------

| DescribeInstances |

+--------------+-----------------+------------------+----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+--------------+-----------------+------------------+----------+

| myeks-host | 192.168.1.100 | 13.124.130.243 | running |

+--------------+-----------------+------------------+----------+

# Review the configuration before deploying the EKS cluster and managed node group (dry run)

$ eksctl create cluster --name $CLUSTER_NAME --region=$AWS_DEFAULT_REGION --nodegroup-name=$CLUSTER_NAME-nodegroup --node-type=t3.medium \

--node-volume-size=30 --vpc-public-subnets "$PubSubnet1,$PubSubnet2" --version 1.24 --ssh-access --external-dns-access --dry-run | yh

# Deploy the EKS cluster and managed node group: about 16 minutes in total (13 + 3 minutes)

$ eksctl create cluster --name $CLUSTER_NAME --region=$AWS_DEFAULT_REGION --nodegroup-name=$CLUSTER_NAME-nodegroup --node-type=t3.medium \

--node-volume-size=30 --vpc-public-subnets "$PubSubnet1,$PubSubnet2" --version 1.24 --ssh-access --external-dns-access --verbose 4

Amazon EKS Deployment Lab

# Check the variables

echo $AWS_DEFAULT_REGION

echo $CLUSTER_NAME

echo $VPCID

echo $PubSubnet1,$PubSubnet2

# Optional [Terminal 1] Monitor EC2 instance creation

#while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text ; echo "------------------------------" ; sleep 1; done

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

# Review the configuration before deploying the EKS cluster and managed node group (dry run)

eksctl create cluster --name $CLUSTER_NAME --region=$AWS_DEFAULT_REGION --nodegroup-name=$CLUSTER_NAME-nodegroup --node-type=t3.medium \

--node-volume-size=30 --vpc-public-subnets "$PubSubnet1,$PubSubnet2" --version 1.24 --ssh-access --external-dns-access --dry-run | yh

...
vpc:
  autoAllocateIPv6: false
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: false
    publicAccess: true
  id: vpc-0505d154771a3dfdf
  manageSharedNodeSecurityGroupRules: true
  nat:
    gateway: Disable
  subnets:
    public:
      ap-northeast-2a:
        az: ap-northeast-2a
        cidr: 192.168.1.0/24
        id: subnet-0d98bee5a7c0dfcc6
      ap-northeast-2c:
        az: ap-northeast-2c
        cidr: 192.168.2.0/24
        id: subnet-09dc49de8d899aeb7

# Deploy the EKS cluster and managed node group: about 16 minutes in total (13 + 3 minutes)

eksctl create cluster --name $CLUSTER_NAME --region=$AWS_DEFAULT_REGION --nodegroup-name=$CLUSTER_NAME-nodegroup --node-type=t3.medium \

--node-volume-size=30 --vpc-public-subnets "$PubSubnet1,$PubSubnet2" --version 1.24 --ssh-access --external-dns-access --verbose 4

...

2023-04-23 01:32:22 [▶] setting current-context to admin@myeks.ap-northeast-2.eksctl.io

2023-04-23 01:32:22 [✔] saved kubeconfig as "/root/.kube/config"

Checking EKS Information

# Check the installed krew plugins

$ kubectl krew list

PLUGIN VERSION

ctx v0.9.4

get-all v1.3.8

krew v0.4.3

ns v0.9.4

# ctx: a plugin that makes Kubernetes contexts easier to manage

$ kubectl ctx

iam-root-account@myeks.ap-northeast-2.eksctl.io

$ kubectl ns

default

kube-node-lease

kube-public

kube-system

$ kubectl ns default

Context "iam-root-account@myeks.ap-northeast-2.eksctl.io" modified.

Active namespace is "default".

# Check the EKS cluster information

$ kubectl cluster-info

Kubernetes control plane is running at https://7584FC246F06BDC505EAA7D17918544C.yl4.ap-northeast-2.eks.amazonaws.com

CoreDNS is running at https://7584FC246F06BDC505EAA7D17918544C.yl4.ap-northeast-2.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

$ eksctl get cluster

NAME REGION EKSCTL CREATED

myeks ap-northeast-2 True

$ aws eks describe-cluster --name $CLUSTER_NAME | jq

$ aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint

https://7584FC246F06BDC505EAA7D17918544C.yl4.ap-northeast-2.eks.amazonaws.com

## dig lookup: which resource owns these IPs? An NLB?

$ dig +short 7584FC246F06BDC505EAA7D17918544C.yl4.ap-northeast-2.eks.amazonaws.com

43.201.218.116

15.164.151.17

# Try accessing the EKS API anonymously

$ curl -k -s $(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint)

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}

$ curl -k -s $(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint)/version | jq

{
  "major": "1",
  "minor": "24+",
  "gitVersion": "v1.24.12-eks-ec5523e",
  "gitCommit": "3939bb9475d7f05c8b7b058eadbe679e6c9b5e2e",
  "gitTreeState": "clean",
  "buildDate": "2023-03-20T21:30:46Z",
  "goVersion": "go1.19.7",
  "compiler": "gc",
  "platform": "linux/amd64"
}
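The client here is `v1.25.7-eks-a59e1f0` (from `kubectl version` earlier) and the server is `v1.24.12-eks-ec5523e`. The Kubernetes version skew policy supports kubectl within one minor version (older or newer) of kube-apiserver, so this pair is fine. A small sketch of that check; the function names are hypothetical and it assumes both versions share major version 1.

```shell
#!/bin/sh
# minor_of VERSION: print the minor number of "v1.24.12-eks-ec5523e"-style strings.
minor_of() {
  v=${1#v}
  v=${v%%-*}
  rest=${v#*.}
  echo "${rest%%.*}"
}

# kubectl_skew_ok CLIENT SERVER: succeed if the kubectl minor version is within
# one of the API server's minor version (assumes both are major version 1).
kubectl_skew_ok() {
  c=$(minor_of "$1")
  s=$(minor_of "$2")
  d=$((c - s))
  [ "$d" -ge -1 ] && [ "$d" -le 1 ]
}

# Usage:
# client=$(kubectl version --client -o json | jq -r .clientVersion.gitVersion)
# server=$(curl -k -s "$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint)/version" | jq -r .gitVersion)
# kubectl_skew_ok "$client" "$server" && echo "kubectl/API server skew is supported"
```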

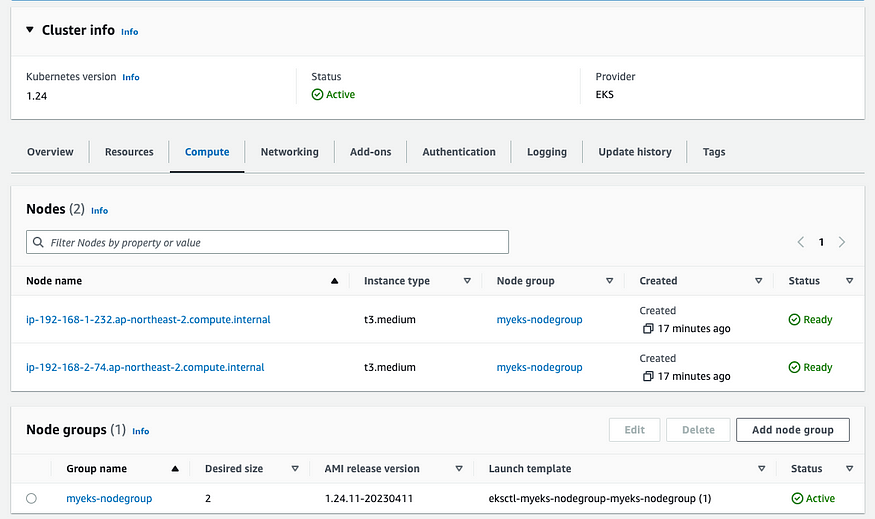

# Check the EKS node group information

$ eksctl get nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

myeks myeks-nodegroup ACTIVE 2023-04-29T06:09:19Z 2 2 2 t3.medium AL2_x86_64 eks-myeks-nodegroup-56c3e5d0-a0ce-f619-50ba-da0318115065 managed

$ aws eks describe-nodegroup --cluster-name $CLUSTER_NAME --nodegroup-name $CLUSTER_NAME-nodegroup | jq

# Check node information: OS and container runtime

$ kubectl describe nodes | grep "node.kubernetes.io/instance-type"

node.kubernetes.io/instance-type=t3.medium

node.kubernetes.io/instance-type=t3.medium

$ kubectl get node

NAME STATUS ROLES AGE VERSION

ip-192-168-1-232.ap-northeast-2.compute.internal Ready <none> 52m v1.24.11-eks-a59e1f0

ip-192-168-2-74.ap-northeast-2.compute.internal Ready <none> 52m v1.24.11-eks-a59e1f0

$ kubectl get node -owide

$ kubectl get node -v=6

I0429 16:03:50.992482 3123 loader.go:374] Config loaded from file: /root/.kube/config

I0429 16:03:51.921099 3123 round_trippers.go:553] GET https://7584FC246F06BDC505EAA7D17918544C.yl4.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 200 OK in 904 milliseconds

NAME STATUS ROLES AGE VERSION

ip-192-168-1-232.ap-northeast-2.compute.internal Ready <none> 53m v1.24.11-eks-a59e1f0

ip-192-168-2-74.ap-northeast-2.compute.internal Ready <none> 53m v1.24.11-eks-a59e1f0

# Check authentication information: covered in detail in week 6 (security)

$ cat /root/.kube/config | yh

$ aws eks get-token --cluster-name $CLUSTER_NAME --region $AWS_DEFAULT_REGION

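# Check Pod information: how does Pod placement differ from on-premises Kubernetes? What stands out about the Pod IPs? Networking is covered in detail in week 2

# The API server, controller manager, and scheduler Pods are not visible because they run in the AWS-managed control plane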

$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

aws-node-fnp9z 1/1 Running 0 53m

aws-node-sc28v 1/1 Running 0 53m

coredns-dc4979556-4q7sq 1/1 Running 0 61m

coredns-dc4979556-mmd2n 1/1 Running 0 61m

kube-proxy-ngbfv 1/1 Running 0 53m

kube-proxy-qdxsx 1/1 Running 0 53m

$ kubectl get pod -n kube-system -o wide

$ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system aws-node-fnp9z 1/1 Running 0 54m

kube-system aws-node-sc28v 1/1 Running 0 54m

kube-system coredns-dc4979556-4q7sq 1/1 Running 0 62m

kube-system coredns-dc4979556-mmd2n 1/1 Running 0 62m

kube-system kube-proxy-ngbfv 1/1 Running 0 54m

kube-system kube-proxy-qdxsx 1/1 Running 0 54m

# Check all resources in the kube-system namespace

$ kubectl get-all -n kube-system

# Check the container images used by all Pods

$ kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon-k8s-cni:v1.11.4-eksbuild.1

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.8.7-eksbuild.3

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/kube-proxy:v1.24.7-minimal-eksbuild.2
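All three images come from the same ECR registry, `602401143452.dkr.ecr.ap-northeast-2.amazonaws.com`. The `docker pull` attempt in this step fails with `no basic auth credentials` because Docker has no login for that registry. A sketch that extracts the registry host and Region from an image reference and then logs in with `aws ecr get-login-password`. The helper names are hypothetical, and whether the pull then succeeds depends on the IAM permissions of the configured user, so treat it as an experiment rather than a required step.

```shell
#!/bin/sh
# registry_of IMAGE: the registry host is everything before the first "/".
registry_of() {
  echo "${1%%/*}"
}

# ecr_region_of IMAGE: for hosts of the form
# <account>.dkr.ecr.<region>.amazonaws.com, print <region>.
ecr_region_of() {
  host=$(registry_of "$1")
  region=${host#*.dkr.ecr.}
  echo "${region%.amazonaws.com}"
}

# Usage (needs AWS credentials and Docker):
# img=602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.8.7-eksbuild.3
# aws ecr get-login-password --region "$(ecr_region_of "$img")" \
#   | docker login --username AWS --password-stdin "$(registry_of "$img")"
# docker pull "$img"
```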

# Try pulling a container image from AWS ECR

$ docker pull 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.8.7-eksbuild.3

Error response from daemon: Head "https://602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/v2/eks/coredns/manifests/v1.8.7-eksbuild.3": no basic auth credentials

Checking Node Details

# Check the node IPs and store the private IPs in variables

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

------------------------------------------------------------------------------

| DescribeInstances |

+-----------------------------+----------------+-----------------+-----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+-----------------------------+----------------+-----------------+-----------+

| myeks-myeks-nodegroup-Node | 192.168.2.74 | 13.209.99.65 | running |

| myeks-myeks-nodegroup-Node | 192.168.1.232 | 13.124.249.184 | running |

| myeks-host | 192.168.1.100 | 13.124.130.243 | running |

+-----------------------------+----------------+-----------------+-----------+

$ N1=192.168.1.232

$ N2=192.168.2.74

# Ping a node IP or a CoreDNS Pod IP from eksctl-host

$ ping <IP>

$ ping -c 2 $N1

$ ping -c 2 $N2

PING 192.168.2.74 (192.168.2.74) 56(84) bytes of data.

--- 192.168.2.74 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1023ms

# Check the node security group ID

$ aws ec2 describe-security-groups --filters Name=group-name,Values=*nodegroup* --query "SecurityGroups[*].[GroupId]" --output text

sg-08d62ba9f00567e34

$ NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*nodegroup* --query "SecurityGroups[*].[GroupId]" --output text)

$ echo $NGSGID

sg-08d62ba9f00567e34

# Add a rule to the node security group so that eksctl-host can reach the nodes (Pods)

$ aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

{
    "Return": true,
    "SecurityGroupRules": [
        {
            "SecurityGroupRuleId": "sgr-0b7b836b2429d13be",
            "GroupId": "sg-08d62ba9f00567e34",
            "GroupOwnerId": "9XXXXXXXXXXX",
            "IsEgress": false,
            "IpProtocol": "-1",
            "FromPort": -1,
            "ToPort": -1,
            "CidrIpv4": "192.168.1.100/32"
        }
    ]
}

# Ping a node IP or a CoreDNS Pod IP from eksctl-host again

$ ping -c 2 $N1

PING 192.168.1.232 (192.168.1.232) 56(84) bytes of data.

64 bytes from 192.168.1.232: icmp_seq=1 ttl=255 time=0.433 ms

64 bytes from 192.168.1.232: icmp_seq=2 ttl=255 time=0.357 ms

--- 192.168.1.232 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1015ms

rtt min/avg/max/mdev = 0.357/0.395/0.433/0.038 ms

$ ping -c 2 $N2

PING 192.168.2.74 (192.168.2.74) 56(84) bytes of data.

64 bytes from 192.168.2.74: icmp_seq=1 ttl=255 time=1.02 ms

64 bytes from 192.168.2.74: icmp_seq=2 ttl=255 time=0.999 ms

--- 192.168.2.74 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.999/1.012/1.026/0.034 ms

# SSH into the worker nodes

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 hostname

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 hostname

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1

Last login: Tue Apr 11 23:23:29 2023 from 205.251.233.109

__| __|_ )

_| ( / Amazon Linux 2 AMI

___|\___|___|

https://aws.amazon.com/amazon-linux-2/

No packages needed for security; 7 packages available

Run "sudo yum update" to apply all updates.

$ exit

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2

Warning: Permanently added '192.168.2.74' (ECDSA) to the list of known hosts.

Last login: Tue Apr 11 23:23:29 2023 from 205.251.233.109

__| __|_ )

_| ( / Amazon Linux 2 AMI

___|\___|___|

https://aws.amazon.com/amazon-linux-2/

No packages needed for security; 7 packages available

Run "sudo yum update" to apply all updates.

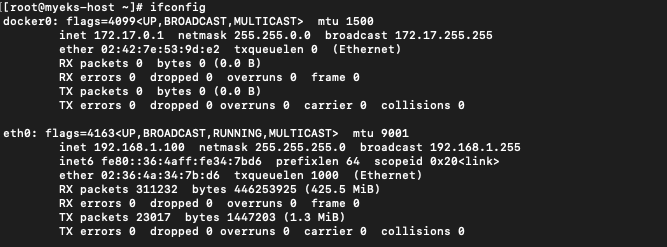

$ exit

Checking Node Network Information

# Verify that the AWS VPC CNI is in use

$ kubectl -n kube-system get ds aws-node

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

aws-node 2 2 2 2 2 <none> 68m

$ kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

amazon-k8s-cni-init:v1.11.4-eksbuild.1

amazon-k8s-cni:v1.11.4-eksbuild.1

# Check the Pod IPs

$ kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

aws-node-fnp9z 1/1 Running 0 61m 192.168.1.232 ip-192-168-1-232.ap-northeast-2.compute.internal <none> <none>

aws-node-sc28v 1/1 Running 0 61m 192.168.2.74 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

coredns-dc4979556-4q7sq 1/1 Running 0 68m 192.168.2.18 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

coredns-dc4979556-mmd2n 1/1 Running 0 68m 192.168.2.159 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

kube-proxy-ngbfv 1/1 Running 0 61m 192.168.2.74 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

kube-proxy-qdxsx 1/1 Running 0 61m 192.168.1.232 ip-192-168-1-232.ap-northeast-2.compute.internal <none> <none>
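Notice that the `aws-node` and `kube-proxy` Pods report the node's own IP: both DaemonSets run on the host network. The CoreDNS Pods instead get their own addresses from the VPC subnet (192.168.2.18 and 192.168.2.159), assigned by the VPC CNI. A small awk sketch that labels each Pod this way; `classify_pod_ips` is a hypothetical name, and it assumes the default `-o wide` column order and EC2 hostnames of the form `ip-A-B-C-D.<region>.compute.internal`.

```shell
#!/bin/sh
# classify_pod_ips: read `kubectl get pod -o wide` output on stdin and print
# "<pod> host" when the Pod IP equals its node's IP (host network) or
# "<pod> vpc" when the Pod has its own address.
classify_pod_ips() {
  awk 'NR > 1 {
    node = $7
    sub(/\..*/, "", node)    # ip-192-168-1-232.ap-northeast-2... -> ip-192-168-1-232
    sub(/^ip-/, "", node)    # -> 192-168-1-232
    gsub(/-/, ".", node)     # -> 192.168.1.232
    print $1, ($6 == node ? "host" : "vpc")
  }'
}

# Usage:
# kubectl get pod -n kube-system -o wide | classify_pod_ips
```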

$ kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-dc4979556-4q7sq 1/1 Running 0 68m 192.168.2.18 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

coredns-dc4979556-mmd2n 1/1 Running 0 68m 192.168.2.159 ip-192-168-2-74.ap-northeast-2.compute.internal <none> <none>

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 02:4c:96:db:60:96 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.232/24 brd 192.168.1.255 scope global dynamic eth0

valid_lft 3253sec preferred_lft 3253sec

inet6 fe80::4c:96ff:fedb:6096/64 scope link

valid_lft forever preferred_lft forever

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:80:f8:d8:a5:28 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.74/24 brd 192.168.2.255 scope global dynamic eth0

valid_lft 3056sec preferred_lft 3056sec

inet6 fe80::880:f8ff:fed8:a528/64 scope link

valid_lft forever preferred_lft forever

3: eni64e2d145f88@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether de:de:57:25:44:ae brd ff:ff:ff:ff:ff:ff link-netns cni-bb939253-a2cd-bb02-50dd-deda206c390c

inet6 fe80::dcde:57ff:fe25:44ae/64 scope link

valid_lft forever preferred_lft forever

4: eni950d59332cb@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 0e:90:8e:ec:ec:dd brd ff:ff:ff:ff:ff:ff link-netns cni-5b7736dd-4f14-ef13-b0d6-f87c8c98c8a4

inet6 fe80::c90:8eff:feec:ecdd/64 scope link

valid_lft forever preferred_lft forever

5: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:82:60:c2:ad:5a brd ff:ff:ff:ff:ff:ff

inet 192.168.2.86/24 brd 192.168.2.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::882:60ff:fec2:ad5a/64 scope link

valid_lft forever preferred_lft forever

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ip -c route

default via 192.168.1.1 dev eth0

169.254.169.254 dev eth0

192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.232

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ip -c route

default via 192.168.2.1 dev eth0

169.254.169.254 dev eth0

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.74

192.168.2.18 dev eni950d59332cb scope link

192.168.2.159 dev eni64e2d145f88 scope link
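On N2, which hosts both CoreDNS Pods, each Pod IP has a host route (`scope link`) pointing at a host-side veth whose name starts with `eni`; these are the `eni...@if3` interfaces in the `ip addr` output above. N1 has no such routes because only host-network Pods (aws-node, kube-proxy) run there. A sketch that extracts the mapping; `pod_routes` is a hypothetical name, and the `eni` prefix is what this cluster's VPC CNI uses, an assumption for other setups.

```shell
#!/bin/sh
# pod_routes: read `ip route` output on stdin and print "<pod-ip> <veth>"
# for routes whose device name starts with "eni" (VPC CNI host-side veths).
pod_routes() {
  awk '$2 == "dev" && $3 ~ /^eni/ { print $1, $3 }'
}

# Usage (plain `ip route`, without -c, to avoid color codes):
# ssh -i ~/.ssh/id_rsa ec2-user@$N2 ip route | pod_routes
```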

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo iptables -t nat -S

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-4ABEWC7E5O735MD7 -s 192.168.2.159/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-4ABEWC7E5O735MD7 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 192.168.2.159:53

-A KUBE-SEP-CKQF2Q5UF24APDQS -s 192.168.2.18/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-CKQF2Q5UF24APDQS -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.2.18:53

-A KUBE-SEP-HCNHW5P64TB52HDE -s 192.168.1.175/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-HCNHW5P64TB52HDE -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.1.175:443

-A KUBE-SEP-JKVKGPFFDHQML2DM -s 192.168.2.159/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-JKVKGPFFDHQML2DM -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.2.159:53

-A KUBE-SEP-NJHK6POUWAYAIE45 -s 192.168.2.18/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-NJHK6POUWAYAIE45 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 192.168.2.18:53

-A KUBE-SEP-ZFK2JWIBPJRUXRRJ -s 192.168.2.95/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZFK2JWIBPJRUXRRJ -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.2.95:443

-A KUBE-SERVICES -d 10.100.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.100.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.100.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 192.168.2.159:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-4ABEWC7E5O735MD7

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 192.168.2.18:53" -j KUBE-SEP-NJHK6POUWAYAIE45

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.1.175:443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-HCNHW5P64TB52HDE

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.2.95:443" -j KUBE-SEP-ZFK2JWIBPJRUXRRJ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 192.168.2.159:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-JKVKGPFFDHQML2DM

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 192.168.2.18:53" -j KUBE-SEP-CKQF2Q5UF24APDQS
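kube-proxy writes the Service-to-endpoint mapping into the rule comments, so the NAT table can be summarised without following every chain. With two endpoints, the first KUBE-SVC rule jumps with `--probability 0.5` and the second catches the rest, so each endpoint receives about half of new connections (in general, rule i of n uses probability 1/(n-i+1)). The `default/kubernetes:https` endpoints, 192.168.1.175 and 192.168.2.95, sit in the worker subnets and should be the EKS-owned ENIs checked at the end of this post. A sed sketch that assumes the comment format shown above; `service_endpoints` is a hypothetical name.

```shell
#!/bin/sh
# service_endpoints: read `iptables -t nat -S` output on stdin and print
# "<namespace/service:port> <endpoint>" from the comments on KUBE-SVC-* rules,
# e.g. --comment "kube-system/kube-dns:dns -> 192.168.2.18:53".
service_endpoints() {
  sed -n 's/^-A KUBE-SVC-[^ ]* -m comment --comment "\([^ ]*\) -> \([^"]*\)".*/\1 \2/p'
}

# Usage:
# ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo iptables -t nat -S | service_endpoints | sort
```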

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo iptables -t nat -S

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-4ABEWC7E5O735MD7 -s 192.168.2.159/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-4ABEWC7E5O735MD7 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 192.168.2.159:53

-A KUBE-SEP-CKQF2Q5UF24APDQS -s 192.168.2.18/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-CKQF2Q5UF24APDQS -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.2.18:53

-A KUBE-SEP-HCNHW5P64TB52HDE -s 192.168.1.175/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-HCNHW5P64TB52HDE -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.1.175:443

-A KUBE-SEP-JKVKGPFFDHQML2DM -s 192.168.2.159/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-JKVKGPFFDHQML2DM -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.2.159:53

-A KUBE-SEP-NJHK6POUWAYAIE45 -s 192.168.2.18/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-NJHK6POUWAYAIE45 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 192.168.2.18:53

-A KUBE-SEP-ZFK2JWIBPJRUXRRJ -s 192.168.2.95/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZFK2JWIBPJRUXRRJ -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.2.95:443

-A KUBE-SERVICES -d 10.100.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.100.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.100.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 192.168.2.159:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-4ABEWC7E5O735MD7

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 192.168.2.18:53" -j KUBE-SEP-NJHK6POUWAYAIE45

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.1.175:443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-HCNHW5P64TB52HDE

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 192.168.2.95:443" -j KUBE-SEP-ZFK2JWIBPJRUXRRJ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 192.168.2.159:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-JKVKGPFFDHQML2DM

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 192.168.2.18:53" -j KUBE-SEP-CKQF2Q5UF24APDQS

Checking Node Process Information

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo systemctl status kubelet

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet-args.conf, 30-kubelet-extra-args.conf

Active: active (running) since Sat 2023-04-29 06:10:38 UTC; 1h 6min ago

Docs: https://github.com/kubernetes/kubernetes

Process: 2829 ExecStartPre=/sbin/iptables -P FORWARD ACCEPT -w 5 (code=exited, status=0/SUCCESS)

Main PID: 2839 (kubelet)

Tasks: 15

Memory: 77.9M

CGroup: /runtime.slice/kubelet.service

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo pstree

systemd-+-2*[agetty]

|-amazon-ssm-agen-+-ssm-agent-worke---8*[{ssm-agent-worke}]

| `-8*[{amazon-ssm-agen}]

|-anacron

|-auditd---{auditd}

|-chronyd

|-containerd---17*[{containerd}]

|-containerd-shim-+-kube-proxy---5*[{kube-proxy}]

| |-pause

| `-11*[{containerd-shim}]

|-containerd-shim-+-bash-+-aws-k8s-agent---7*[{aws-k8s-agent}]

| | `-tee

| |-pause

| `-11*[{containerd-shim}]

|-crond

|-dbus-daemon

|-2*[dhclient]

|-gssproxy---5*[{gssproxy}]

|-irqbalance---{irqbalance}

|-kubelet---14*[{kubelet}]

|-lvmetad

|-master-+-pickup

| `-qmgr

|-rngd

|-rpcbind

|-rsyslogd---2*[{rsyslogd}]

|-sshd---sshd---sshd---sudo---pstree

|-systemd-journal

|-systemd-logind

`-systemd-udevd

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ps afxuwww

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ps axf |grep /usr/bin/containerd

2728 ? Ssl 0:23 /usr/bin/containerd

2985 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 5f2411ffbb969ce6c833a4ff39cee13ff894b027a68ee3b6aa81d6871e6ba526 -address /run/containerd/containerd.sock

2986 ? Sl 0:04 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 2e6084daed42c500356294430d93d77ba4105f65526ac30fe44c400d567b92f6 -address /run/containerd/containerd.sock

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ls /etc/kubernetes/manifests/

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo systemctl status kubelet

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo pstree

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ps afxuwww

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ps axf |grep /usr/bin/containerd

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ls /etc/kubernetes/manifests/

Check node storage information

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

nvme0n1 259:0 0 30G 0 disk

├─nvme0n1p1 259:1 0 30G 0 part /

└─nvme0n1p128 259:2 0 1M 0 part

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 df -hT --type xfs

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme0n1p1 xfs 30G 2.8G 28G 10% /

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 lsblk

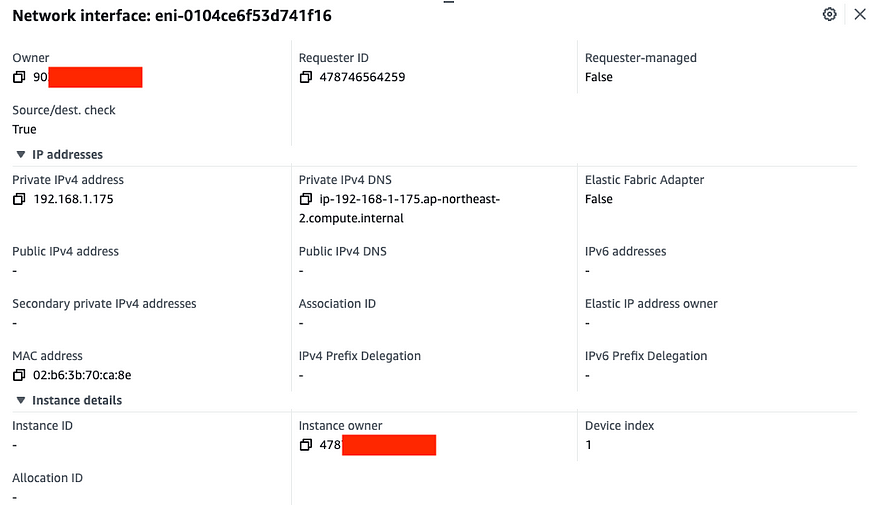

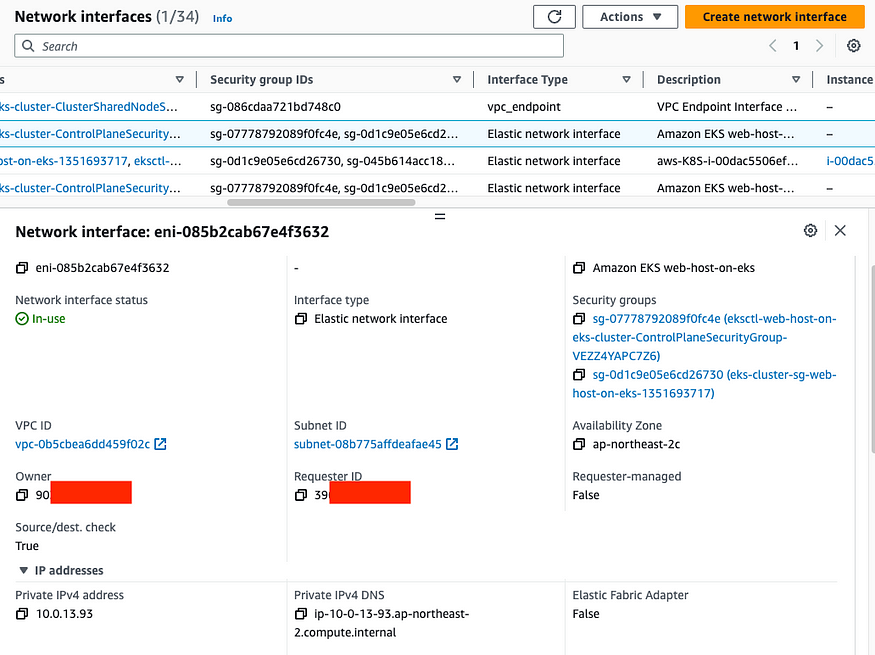

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 df -hT --type xfs

Check the EKS owned ENI

# What is the Peer Address that kubelet and kube-proxy communicate with?

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ss -tnp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 192.168.1.232:49562 10.100.0.1:443 users:(("aws-k8s-agent",pid=3426,fd=7))

ESTAB 0 0 192.168.1.232:22 192.168.1.100:55494 users:(("sshd",pid=23210,fd=3),("sshd",pid=23178,fd=3))

ESTAB 0 0 192.168.1.232:49002 52.95.195.109:443 users:(("ssm-agent-worke",pid=2449,fd=16))

ESTAB 0 0 192.168.1.232:58762 15.164.151.17:443 users:(("kubelet",pid=2839,fd=17))

ESTAB 0 0 192.168.1.232:48450 52.95.194.65:443 users:(("ssm-agent-worke",pid=2449,fd=10))

ESTAB 0 0 192.168.1.232:52100 43.201.218.116:443 users:(("kube-proxy",pid=3101,fd=11))
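In the output above, kubelet and kube-proxy each hold a connection to a public IP on port 443, which in this setup should be the cluster's public API server endpoint. aws-k8s-agent talks to 10.100.0.1:443, the ClusterIP of the `kubernetes` Service. To make that mapping easier to read, here is a small Python sketch that groups `ss -tnp` peers by process name. It assumes the single-space column layout shown here and only looks at `ESTAB` lines. The helper names are hypothetical.

```python
import re
from typing import Dict, List

# One established socket from `ss -tnp`: state, Recv-Q, Send-Q, local, peer, process list.
LINE_RE = re.compile(
    r"^ESTAB\s+\d+\s+\d+\s+(?P<local>\S+)\s+(?P<peer>\S+)\s+users:\((?P<users>.*)\)$"
)
# Each entry inside users:(...) looks like ("kubelet",pid=2839,fd=17).
PROC_RE = re.compile(r'\("(?P<name>[^"]+)",pid=\d+')


def peers_by_process(ss_output: str) -> Dict[str, List[str]]:
    """Group peer address:port values by the name of the process owning the socket."""
    result: Dict[str, List[str]] = {}
    for line in ss_output.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # header line or a non-ESTAB socket
        for proc in PROC_RE.finditer(m.group("users")):
            peers = result.setdefault(proc.group("name"), [])
            if m.group("peer") not in peers:  # sshd lists two pids for one socket
                peers.append(m.group("peer"))
    return result


# Lines copied from the N1 output above.
SAMPLE = """\
State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
ESTAB 0 0 192.168.1.232:49562 10.100.0.1:443 users:(("aws-k8s-agent",pid=3426,fd=7))
ESTAB 0 0 192.168.1.232:22 192.168.1.100:55494 users:(("sshd",pid=23210,fd=3),("sshd",pid=23178,fd=3))
ESTAB 0 0 192.168.1.232:58762 15.164.151.17:443 users:(("kubelet",pid=2839,fd=17))
ESTAB 0 0 192.168.1.232:52100 43.201.218.116:443 users:(("kube-proxy",pid=3101,fd=11))
"""

if __name__ == "__main__":
    for name, peers in sorted(peers_by_process(SAMPLE).items()):
        print(f"{name:15} -> {', '.join(peers)}")
```

Run the same parser against the output captured after `kubectl exec`. Any new peer it reports is the address to look up among the ENIs.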

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ss -tnp

# [Terminal] Open a bash shell in one aws-node DaemonSet pod and leave it running

$ kubectl exec daemonsets/aws-node -it -n kube-system -c aws-node -- bash

# What is the Peer Address of the connection added by the exec? >> Look up that IP among the AWS network interfaces (ENIs)

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 sudo ss -tnp

ESTAB 0 0 192.168.1.232:49562 10.100.0.1:443 users:(("aws-k8s-agent",pid=3426,fd=7))

ESTAB 0 0 192.168.1.232:49002 52.95.195.109:443 users:(("ssm-agent-worke",pid=2449,fd=16))

ESTAB 0 56 192.168.1.232:22 192.168.1.100:50914 users:(("sshd",pid=32163,fd=3),("sshd",pid=32131,fd=3))

ESTAB 0 0 192.168.1.232:58762 15.164.151.17:443 users:(("kubelet",pid=2839,fd=17))

ESTAB 0 0 192.168.1.232:52100 43.201.218.116:443 users:(("kube-proxy",pid=3101,fd=11))

ESTAB 0 0 192.168.1.232:43538 52.95.194.65:443 users:(("ssm-agent-worke",pid=2449,fd=10))

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 sudo ss -tnp

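# The EKS owned ENI is created in the cluster's VPC subnets (next to the worker nodes), but it is attached to an instance managed by AWS

- EKS owned ENI: the worker nodes of the managed node group belong to my account, and so does the ENI (NIC) itself. The instance the ENI is attached to (on the EKS-managed control plane side) is owned by AWS.

- As shown below, the owner and the instance owner of this ENI are different.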
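The same owner comparison can be made on the JSON that `aws ec2 describe-network-interfaces` returns. There, `OwnerId` is the account that owns the ENI and `Attachment.InstanceOwnerId` is the account that owns the instance it is attached to. The Python sketch below lists ENIs where the two differ. The IDs and account numbers in `SAMPLE` are hypothetical, and only the fields the check needs are included.

```python
from typing import Any, Dict, List


def cross_account_enis(response: Dict[str, Any]) -> List[str]:
    """Return IDs of attached ENIs whose instance owner differs from the ENI owner.

    `response` is the parsed JSON of `aws ec2 describe-network-interfaces`.
    """
    found = []
    for eni in response.get("NetworkInterfaces", []):
        attachment = eni.get("Attachment") or {}
        instance_owner = attachment.get("InstanceOwnerId")
        if instance_owner and instance_owner != eni.get("OwnerId"):
            found.append(eni["NetworkInterfaceId"])
    return found


# Hypothetical IDs and account numbers, shaped like the CLI output.
SAMPLE = {
    "NetworkInterfaces": [
        {   # ENI of a worker node: ENI owner and instance owner are both my account
            "NetworkInterfaceId": "eni-0aaaaaaaaaaaaaaa1",
            "OwnerId": "111111111111",
            "Attachment": {"InstanceOwnerId": "111111111111"},
        },
        {   # EKS owned ENI: owned by my account, attached to an AWS-owned instance
            "NetworkInterfaceId": "eni-0bbbbbbbbbbbbbbb2",
            "OwnerId": "111111111111",
            "Attachment": {"InstanceOwnerId": "222222222222"},
        },
        {   # unattached ENI: no Attachment key at all
            "NetworkInterfaceId": "eni-0ccccccccccccccc3",
            "OwnerId": "111111111111",
        },
    ]
}

if __name__ == "__main__":
    print(cross_account_enis(SAMPLE))
```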

Check EC2 metadata (IAM Role): covered in more detail in the security session. link

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 curl -s http://169.254.169.254/latest/

dynamic

meta-data

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/

eksctl-myeks-nodegroup-myeks-node-NodeInstanceRole-VXJ89AGRU0IC

$ ssh -i ~/.ssh/id_rsa ec2-user@$N1 curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/eksctl-myeks-nodegroup-myeks-node-NodeInstanceRole-VXJ89AGRU0IC | jq

{

"Code": "Success",

"LastUpdated": "2023-04-29T06:52:57Z",

"Type": "AWS-HMAC",

"AccessKeyId": "ASIA5EYKJMNUFZTNK4LO",

"SecretAccessKey": "/MFcNXoEFBUBoPrbKrmLuC+TEb/nqoO/qmP6RhXI",

"Token": "IQoJb3JpZ2luX2VjEGcaDmFwLW5vcnRoZWFzdC0yIkcwRQIhALjVAg6vrMH+weIVac/NdBmADOYLUynG1wKmoGxDNn28AiBhAW7O/4rGx+l4xmqg1FLBYxGQkvXkHDL+ZMx8psqbvSrMBQhwEAIaDDkwMzU3NTMzMTY4OCIM94qWPvlCtf0HnUEnKqkF7/55Ro5yYcRpDGL2DzwwCFxK5aEeuiIRwyGeSu6M7khqlM3uYQUQ4FVw/lpilAEY213VSD4bQLs/KySAm9crmw3de76U+NRg+Mnwqf24NhKUzouQo6DIjE8bcn96IEOOcepG+kaZ1NzVPeeLkSrOgzm7KvqHqJo7fVFN9GpuIlWek6UkCz74e/STA/3OVca4KGsyKC0StCfQs21BoLD15wk3Z29Vh5OMcAtFM3gsM3bxlO5iPgFyYnhhLVz+nA+uRpl/gMyIg8HBRcludImx4LNl1am5DKeotIa0lgHWMnF60yFdTzNzifhr0Y8oLeM93q+XxVrlBRIUCKhjdkRY4tohxXHU9jWv7tfA6Cm79IibVdqLmnyqTELw6olYxL5kLOT1y6P+VCgtcLkNCTd9L8WE5FrHp6X4lIxeF9tnB1uUQFPs9lACl2jl6oIAGi/BIZbsM0IUvZttyw8TBYosCy/DBq43uHXo/GcEHhMAitHCkEgfx1ZtO4mSvX5Pit+xKrtqGOz5NLPxIXuHmV4oVwT1MXHgjqDwaJNz2R8LIcurpvaqJNi1r4/G0rrbt1xRcB17ds2XSvtjWOuYzDsM4/23TmzWZdb0worm+hRGmeU7MR70wOhGDYvLaMOsqAqgNs/Hq4yBI6zzxhmIU0MeEfdZinkoFQCrHh9buWTd4kKe5tDTxe1d2lPNwyVxoSOesO6yMoPv2dfG1QscEXS2uF9IcALBj1fraRSh64L2vy7mFuF77eB8gsWsGhe0h0K/na/KsXgzwap96uG4m2K84eTdyzAl15yIEcpkOAhFQsYlMc5CTRSUaKTuiUXE7uf0xRbCOryyHJ0c0Tp41MDjPPtAQccKLm5PqcKOsRUGff4YwVWUze86X90kS9KmQd90x5z7v4b0pGgXMOL9sqIGOrEBRDWxMteODy3cvMW3w3pBmJpNH74kt///x61CBqLHQHqgP7kqd+4GEKtLu+/3WP5DU5Uvd8pvB8kNMmADoTPRwmZHD3YlngiLB71sGvf6X59PPpjJuwtidTEKk5OPxsPtzSyC2RaWnk9XAOqXnshdySznPA/qHrgunFyZqKGZQIo9oHtLeeQzkn6CUatqTaTRRmjQeIiPUNKOq20bkggGOmW18I0o4V0fIX1WwEYxiauW",

"Expiration": "2023-04-29T13:10:09Z"

}

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 curl -s http://169.254.169.254/latest/

dynamic

meta-data

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/

eksctl-myeks-nodegroup-myeks-node-NodeInstanceRole-VXJ89AGRU0IC

$ ssh -i ~/.ssh/id_rsa ec2-user@$N2 curl -s http://169.254.169.254/latest/meta-data/iam/security-credentials/eksctl-myeks-nodegroup-myeks-node-NodeInstanceRole-VXJ89AGRU0IC | jq

{

"Code": "Success",

"LastUpdated": "2023-04-29T06:52:44Z",

"Type": "AWS-HMAC",

"AccessKeyId": "ASIA5EYKJMNUIMIVN4F6",

"SecretAccessKey": "SCjT8WrdZgTbjTJEnDqlDKiws04sI9h4lr0QafAF",

"Token": "IQoJb3JpZ2luX2VjEGcaDmFwLW5vcnRoZWFzdC0yIkgwRgIhAK0tREIHI9Tk6ITNzcuSbmQCIzR0aMOkRd+jAn10QMDWAiEAt3SeAEnoLxMe2y+Zsvlb4F7IREEoBi3067wSrFCmK1YqywUIcBACGgw5MDM1NzUzMzE2ODgiDKkdLtex6fTUj56sOCqoBWujvcV0EeKz5c7AOo09qQFArZQps5y2E5+lXZx+entGZTEVIrLZwMXXl8JhvtrERDzQFiyyYj0ek+rRmst5meyjsvcy5zCdY6qQ0u8oDTNC1iqQz14CXqLZUKnepy33oqargXIfMu+EI874yhunYOzYUD9FPdwX4m482swoRou2Efbj/1cyZSqTBzJWKtwrikKIsGyaLmuAgkngrOVuK43nKYtnzUeX/coZX7nHL5479x8v3cOaIcC+Mtt5j7Y97vXd95/Riz4ioj4do1PYQD5AkwMv9hqPVOuMyqsVqtj/TxAD/GtYi1gpBLeuh46goW6OuTN10kBkLnAK5DA+YNyvKNH+oLO99l0/Ba249fV3WzEBbdDwz/fyWedL4dRsk9kfpQ+24c5qdoseTK6UTmUz0rSfo9cEMGOYP8SwmBsd0hu0G4FJpAtHnorSAxOK6mjKo80mduFB3d7WMZYtDL9wQvV8/A/7w0SA6ks9wH9fW+m/Vtr2oRma/M6AmHrx/LG5GUe3xbhGmgKFdDMbGRHmvU9V8vYWhaX3xuAd06EOaLlfAyNf2wpE4YBz4neFE+ziBRAhIcwjWXr1V4ekP9Ac455dKvwc5VQr4Fa+nmHhDAlClemWaqUn/w5SgoXO10Ah+g7HnGhYr84T3V8GRzt9lplRpC5cbJyHjUgH3Uqq9W00Sb05aLNDwG9YZBq4PmIYAe+RS8MJx8pxr74SxMzVdyql3N/GfYFBR73UrfAFDJrBu+bUkxPlgQEKFloBzbic6uSOJCfDnMxaJ/KnATBUpA/gdxSZo0/9g7UYCaR0YxvwABaq/4YXo2pkX07Z8HvJamLjC8/OKS9kgUTtyD3FUQwztlK+r8d65ypLYa1Lr40jg3y9KSelEL8ictXbIiTM6m6gK4d0MND9sqIGOrABz8yRDOjWsmk+0TH/9SBjsBXxjxDnDWlxU+Fc1vWNClT6kLGqK3sVa/9rPmypC+8f25EHrutL+48vsugH57mygWfdI7oRy65JkMnKxAzXWyIJdzDmbBAPLGUv6ffa9UHYLIjePTUj6VD6rwww+rNFxLbkMoAARFNk4AIo4jidcnjCXLP+7ETUjLVtuvsVRJ4WKMcgjKp3JFGIJRNDKuSaAcB1JAE90SXMJb91irvICs8=",

"Expiration": "2023-04-29T13:22:43Z"

}
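Both nodes receive temporary credentials for the node instance role, each with a `LastUpdated` and an `Expiration` timestamp. The Python sketch below reads such a document, keeps only the non-secret fields, and computes how long the credentials are valid. The timestamps are the N1 values shown above. The secret fields are replaced with placeholders, and the function names are hypothetical.

```python
import json
from datetime import datetime, timedelta, timezone

TIME_FMT = "%Y-%m-%dT%H:%M:%SZ"  # IMDS timestamps are UTC with a trailing "Z"
SAFE_KEYS = ("Code", "Type", "LastUpdated", "Expiration")  # never print the secrets


def parse_utc(ts: str) -> datetime:
    return datetime.strptime(ts, TIME_FMT).replace(tzinfo=timezone.utc)


def summarize(doc: str) -> dict:
    """Keep only the non-secret fields of an IMDS security-credentials document."""
    creds = json.loads(doc)
    return {k: creds[k] for k in SAFE_KEYS}


def validity(doc: str) -> timedelta:
    """Time between the LastUpdated and Expiration timestamps."""
    creds = json.loads(doc)
    return parse_utc(creds["Expiration"]) - parse_utc(creds["LastUpdated"])


def remaining(doc: str, now: datetime) -> timedelta:
    """How long the credentials stay valid after `now` (negative once expired)."""
    return parse_utc(json.loads(doc)["Expiration"]) - now


# Timestamps from the N1 output above; secret fields replaced with placeholders.
N1_DOC = json.dumps({
    "Code": "Success",
    "LastUpdated": "2023-04-29T06:52:57Z",
    "Type": "AWS-HMAC",
    "AccessKeyId": "<redacted>",
    "SecretAccessKey": "<redacted>",
    "Token": "<redacted>",
    "Expiration": "2023-04-29T13:10:09Z",
})

if __name__ == "__main__":
    print(summarize(N1_DOC))
    print(validity(N1_DOC))
```

For N1 this gives a window of 6 hours 17 minutes 12 seconds between the two timestamps.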

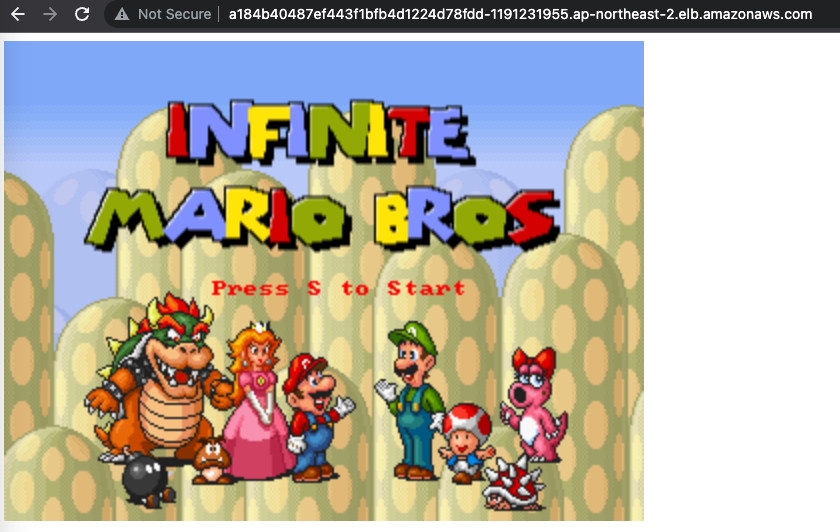

Service/Pod (Mario game) deployment test with CLB

# Terminal 1 (monitoring)

watch -d 'kubectl get pod,svc'

# Deploy the Super Mario Deployment

$ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/1/mario.yaml

$ kubectl apply -f mario.yaml

Every 2.0s: kubectl get pod,svc Sat Apr 29 16:34:28 2023

NAME READY STATUS RESTARTS AGE

pod/mario-687bcfc9cc-2fthp 1/1 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 91m

service/mario LoadBalancer 10.100.95.231 a184b40487ef443f1bfb4d1224d78fdd-1191231955.ap-northeast-2.elb.amazonaws.com 80:31370/TCP 20s

$ cat mario.yaml | yh

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mario
  labels:
    app: mario
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mario
  template:
    metadata:
      labels:
        app: mario
    spec:
      containers:
      - name: mario
        image: pengbai/docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: mario
spec:
  selector:
    app: mario
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  type: LoadBalancer

# Verify the deployment: check that the CLB was provisioned

$ kubectl get deploy,svc,ep mario


NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mario 1/1 1 1 62s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mario LoadBalancer 10.100.95.231 a184b40487ef443f1bfb4d1224d78fdd-1191231955.ap-northeast-2.elb.amazonaws.com 80:31370/TCP 62s

NAME ENDPOINTS AGE

endpoints/mario 192.168.1.155:8080 62s

# Access the Mario game: open the CLB address in a web browser

$ kubectl get svc mario -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Mario URL = http://"$1 }'

Mario URL = http://a184b40487ef443f1bfb4d1224d78fdd-1191231955.ap-northeast-2.elb.amazonaws.com

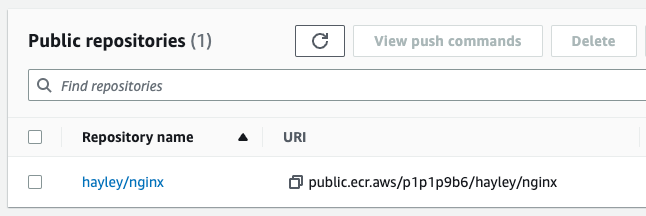

Using an ECR Public repository

# Authenticate to ECR Public

$ aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

$ cat /root/.docker/config.json | jq

# Check basic information about the public registry

$ aws ecr-public describe-registries --region us-east-1 | jq

{

"registries": [

{

"registryId": "9XXXXXX",

"registryArn": "arn:aws:ecr-public::90XXXXXX:registry/90XXXXXXX",

"registryUri": "",

"verified": false,

"aliases": []

}

]

}

# Create a public repository

$ NICKNAME=<your own nickname>

$ NICKNAME=hayley

$ aws ecr-public create-repository --repository-name $NICKNAME/nginx --region us-east-1

{

"repository": {

"repositoryArn": "arn:aws:ecr-public::90XXXXXX:repository/hayley/nginx",

"registryId": "90XXXXXX",

"repositoryName": "hayley/nginx",

"repositoryUri": "public.ecr.aws/p1p1p9b6/hayley/nginx",

"createdAt": "2023-04-29T16:38:17.259000+09:00"

},

"catalogData": {}

}

# Check the created public repository

$ aws ecr-public describe-repositories --region us-east-1 | jq

{

"repositories": [

{

"repositoryArn": "arn:aws:ecr-public::90XXXXXX:repository/hayley/nginx",

"registryId": "90XXXXXX",

"repositoryName": "hayley/nginx",

"repositoryUri": "public.ecr.aws/p1p1p9b6/hayley/nginx",

"createdAt": "2023-04-29T16:38:17.259000+09:00"

}

]

}

$ REPOURI=$(aws ecr-public describe-repositories --region us-east-1 | jq -r .repositories[].repositoryUri)

$ echo $REPOURI

public.ecr.aws/p1p1p9b6/hayley/nginx

# Tag the image

$ docker pull nginx:alpine

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx alpine 8e75cbc5b25c 4 weeks ago 41MB

$ docker tag nginx:alpine $REPOURI:latest

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx alpine 8e75cbc5b25c 4 weeks ago 41MB

public.ecr.aws/p1p1p9b6/hayley/nginx latest 8e75cbc5b25c 4 weeks ago 41MB
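The pushed tag matters because `kubectl run mynginx --image $REPOURI` below gives no tag, so the reference falls back to `:latest`. Here is a simplified Python sketch of how an image reference splits into registry, repository and tag. It follows the usual Docker conventions: a first path component containing "." or ":" (or equal to "localhost") is a registry host, and single-name Docker Hub images live under `library/`. It does not handle digests (`@sha256:...`), and `split_image_ref` is a hypothetical helper.

```python
from typing import Tuple


def split_image_ref(ref: str) -> Tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    Simplified: digests (@sha256:...) are not handled.
    """
    registry, name = "docker.io", ref
    first, sep, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest
    tag = "latest"  # used when the reference carries no tag
    colon = name.rfind(":")
    if colon > name.rfind("/"):  # a ":" after the last "/" introduces the tag
        name, tag = name[:colon], name[colon + 1:]
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # Docker Hub "official" images
    return registry, name, tag


if __name__ == "__main__":
    for ref in ("public.ecr.aws/p1p1p9b6/hayley/nginx:latest",
                "public.ecr.aws/p1p1p9b6/hayley/nginx",
                "nginx:alpine",
                "pengbai/docker-supermario"):
        print(ref, "->", split_image_ref(ref))
```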

# Push the image

$ docker push $REPOURI:latest

# Run a pod

$ kubectl run mynginx --image $REPOURI

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

mario-687bcfc9cc-2fthp 1/1 Running 0 7m27s

mynginx 1/1 Running 0 50s

$ kubectl delete pod mynginx

# Delete the public image

$ aws ecr-public batch-delete-image \

--repository-name $NICKNAME/nginx \

--image-ids imageTag=latest \

--region us-east-1

# Delete the public repository

$ aws ecr-public delete-repository --repository-name $NICKNAME/nginx --force --region us-east-1

Adding and removing nodes in the managed node group (Scale): link

# (Optional) [Terminal 1] Monitor EC2 instance creation

#aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

$ while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text ; echo "------------------------------" ; sleep 1; done

# Check the EKS node group information

$ eksctl get nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

myeks myeks-nodegroup ACTIVE 2023-04-29T06:09:19Z 2 2 2 t3.medium AL2_x86_64 eks-myeks-nodegroup-56c3e5d0-a0ce-f619-50ba-da0318115065 managed

# Scale out from 2 nodes to 3

$ eksctl scale nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup --nodes 3 --nodes-min 3 --nodes-max 6

2023-04-29 16:45:18 [ℹ] scaling nodegroup "myeks-nodegroup" in cluster myeks

2023-04-29 16:45:18 [ℹ] initiated scaling of nodegroup

2023-04-29 16:45:18 [ℹ] to see the status of the scaling run `eksctl get nodegroup --cluster myeks --region ap-northeast-2 --name myeks-nodegroup`
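A managed node group's scaling config has to satisfy minSize ≤ desiredSize ≤ maxSize, and the EKS API also requires maxSize to be at least 1. That is why the scale-in step later in this section lowers minSize together with desiredSize. After the scale-out above, minSize is 3, so requesting 2 nodes on its own should be rejected. The Python sketch below encodes only these bounds. `ScalingConfig` is a hypothetical stand-in, not an AWS SDK type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingConfig:
    min_size: int
    max_size: int
    desired_size: int

    def validate(self) -> None:
        """Raise ValueError unless 0 <= min <= desired <= max and max >= 1."""
        if self.min_size < 0 or self.max_size < 1:
            raise ValueError("minSize must be >= 0 and maxSize >= 1")
        if self.min_size > self.max_size:
            raise ValueError("minSize must not exceed maxSize")
        if not self.min_size <= self.desired_size <= self.max_size:
            raise ValueError("desiredSize must lie between minSize and maxSize")


def is_valid(cfg: ScalingConfig) -> bool:
    try:
        cfg.validate()
    except ValueError:
        return False
    return True


if __name__ == "__main__":
    scale_out = ScalingConfig(min_size=3, max_size=6, desired_size=3)     # eksctl scale ... --nodes 3
    scale_in = ScalingConfig(min_size=2, max_size=2, desired_size=2)      # update-nodegroup-config
    only_desired = ScalingConfig(min_size=3, max_size=6, desired_size=2)  # hypothetical: desired alone
    for cfg in (scale_out, scale_in, only_desired):
        print(cfg, is_valid(cfg))
```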

# Check the nodes

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-192-168-1-232.ap-northeast-2.compute.internal Ready <none> 96m v1.24.11-eks-a59e1f0 192.168.1.232 13.124.249.184 Amazon Linux 2 5.10.176-157.645.amzn2.x86_64 containerd://1.6.19

ip-192-168-2-37.ap-northeast-2.compute.internal Ready <none> 25s v1.24.11-eks-a59e1f0 192.168.2.37 54.180.114.127 Amazon Linux 2 5.10.176-157.645.amzn2.x86_64 containerd://1.6.19

ip-192-168-2-74.ap-northeast-2.compute.internal Ready <none> 96m v1.24.11-eks-a59e1f0 192.168.2.74 13.209.99.65 Amazon Linux 2 5.10.176-157.645.amzn2.x86_64 containerd://1.6.19

$ kubectl get nodes -l eks.amazonaws.com/nodegroup=$CLUSTER_NAME-nodegroup

NAME STATUS ROLES AGE VERSION

ip-192-168-1-232.ap-northeast-2.compute.internal Ready <none> 96m v1.24.11-eks-a59e1f0

ip-192-168-2-37.ap-northeast-2.compute.internal NotReady <none> 4s v1.24.11-eks-a59e1f0

ip-192-168-2-74.ap-northeast-2.compute.internal Ready <none> 96m v1.24.11-eks-a59e1f0

# Scale in from 3 nodes to 2 (takes a while to take effect)

$ aws eks update-nodegroup-config --cluster-name $CLUSTER_NAME --nodegroup-name $CLUSTER_NAME-nodegroup --scaling-config minSize=2,maxSize=2,desiredSize=2

AWS EKS Workshop : Fundamentals

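Pod Affinity and Anti-Affinity : link

- podAffinity: when a Pod is scheduled, the scheduler looks among the running Pods for those matching the affinity's label selector. It then places the new Pod in the same topology domain as those Pods (with topologyKey: kubernetes.io/hostname, the same node).

- podAntiAffinity: the scheduler keeps the new Pod off any node (topology domain) where Pods matching the anti-affinity selector are already running.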
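Here is a toy Python model of the two rules for the required form used below (`requiredDuringSchedulingIgnoredDuringExecution`, an `In` match on the `app` label, `topologyKey: kubernetes.io/hostname`). It is a simplification, not the scheduler's algorithm. It ignores namespaces, resource and taint filters, and the special case that lets the first Pod of a self-affine group start when no matching Pod exists yet. The node names are hypothetical. With the checkout terms used below (affinity to `checkout`, anti-affinity to `service`), the only feasible node is the one already running a checkout pod. That is consistent with both replicas landing on ip-192-168-2-37 in the output further down.

```python
from typing import Dict, List, Set

# Simplified cluster view: node name -> "app" label values of the pods running on it.
Cluster = Dict[str, List[str]]


def feasible_nodes(cluster: Cluster, affinity: Set[str], anti_affinity: Set[str]) -> List[str]:
    """Nodes allowed by required podAffinity/podAntiAffinity terms on the `app` label
    with operator In and topologyKey kubernetes.io/hostname (one node = one domain)."""
    allowed = []
    for node, apps in cluster.items():
        running = set(apps)
        if affinity and not (running & affinity):
            continue  # podAffinity: a matching pod must already run on this node
        if running & anti_affinity:
            continue  # podAntiAffinity: no matching pod may run on this node
        allowed.append(node)
    return sorted(allowed)


if __name__ == "__main__":
    cluster = {"node-1": ["checkout"], "node-2": []}
    print(feasible_nodes(cluster, {"checkout"}, {"service"}))  # checkout deployment terms
    print(feasible_nodes(cluster, set(), {"checkout"}))        # anti-affinity to checkout only
```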

$ k get pods -n checkout

NAME READY STATUS RESTARTS AGE

checkout-79db944cb8-cgf9x 1/1 Running 0 19s

checkout-redis-8654c9d578-tpbvw 1/1 Running 0 6s

checkout-6b94c594c4-99gn6 ip-192-168-1-232.ap-northeast-2.compute.internal

checkout-redis-79df97486b-vt4dt ip-192-168-1-232.ap-northeast-2.compute.internal

$ kubectl get pods -n checkout -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}'

checkout-6b94c594c4-99gn6 ip-192-168-1-232.ap-northeast-2.compute.internal

checkout-redis-6b96ccd7f9-xnfxm ip-192-168-1-232.ap-northeast-2.compute.internal

# Add podAffinity and podAntiAffinity to the checkout deployment

...........
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - checkout
        topologyKey: kubernetes.io/hostname
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - service
        topologyKey: kubernetes.io/hostname
...........

$ kubectl get pods -n checkout \

-o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}'

checkout-5f89cb99d-4q6fk ip-192-168-2-37.ap-northeast-2.compute.internal

checkout-redis-6b96ccd7f9-xnfxm ip-192-168-1-232.ap-northeast-2.compute.internal

$ kubectl scale -n checkout deployment/checkout --replicas 2

$ kubectl get pods -n checkout -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}'

checkout-5f89cb99d-4q6fk ip-192-168-2-37.ap-northeast-2.compute.internal

checkout-5f89cb99d-f24s6 ip-192-168-2-37.ap-northeast-2.compute.internal

checkout-redis-6b96ccd7f9-xnfxm ip-192-168-1-232.ap-northeast-2.compute.internal

# Add podAntiAffinity to the checkout-redis deployment

...........
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - checkout
        topologyKey: kubernetes.io/hostname
...........

$ kubectl scale -n checkout deployment/checkout-redis --replicas 2

$ kubectl get pods -n checkout \

-o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}'

checkout-6b94c594c4-99gn6 ip-192-168-1-232.ap-northeast-2.compute.internal

checkout-6b94c594c4-dbxh6 ip-192-168-1-232.ap-northeast-2.compute.internal

checkout-redis-79df97486b-mg2zb ip-192-168-1-232.ap-northeast-2.compute.internal

checkout-redis-79df97486b-vt4dt ip-192-168-1-232.ap-northeast-2.compute.internal

Taints : link

- Ordinary pods cannot be scheduled onto a node with a NoSchedule taint. A pod can be scheduled onto a tainted node only if it carries a matching toleration.
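Here is a Python sketch of the matching rule between a toleration and a taint, following the Kubernetes semantics. `operator` must be `Equal` (key and value must match) or `Exists` (key only, and an empty key matches every taint). An empty `effect` matches every effect. The model only looks at NoSchedule taints, like the `role=webserver:NoSchedule` taint used here. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Taint:
    key: str
    value: str
    effect: str


@dataclass
class Toleration:
    key: Optional[str] = None
    operator: str = "Equal"  # Kubernetes default
    value: Optional[str] = None
    effect: Optional[str] = None  # None/empty matches every effect


def tolerates(t: Toleration, taint: Taint) -> bool:
    if t.effect and t.effect != taint.effect:
        return False
    if t.operator == "Exists":
        return t.key in (None, "", taint.key)  # empty key + Exists tolerates everything
    if t.operator == "Equal":
        return t.key == taint.key and (t.value or "") == taint.value
    raise ValueError(f"invalid operator: {t.operator!r} (use Equal or Exists)")


def schedulable(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """True if every NoSchedule taint on the node is tolerated (other effects ignored)."""
    return all(any(tolerates(t, taint) for t in tolerations)
               for taint in taints if taint.effect == "NoSchedule")


if __name__ == "__main__":
    node_taints = [Taint("role", "webserver", "NoSchedule")]
    web = Toleration(key="role", operator="Equal", value="webserver", effect="NoSchedule")
    print(schedulable([], node_taints), schedulable([web], node_taints))
```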

$ kubectl taint node ip-192-168-1-232.ap-northeast-2.compute.internal role=webserver:NoSchedule

node/ip-192-168-1-232.ap-northeast-2.compute.internal tainted

$ kubectl describe nodes | grep -i taint

Taints: role=webserver:NoSchedule

Taints: <none>

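# To apply a toleration, add the following (operator must be "Equal" or "Exists"; the taint value goes in "value")

..............
spec:
  template:
    spec:
      tolerations:
      - key: "role"
        operator: "Equal"
        value: "webserver"
        effect: "NoSchedule"
..............

EKS Cluster Endpoint: setting up a Private endpoint yourself (link, link2)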

- Networking Architecture

$ git clone https://github.com/leonli/terraform-vpc-networking

$ cd terraform-vpc-networking

$ cat > terraform.tfvars <<EOF

//AWS

region = "ap-northeast-2"

environment = "k8s"

/* module networking */

vpc_cidr = "10.0.0.0/16"

public_subnets_cidr = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"] //List of Public subnet cidr range

private_subnets_cidr = ["10.0.11.0/24", "10.0.12.0/24", "10.0.13.0/24"] //List of private subnet cidr range

EOF
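Before running terraform, you can sanity-check the CIDR plan in terraform.tfvars: every subnet should sit inside `vpc_cidr`, and no two subnets should overlap. AWS also reserves five addresses in every subnet (the first four and the last), so each /24 leaves 251 usable IPs. That matters on EKS because the VPC CNI hands out pod IPs from these subnets. The Python sketch below checks both points with the standard `ipaddress` module. The function names are hypothetical.

```python
import ipaddress
from itertools import combinations
from typing import List

AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last IP of each subnet


def layout_problems(vpc_cidr: str, subnet_cidrs: List[str]) -> List[str]:
    """Return human-readable problems: subnets outside the VPC or overlapping each other."""
    vpc = ipaddress.ip_network(vpc_cidr)
    nets = [ipaddress.ip_network(c) for c in subnet_cidrs]
    problems = [f"{n} is outside {vpc}" for n in nets if not n.subnet_of(vpc)]
    problems += [f"{a} overlaps {b}" for a, b in combinations(nets, 2) if a.overlaps(b)]
    return problems


def usable_ips(subnet_cidrs: List[str]) -> int:
    """Addresses left for ENIs and pods after the per-subnet AWS reservation."""
    return sum(ipaddress.ip_network(c).num_addresses - AWS_RESERVED_PER_SUBNET
               for c in subnet_cidrs)


if __name__ == "__main__":
    public = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
    private = ["10.0.11.0/24", "10.0.12.0/24", "10.0.13.0/24"]
    print(layout_problems("10.0.0.0/16", public + private) or "layout OK")
    print("usable IPs:", usable_ips(public + private))
```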

$ terraform init

$ terraform plan

$ terraform apply

Outputs:

default_sg_id = "sg-0b5dbb6a6dba178e7"

private_subnets_id = [

"subnet-0014a1d91258145d6",

"subnet-0e27e9fd1c1a25610",

"subnet-08b775affdeafae45",

]

public_route_table = "rtb-044d47c21d43d825a"

public_subnets_id = [

"subnet-070bd1096a802de66",

"subnet-07903f295df79cffc",

"subnet-0a1ebc37245404f72",

]

security_groups_ids = "sg-0b5dbb6a6dba178e7"

vpc_id = "vpc-0b5cbea6dd459f02c"

# export VPC

export VPC_ID=$(terraform output -json | jq -r .vpc_id.value)

# export private subnets

export PRIVATE_SUBNETS_ID_A=$(terraform output -json | jq -r .private_subnets_id.value[0])

export PRIVATE_SUBNETS_ID_B=$(terraform output -json | jq -r .private_subnets_id.value[1])

export PRIVATE_SUBNETS_ID_C=$(terraform output -json | jq -r .private_subnets_id.value[2])

# export public subnets

export PUBLIC_SUBNETS_ID_A=$(terraform output -json | jq -r .public_subnets_id.value[0])

export PUBLIC_SUBNETS_ID_B=$(terraform output -json | jq -r .public_subnets_id.value[1])

export PUBLIC_SUBNETS_ID_C=$(terraform output -json | jq -r .public_subnets_id.value[2])

echo "VPC_ID=$VPC_ID, \

PRIVATE_SUBNETS_ID_A=$PRIVATE_SUBNETS_ID_A, \

PRIVATE_SUBNETS_ID_B=$PRIVATE_SUBNETS_ID_B, \

PRIVATE_SUBNETS_ID_C=$PRIVATE_SUBNETS_ID_C, \

PUBLIC_SUBNETS_ID_A=$PUBLIC_SUBNETS_ID_A, \

PUBLIC_SUBNETS_ID_B=$PUBLIC_SUBNETS_ID_B, \

PUBLIC_SUBNETS_ID_C=$PUBLIC_SUBNETS_ID_C"

- Create an EKS cluster configuration file

cat > /home/ec2-user/environment/eks-cluster.yaml <<EOF
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: web-host-on-eks
  region: ap-northeast-2 # Specify the aws region
  version: "1.24"

privateCluster: # Allows configuring a fully-private cluster in which no node has outbound internet access, and private access to AWS services is enabled via VPC endpoints
  enabled: true
  additionalEndpointServices: # specifies additional endpoint services that must be enabled for private access. Valid entries are: "cloudformation", "autoscaling", "logs".
  - cloudformation
  - logs
  - autoscaling

vpc:
  id: "${VPC_ID}" # Specify the VPC_ID to the eksctl command
  subnets: # Place the EKS cluster in a completely private environment
    private:
      private-ap-east-1a:
        id: "${PRIVATE_SUBNETS_ID_A}"
      private-ap-east-1b:
        id: "${PRIVATE_SUBNETS_ID_B}"
      private-ap-east-1c:
        id: "${PRIVATE_SUBNETS_ID_C}"

managedNodeGroups: # Create a managed node group in private subnets
- name: managed
  labels:
    role: worker
  instanceType: t3.small
  minSize: 3
  desiredCapacity: 3
  maxSize: 10
  privateNetworking: true
  volumeSize: 50
  volumeType: gp2
  volumeEncrypted: true
  iam:
    withAddonPolicies:
      autoScaler: true # enables IAM policy for cluster-autoscaler
      albIngress: true
      cloudWatch: true
  # securityGroups:
  #   attachIDs: ["sg-1", "sg-2"]
  ssh:
    allow: true
    publicKeyPath: ~/.ssh/id_rsa.pub
    # new feature for restricting SSH access to certain AWS security group IDs
  subnets:
  - private-ap-east-1a
  - private-ap-east-1b
  - private-ap-east-1c

cloudWatch:
  clusterLogging:
    # enable specific types of cluster control plane logs
    enableTypes: ["all"]
    # all supported types: "api", "audit", "authenticator", "controllerManager", "scheduler"
    # supported special values: "*" and "all"

addons: # explore more on doc about EKS addons: https://docs.aws.amazon.com/eks/latest/userguide/eks-add-ons.html
- name: vpc-cni # no version is specified so it deploys the default version
  attachPolicyARNs:
  - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
- name: coredns
  version: latest # auto discovers the latest available
- name: kube-proxy
  version: latest

iam:
  withOIDC: true # Enable OIDC identity provider for plugins, explore more on doc: https://docs.aws.amazon.com/eks/latest/userguide/authenticate-oidc-identity-provider.html
  serviceAccounts: # create k8s service accounts(https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/) and associate with IAM policy, see more on: https://docs.aws.amazon.com/eks/latest/userguide/iam-roles-for-service-accounts.html
  - metadata:
      name: aws-load-balancer-controller # create the needed service account for aws-load-balancer-controller while provisioning ALB/ELB by k8s ingress api
      namespace: kube-system
    wellKnownPolicies:
      awsLoadBalancerController: true
  - metadata:
      name: cluster-autoscaler # create the CA needed service account and its IAM policy
      namespace: kube-system
      labels: {aws-usage: "cluster-ops"}
    wellKnownPolicies:
      autoScaler: true
EOF

$ eksctl create cluster -f /home/ec2-user/environment/eks-cluster.yaml

- Creating a fully-private cluster

privateCluster:
  enabled: true

- To allow private access to Autoscaling and CloudWatch logging, configure the following:

privateCluster:
  enabled: true
  additionalEndpointServices:
  # For Cluster Autoscaler
  - "autoscaling"
  # CloudWatch logging
  - "logs"
