
Hello! This post summarizes what I studied in the 3rd cohort of the AWS EKS Workshop Study (AEWS). In this post, we take a detailed look at Amazon EKS networking.

Deploying the lab environment

- myeks-vpc: public/private subnets, each placed in a different AZ

- operator-vpc: public/private subnets in AZ1

- VPC Peering between the two VPCs for internal communication

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/myeks-2week.yaml

# Deploy

aws cloudformation deploy --template-file myeks-2week.yaml --stack-name myeks --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr=<My Home Public IP Address>/32 --region <Region>

# After the CloudFormation stack is deployed, print the operator EC2 IP

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[*].OutputValue' --output text

# SSH into the operator EC2

$ ssh -i ~/Downloads/kp-hayley3.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

# Check node information

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

1. AWS VPC CNI

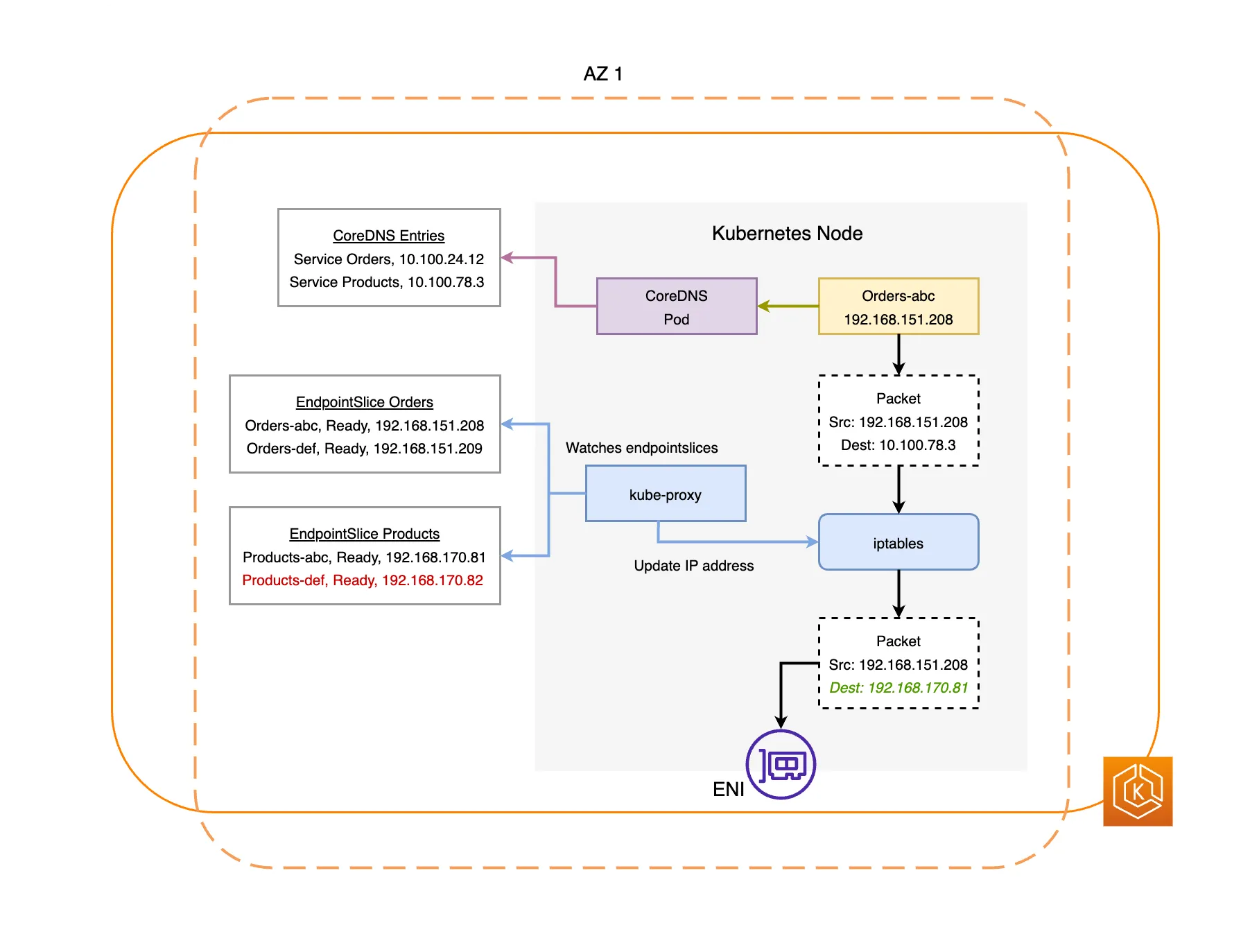

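K8S CNI: The Container Network Interface (CNI) sets up the Kubernetes network environment.

AWS VPC CNI: Assigns IP addresses to pods. Pods get IPs from the same network range as the nodes, so pods and nodes can communicate directly.

- For pod-to-pod traffic, K8S CNIs commonly use overlay networking (VXLAN, IP-in-IP, etc.). The AWS VPC CNI instead communicates directly within the same address range.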
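The sketch below shows the overlay cost in concrete terms. It computes how much MTU is left for pod packets after encapsulation. It assumes three things:

- an IPv4 underlay;
- the 9001-byte node MTU that appears on the worker nodes' eth0 later in this post;
- the per-packet overheads commonly used in CNI MTU guidance (Calico's, for example): 50 bytes for VXLAN and 20 bytes for IP-in-IP.

`pod_mtu` is a hypothetical helper name.

```shell
# Hypothetical helper: MTU left for pod packets after overlay encapsulation.
# Overheads assumed for an IPv4 underlay:
#   vxlan : 50 bytes (outer IPv4 20 + UDP 8 + VXLAN header 8 + inner Ethernet 14)
#   ipip  : 20 bytes (extra outer IPv4 header)
#   none  :  0 bytes (AWS VPC CNI: pods use VPC IPs directly, no encapsulation)
pod_mtu() {
  node_mtu=$1
  mode=$2
  case "$mode" in
    vxlan) overhead=50 ;;
    ipip)  overhead=20 ;;
    none)  overhead=0 ;;
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  echo $((node_mtu - overhead))
}

for m in none ipip vxlan; do
  printf '%-5s -> pod MTU %s\n' "$m" "$(pod_mtu 9001 "$m")"
done
```

With `none` (the AWS VPC CNI case), pods keep the full 9001 bytes. The overlay modes give some of that up to extra headers on every packet.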

Calico CNI

- Calico CNI is installed in the host network namespace, and each node acts as a virtual router. The AWS network only knows ENI IP addresses; it does not know where the pods are. The traditional solution is an overlay network, and Calico supports VXLAN and IP-in-IP encapsulation for this.

- When the cluster is in a single VPC, Calico CNI supports direct pod-to-pod communication.

- When the cluster spans multiple VPCs, Calico CNI supports communication through an overlay network.

AWS VPC CNI

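- Unlike other CNIs (such as Calico), the AWS VPC CNI puts nodes and pods in the same network range to optimize network performance (throughput and latency).

- Each pod is assigned an IP address from the underlying VPC subnet. That pod IP is configured as a secondary IP on one of the instance's ENIs. If the pods on a node need more IPs than the current ENIs provide, another ENI is automatically attached to the node, up to the instance type's ENI limit.

- Pod IPs are real VPC addresses, so no overlay network is needed. Pods can communicate directly whether the cluster is in a single VPC or spans multiple VPCs.

- Secondary IPv4 addresses: the number available depends on the instance type's maximum number of ENIs combined with the number of IPs assignable per ENI.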

# Check the CNI (aws-node) image version

$ kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

# Check the kube-proxy config: it uses iptables mode

$ kubectl describe cm -n kube-system kube-proxy-config

...

mode: "iptables"

...

# Check node IPs

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

-------------------------------------------------------------------------

| DescribeInstances |

+-------------------------+----------------+----------------+-----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+-------------------------+----------------+----------------+-----------+

| eks-hayley-ng1-Node | 192.168.3.94 | 13.209.74.242 | running |

| eks-hayley-ng1-Node | 192.168.2.173 | 3.38.188.24 | running |

| eks-hayley-bastion-EC2 | 192.168.1.100 | 3.36.67.161 | running |

| eks-hayley-ng1-Node | 192.168.1.31 | 3.35.19.95 | running |

+-------------------------+----------------+----------------+-----------+

# Check pod IPs

$ kubectl get pod -n kube-system -o=custom-columns=NAME:.metadata.name,IP:.status.podIP,STATUS:.status.phase

NAME IP STATUS

aws-node-dggfs 192.168.3.94 Running

aws-node-mxwbl 192.168.1.31 Running

aws-node-vtdbn 192.168.2.173 Running

coredns-6777fcd775-kxjnm 192.168.1.243 Running

coredns-6777fcd775-v966q 192.168.3.212 Running

kube-proxy-cg6lr 192.168.2.173 Running

kube-proxy-fhl6z 192.168.1.31 Running

kube-proxy-qqwsl 192.168.3.94 Running

# Check pod names

$ kubectl get pod -A -o name

pod/aws-node-dggfs

pod/aws-node-mxwbl

pod/aws-node-vtdbn

pod/coredns-6777fcd775-kxjnm

pod/coredns-6777fcd775-v966q

pod/kube-proxy-cg6lr

pod/kube-proxy-fhl6z

pod/kube-proxy-qqwsl

# Count the pods

$ kubectl get pod -A -o name | wc -l

8

# Install tools on the worker nodes (N1, N2, N3 hold the worker node IPs)

$ ssh ec2-user@$N1 sudo yum install links tree jq tcpdump -y

$ ssh ec2-user@$N2 sudo yum install links tree jq tcpdump -y

$ ssh ec2-user@$N3 sudo yum install links tree jq tcpdump -y

# Check the CNI log files

$ ssh ec2-user@$N1 tree /var/log/aws-routed-eni

/var/log/aws-routed-eni

├── egress-v4-plugin.log

├── ipamd.log

└── plugin.log

# Check network info: eniY is the host side of a veth pair whose other end is in the pod's network namespace

$ ssh ec2-user@$N1 sudo ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.31/24 fe80::1f:5dff:fe11:d78a/64

eni57775486648@if3 UP fe80::d401:8dff:fe4e:4ba8/64

eth1 UP 192.168.1.173/24 fe80::69:fcff:fe1f:12c6/64

$ ssh ec2-user@$N1 sudo ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 02:1f:5d:11:d7:8a brd ff:ff:ff:ff:ff:ff

inet 192.168.1.31/24 brd 192.168.1.255 scope global dynamic eth0

valid_lft 2145sec preferred_lft 2145sec

inet6 fe80::1f:5dff:fe11:d78a/64 scope link

valid_lft forever preferred_lft forever

3: eni57775486648@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether d6:01:8d:4e:4b:a8 brd ff:ff:ff:ff:ff:ff link-netns cni-20c5bd57-a3b1-c196-4e79-56da6212083b

inet6 fe80::d401:8dff:fe4e:4ba8/64 scope link

valid_lft forever preferred_lft forever

4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 02:69:fc:1f:12:c6 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.173/24 brd 192.168.1.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::69:fcff:fe1f:12c6/64 scope link

valid_lft forever preferred_lft forever

$ ssh ec2-user@$N1 sudo ip -c route

default via 192.168.1.1 dev eth0

169.254.169.254 dev eth0

192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.31

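192.168.1.243 dev eni57775486648 scope link

2. Checking basic network information on the node

- Network namespaces are divided into the host (root) namespace and one namespace per pod.

- Certain pods (kube-proxy, aws-node) use host networking and therefore share the host (root) IP.

- Each of the two ENIs (ENI0, ENI1) can hold 5 secondary private IPs in addition to its own primary IP (t3.medium).

- The coredns pod is connected through a veth pair: the eniY@ifN interface on the host side and eth0 inside the pod.

# Check the coredns pod IPs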

$ kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6777fcd775-kxjnm 1/1 Running 0 53m 192.168.1.243 ip-192-168-1-31.ap-northeast-2.compute.internal <none> <none>

coredns-6777fcd775-v966q 1/1 Running 0 53m 192.168.3.212 ip-192-168-3-94.ap-northeast-2.compute.internal <none> <none>

# Check the node's routing table >> compare it with "Secondary private IPv4 addresses" in the EC2 networking details

$ ssh ec2-user@$N1 sudo ip -c route

default via 192.168.1.1 dev eth0

169.254.169.254 dev eth0

192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.31

192.168.1.243 dev eni57775486648 scope link

# [Terminals 1-3] Monitor the nodes

$ ssh ec2-user@$N1

$ watch -d "ip link | egrep 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

$ ssh ec2-user@$N2

$ watch -d "ip link | egrep 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

$ ssh ec2-user@$N3

$ watch -d "ip link | egrep 'eth|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

# Create the netshoot-pod test Deployment

$ cat <<EOF | kubectl create -f -

apiVersion: apps/v1

kind: Deployment

metadata:

  name: netshoot-pod

spec:

  replicas: 3

  selector:

    matchLabels:

      app: netshoot-pod

  template:

    metadata:

      labels:

        app: netshoot-pod

    spec:

      containers:

      - name: netshoot-pod

        image: nicolaka/netshoot

        command: ["tail"]

        args: ["-f", "/dev/null"]

      terminationGracePeriodSeconds: 0

EOF

# Store the pod names in variables

$ PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].metadata.name})

$ PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].metadata.name})

$ PODNAME3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].metadata.name})

# Check the pods

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

netshoot-pod-7757d5dd99-9jxxs 1/1 Running 0 99s 192.168.1.163 ip-192-168-1-31.ap-northeast-2.compute.internal <none> <none>

netshoot-pod-7757d5dd99-ndrbt 1/1 Running 0 99s 192.168.2.75 ip-192-168-2-173.ap-northeast-2.compute.internal <none> <none>

netshoot-pod-7757d5dd99-xqnkv 1/1 Running 0 99s 192.168.3.203 ip-192-168-3-94.ap-northeast-2.compute.internal <none> <none>

$ kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP

NAME IP

netshoot-pod-7757d5dd99-9jxxs 192.168.1.163

netshoot-pod-7757d5dd99-ndrbt 192.168.2.75

netshoot-pod-7757d5dd99-xqnkv 192.168.3.203

- When a pod is created, a new eniY@ifN interface appears on the worker node, and a matching route is added to its routing table.
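Each pod gets a /32 host route that points at its host-side `eniXXXX` veth. That means you can recover the pod-IP-to-interface mapping from `ip route` alone. This is a minimal sketch, assuming routes in the `<ip> dev <iface> scope link` form shown below. `pod_veths` is a hypothetical helper, and the sample input is copied from node 3's routing table in this post.

```shell
# Hypothetical helper: print "<pod IP> <host veth>" for every route whose
# device name starts with "eni" (the AWS VPC CNI host-side veth prefix).
# Reads `ip route` output on stdin.
pod_veths() {
  awk '$2 == "dev" && $3 ~ /^eni/ { print $1, $3 }'
}

# Sample input: node 3's routing table from this post.
# On a real node you would run: ip route | pod_veths
cat <<'EOF' | pod_veths
default via 192.168.3.1 dev eth0
169.254.169.254 dev eth0
192.168.3.0/24 dev eth0 proto kernel scope link src 192.168.3.94
192.168.3.203 dev enic4b76dc5b9f scope link
192.168.3.212 dev eni05a6151560c scope link
EOF
```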

# Check network interfaces on node 3

$ ssh ec2-user@$N3

----------------

# ip -br -c addr show

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.3.94/24 fe80::8f0:dbff:fe30:6aea/64

eni05a6151560c@if3 UP fe80::b831:13ff:fe92:8e81/64

eth1 UP 192.168.3.131/24 fe80::83e:6ff:feb2:554a/64

enic4b76dc5b9f@if3 UP fe80::e426:7eff:fead:71c/64

# ip -c link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 0a:f0:db:30:6a:ea brd ff:ff:ff:ff:ff:ff

3: eni05a6151560c@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP mode DEFAULT group default

link/ether ba:31:13:92:8e:81 brd ff:ff:ff:ff:ff:ff link-netns cni-c03c7d67-b375-61e1-1ca0-b9331dd5a50a

4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 0a:3e:06:b2:55:4a brd ff:ff:ff:ff:ff:ff

5: enic4b76dc5b9f@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP mode DEFAULT group default

link/ether e6:26:7e:ad:07:1c brd ff:ff:ff:ff:ff:ff link-netns cni-3212b379-e026-d013-b1da-0b64851f7c68

# ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:f0:db:30:6a:ea brd ff:ff:ff:ff:ff:ff

inet 192.168.3.94/24 brd 192.168.3.255 scope global dynamic eth0

valid_lft 2762sec preferred_lft 2762sec

inet6 fe80::8f0:dbff:fe30:6aea/64 scope link

valid_lft forever preferred_lft forever

3: eni05a6151560c@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether ba:31:13:92:8e:81 brd ff:ff:ff:ff:ff:ff link-netns cni-c03c7d67-b375-61e1-1ca0-b9331dd5a50a

inet6 fe80::b831:13ff:fe92:8e81/64 scope link

valid_lft forever preferred_lft forever

4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 0a:3e:06:b2:55:4a brd ff:ff:ff:ff:ff:ff

inet 192.168.3.131/24 brd 192.168.3.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::83e:6ff:feb2:554a/64 scope link

valid_lft forever preferred_lft forever

5: enic4b76dc5b9f@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether e6:26:7e:ad:07:1c brd ff:ff:ff:ff:ff:ff link-netns cni-3212b379-e026-d013-b1da-0b64851f7c68

inet6 fe80::e426:7eff:fead:71c/64 scope link

valid_lft forever preferred_lft forever

# ip route # 혹은 route -n

default via 192.168.3.1 dev eth0

169.254.169.254 dev eth0

192.168.3.0/24 dev eth0 proto kernel scope link src 192.168.3.94

192.168.3.203 dev enic4b76dc5b9f scope link

192.168.3.212 dev eni05a6151560c scope link

# Print the PID that owns the most recently created network namespace (-t net selects network namespaces)

# sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1

22648

# Store that PID in a variable

MyPID=$(sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1)

# sudo nsenter -t $MyPID -n ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 1a:d3:a4:14:85:28 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.3.203/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::18d3:a4ff:fe14:8528/64 scope link

valid_lft forever preferred_lft forever

# sudo nsenter -t $MyPID -n ip -c route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

# Exec into the test pod and start a shell

$ kubectl exec -it $PODNAME1 -- zsh

dP dP dP

88 88 88

88d888b. .d8888b. d8888P .d8888b. 88d888b. .d8888b. .d8888b. d8888P

88' `88 88ooood8 88 Y8ooooo. 88' `88 88' `88 88' `88 88

88 88 88. ... 88 88 88 88 88. .88 88. .88 88

dP dP `88888P' dP `88888P' dP dP `88888P' `88888P' dP

Welcome to Netshoot! (github.com/nicolaka/netshoot)

# netshoot-pod-7757d5dd99-9jxxs ~ ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether ce:c9:88:d3:48:ed brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.163/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ccc9:88ff:fed3:48ed/64 scope link

valid_lft forever preferred_lft forever

# netshoot-pod-7757d5dd99-9jxxs ~ ip -c route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

# netshoot-pod-7757d5dd99-9jxxs ~ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 169.254.1.1 0.0.0.0 UG 0 0 0 eth0

169.254.1.1 0.0.0.0 255.255.255.255 UH 0 0 0 eth0

# netshoot-pod-7757d5dd99-9jxxs ~ ping -c 1 192.168.2.75

PING 192.168.2.75 (192.168.2.75) 56(84) bytes of data.

64 bytes from 192.168.2.75: icmp_seq=1 ttl=62 time=1.15 ms

--- 192.168.2.75 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.153/1.153/1.153/0.000 ms

# netshoot-pod-7757d5dd99-9jxxs ~ ps

PID USER TIME COMMAND

1 root 0:00 tail -f /dev/null

7 root 0:01 zsh

115 root 0:00 ps

# netshoot-pod-7757d5dd99-9jxxs ~ cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local ap-northeast-2.compute.internal

nameserver 10.100.0.10

options ndots:5

# Run ip addr in pod 2

$ kubectl exec -it $PODNAME2 -- ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether fa:ad:9c:bf:1d:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.2.75/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::f8ad:9cff:febf:1d25/64 scope link

valid_lft forever preferred_lft forever

# Run ip addr in pod 3 (brief output)

$ kubectl exec -it $PODNAME3 -- ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0@if5 UP 192.168.3.203/32 fe80::18d3:a4ff:fe14:8528/64

3. Pod-to-pod communication across nodes (no overlay overhead!)

- With the AWS VPC CNI, pods communicate directly in a VPC-native way, with no separate overlay technology.

- Pod-to-pod communication test: confirm that traffic flows without any NAT.
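The `ttl=62` in the ping replies below is a quick way to confirm there is no hidden tunnel hop. This minimal sketch assumes the sender uses the Linux default initial TTL of 64. Each node kernel that forwards the packet between its veth and its ENI decrements the TTL once. Two decrements are therefore consistent with the source node and the destination node each forwarding once. `ttl_hops` is a hypothetical helper.

```shell
# Hypothetical helper: estimate forwarding hops from a received TTL.
# $1 = received TTL, $2 = assumed initial TTL (default 64, the Linux default).
ttl_hops() {
  initial=${2:-64}
  echo $((initial - $1))
}

# ttl=62 in the pod-to-pod pings: node 1 forwards veth->eth, node 2 forwards eth->veth
echo "pod-to-pod hops: $(ttl_hops 62)"
```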

# Store the pod IPs in variables

PODIP1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].status.podIP})

PODIP2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].status.podIP})

PODIP3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].status.podIP})

# Ping pod 2 from pod 1

$ kubectl exec -it $PODNAME1 -- ping -c 2 $PODIP2

PING 192.168.2.75 (192.168.2.75) 56(84) bytes of data.

64 bytes from 192.168.2.75: icmp_seq=1 ttl=62 time=0.929 ms

64 bytes from 192.168.2.75: icmp_seq=2 ttl=62 time=0.820 ms

--- 192.168.2.75 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1029ms

rtt min/avg/max/mdev = 0.820/0.874/0.929/0.054 ms

# Ping pod 3 from pod 2

$ kubectl exec -it $PODNAME2 -- ping -c 2 $PODIP3

PING 192.168.3.203 (192.168.3.203) 56(84) bytes of data.

64 bytes from 192.168.3.203: icmp_seq=1 ttl=62 time=1.53 ms

64 bytes from 192.168.3.203: icmp_seq=2 ttl=62 time=1.24 ms

--- 192.168.3.203 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.244/1.386/1.528/0.142 ms

# Ping pod 1 from pod 3

$ kubectl exec -it $PODNAME3 -- ping -c 2 $PODIP1

PING 192.168.1.163 (192.168.1.163) 56(84) bytes of data.

64 bytes from 192.168.1.163: icmp_seq=1 ttl=62 time=1.36 ms

64 bytes from 192.168.1.163: icmp_seq=2 ttl=62 time=1.13 ms

--- 192.168.1.163 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.133/1.244/1.355/0.111 ms

# Worker node EC2: check with tcpdump

[ec2-user@ip-192-168-1-31 ~]$ sudo tcpdump -i any -nn icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

[ec2-user@ip-192-168-1-31 ~]$ sudo tcpdump -i eth1 -nn icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

[ec2-user@ip-192-168-1-31 ~]$ sudo tcpdump -i eth0 -nn icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

[Worker node 1]

# Check the routing policy database (ip rule)

[ec2-user@ip-192-168-1-31 ~]$ ip rule

0: from all lookup local

512: from all to 192.168.1.243 lookup main

512: from all to 192.168.1.163 lookup main

1024: from all fwmark 0x80/0x80 lookup main

1536: from 192.168.1.163 lookup 2

32766: from all lookup main

32767: from all lookup default
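To read the rules above: the kernel walks them in ascending priority and uses the table of the first rule that matches. The sketch below uses node 1's rules from this post and models only the `from`/`to` selectors. It leaves out the `fwmark` rule at priority 1024 and the `local` table. `RULES` and `select_table` are hypothetical names.

The model shows two things:

- Traffic from 192.168.1.163 is steered to table 2. The `from ... lookup 2` rule suggests this pod's IP comes from the secondary ENI.
- Traffic destined to a local pod always uses `main`.

```shell
# Simplified model of node 1's `ip rule` list: "priority from to table",
# already sorted by priority. The fwmark rule (1024) and local table (0) are omitted.
RULES='512 all 192.168.1.243 main
512 all 192.168.1.163 main
1536 192.168.1.163 all 2
32766 all all main'

# Hypothetical helper: print the table chosen by the first matching rule.
select_table() {
  src=$1
  dst=$2
  printf '%s\n' "$RULES" | while read -r prio from to table; do
    if { [ "$from" = all ] || [ "$from" = "$src" ]; } &&
       { [ "$to" = all ] || [ "$to" = "$dst" ]; }; then
      echo "$table"
      break
    fi
  done
}

select_table 192.168.1.163 192.168.2.75    # pod on eth1 -> remote pod: table 2
select_table 192.168.2.75 192.168.1.163    # anything -> local pod: table main
```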

# Check the routing tables

[ec2-user@ip-192-168-1-31 ~]$ ip route show table local

broadcast 127.0.0.0 dev lo proto kernel scope link src 127.0.0.1

local 127.0.0.0/8 dev lo proto kernel scope host src 127.0.0.1

local 127.0.0.1 dev lo proto kernel scope host src 127.0.0.1

broadcast 127.255.255.255 dev lo proto kernel scope link src 127.0.0.1

broadcast 192.168.1.0 dev eth0 proto kernel scope link src 192.168.1.31

broadcast 192.168.1.0 dev eth1 proto kernel scope link src 192.168.1.173

local 192.168.1.31 dev eth0 proto kernel scope host src 192.168.1.31

local 192.168.1.173 dev eth1 proto kernel scope host src 192.168.1.173

broadcast 192.168.1.255 dev eth0 proto kernel scope link src 192.168.1.31

broadcast 192.168.1.255 dev eth1 proto kernel scope link src 192.168.1.173

# Main table: the default route sends traffic out through eth0

[ec2-user@ip-192-168-1-31 ~]$ ip route show table main

default via 192.168.1.1 dev eth0

169.254.169.254 dev eth0

192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.31

192.168.1.163 dev eni04aaba534ed scope link

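192.168.1.243 dev eni57775486648 scope link

4. Pod-to-external communication

When a pod talks to an external destination, an iptables SNAT rule rewrites its source IP to the node's eth0 IP.

Depending on the VPC CNI's external source network address translation (SNAT) setting, traffic to external (internet) destinations is either SNATed or sent without SNAT. [Reference]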
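The SNAT rules shown later in this section boil down to one test. If the destination is outside the VPC CIDR, the pod's source address is rewritten to the node's primary ENI (eth0) IP. This is a minimal sketch of that decision. It assumes the VPC CIDR `192.168.0.0/16` and node IP `192.168.2.173` from this lab, and ignores the `! -o vlan+` and `! --dst-type LOCAL` conditions for brevity. `ip2int`, `in_cidr`, and `snat_source` are hypothetical helpers.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  echo "$1" | awk -F. '{ printf "%.0f\n", (($1 * 256 + $2) * 256 + $3) * 256 + $4 }'
}

# Hypothetical helper: succeed if IP $1 is inside CIDR $2 (e.g. 192.168.0.0/16).
in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  size=$(( 1 << (32 - bits) ))
  ip_n=$(ip2int "$1")
  net_n=$(ip2int "$net")
  [ $((ip_n / size)) -eq $((net_n / size)) ]
}

# Mirrors AWS-SNAT-CHAIN-0/1: SNAT only when the destination leaves the VPC CIDR.
VPC_CIDR=192.168.0.0/16
NODE_IP=192.168.2.173
snat_source() {
  pod_ip=$1
  dst=$2
  if in_cidr "$dst" "$VPC_CIDR"; then
    echo "$pod_ip"      # stays inside the VPC: the pod IP is kept
  else
    echo "$NODE_IP"     # leaves the VPC: SNAT to the node's eth0 IP
  fi
}

snat_source 192.168.2.75 192.168.1.163     # pod-to-pod, no NAT
snat_source 192.168.2.75 142.250.196.100   # pod-to-internet, SNAT
```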

# Operator EC2: ping an external host from the pod-1 shell

$ kubectl exec -it $PODNAME1 -- ping -c 1 www.google.com

PING www.google.com (142.250.196.100) 56(84) bytes of data.

64 bytes from nrt12s35-in-f4.1e100.net (142.250.196.100): icmp_seq=1 ttl=104 time=28.5 ms

--- www.google.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 28.467/28.467/28.467/0.000 ms

$ kubectl exec -it $PODNAME1 -- ping -i 0.1 www.google.com

PING www.google.com (142.250.196.100) 56(84) bytes of data.

64 bytes from nrt12s35-in-f4.1e100.net (142.250.196.100): icmp_seq=1 ttl=104 time=28.6 ms

# Worker node EC2: check with tcpdump

sudo tcpdump -i any -nn icmp

sudo tcpdump -i eth0 -nn icmp

# Worker node EC2: check its public IP (this output is from node 2, 192.168.2.173)

$ curl -s ipinfo.io/ip ; echo

3.38.188.24

# Operator EC2: check external access from pod-1. Which public IP does it use? (pod-1 runs on node 1, 192.168.1.31)

## The right way to check the weather (link)

$ kubectl exec -it $PODNAME1 -- curl -s ipinfo.io/ip ; echo

3.35.19.95

$ kubectl exec -it $PODNAME1 -- curl -s wttr.in/seoul

┌─────────────┐

┌──────────────────────────────┬───────────────────────┤ Sat 06 May ├───────────────────────┬──────────────────────────────┐

│ Morning │ Noon └──────┬──────┘ Evening │ Night │

├──────────────────────────────┼──────────────────────────────┼──────────────────────────────┼──────────────────────────────┤

│ .-. Heavy rain │ .-. Moderate rain │ _`/"".-. Patchy light d…│ _`/"".-. Patchy rain po…│

│ ( ). +12(10) °C │ ( ). +12(10) °C │ ,\_( ). +12(11) °C │ ,\_( ). +12(11) °C │

│ (___(__) ↙ 22-28 km/h │ (___(__) ↙ 18-22 km/h │ /(___(__) ↙ 12-15 km/h │ /(___(__) ↙ 10-15 km/h │

│ ‚‘‚‘‚‘‚‘ 5 km │ ‚‘‚‘‚‘‚‘ 7 km │ ‘ ‘ ‘ ‘ 5 km │ ‘ ‘ ‘ ‘ 9 km │

│ ‚’‚’‚’‚’ 12.4 mm | 79% │ ‚’‚’‚’‚’ 6.4 mm | 80% │ ‘ ‘ ‘ ‘ 0.2 mm | 85% │ ‘ ‘ ‘ ‘ 0.2 mm | 77% │

└──────────────────────────────┴──────────────────────────────┴──────────────────────────────┴──────────────────────────────┘

$ kubectl exec -it $PODNAME1 -- curl -s 'wttr.in/seoul?format=3'

seoul: 🌦 +13°C

$ kubectl exec -it $PODNAME1 -- curl -s wttr.in/Moon

.------------.

.--' o . . `--.

.-' . O . . `-.

.-'@ @@@@@@@ . @@@@@ `-

/@@@ @@@@@@@@@@@ @@@@@@@ . \

./ o @@@@@@@@@@@ @@@@@@@ . \

/@@ o @@@@@@@@@@@. @@@@@@@ O \

/@@@@ . @@@@@@@o @@@@@@@@@@ @@@

|@@@@@ . @@@@@@@@@@@@@ o @@@@|

/@@@@@ O `.-./ . @@@@@@@@@@@@ @@ Full Moon +

| @@@@ --`-' o @@@@@@@@ @@@@ | 0 13:23:08

|@ @@@ ` o . @@ . @@@@@@@ | Last Quarter -

| @@ @ .-. @@@ @@@@@@@ | 6 7:29:30

\ . @ @@@ `-' . @@@@ @@@@ o

| @@ @@@@@ . @@ . |

\ @@@@ @\@@ / . O . o .

\ o @@ \ \ / . . /

`\ . .\.-.___ . . .-. /

\ `-' `-' /

`-. o / | o O . .-

`-. / . . .-'

`--. . .--'

`------------'

$ kubectl exec -it $PODNAME1 -- curl -s wttr.in/:help

# Worker node EC2 (node 2)

$ ip rule

0: from all lookup local

512: from all to 192.168.2.75 lookup main

1024: from all fwmark 0x80/0x80 lookup main

32766: from all lookup main

32767: from all lookup default

$ ip route show table main

default via 192.168.2.1 dev eth0

169.254.169.254 dev eth0

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.173

192.168.2.75 dev enie745cdee225 scope link

$ sudo iptables -L -n -v -t nat

$ sudo iptables -t nat -S

# When a pod talks to an external destination, it is SNATed by the 'AWS-SNAT-CHAIN-0' and 'AWS-SNAT-CHAIN-1' rules below

# Note: the --to-source IP is the address of eth0 (the first ENI)

# How --random-fully works: link 1, link 2

sudo iptables -t nat -S | grep 'A AWS-SNAT-CHAIN'

-A AWS-SNAT-CHAIN-0 ! -d 192.168.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j AWS-SNAT-CHAIN-1

-A AWS-SNAT-CHAIN-1 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 192.168.2.173 --random-fully

## Pod egress traffic does not match 'mark 0x4000/0x4000' below, so it RETURNs here

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

...

# The counters show matches on AWS-SNAT-CHAIN-0 and AWS-SNAT-CHAIN-1: traffic to destinations outside 192.168.0.0/16 leaves with its source SNATed to the node's eth0 IP (192.168.2.173 in the rule above)

$ sudo iptables -t filter --zero; sudo iptables -t nat --zero; sudo iptables -t mangle --zero; sudo iptables -t raw --zero

$ watch -d 'sudo iptables -v --numeric --table nat --list AWS-SNAT-CHAIN-0; echo ; sudo iptables -v --numeric --table nat --list AWS-SNAT-CHAIN-1; echo ; sudo iptables -v --numeric --table nat --list KUBE-POSTROUTING'

# Check conntrack

$ sudo conntrack -L -n |grep -v '169.254.169'

tcp 6 89 TIME_WAIT src=192.168.2.173 dst=52.95.197.186 sport=33472 dport=443 src=52.95.197.186 dst=192.168.2.173 sport=443 dport=58782 [ASSURED] mark=128 use=1

udp 17 10 src=192.168.2.173 dst=121.162.54.1 sport=55936 dport=123 src=121.162.54.1 dst=192.168.2.173 sport=123 dport=20668 mark=128 use=1

tcp 6 431991 ESTABLISHED src=192.168.2.173 dst=52.95.194.65 sport=44400 dport=443 src=52.95.194.65 dst=192.168.2.173 sport=443 dport=37190 [ASSURED] mark=128 use=1

tcp 6 1 CLOSE src=192.168.2.173 dst=52.95.194.65 sport=37078 dport=443 src=52.95.194.65 dst=192.168.2.173 sport=443 dport=64516 [ASSURED] mark=128 use=1

tcp 6 431999 ESTABLISHED src=192.168.2.173 dst=3.37.247.124 sport=41790 dport=443 src=3.37.247.124 dst=192.168.2.173 sport=443 dport=18671 [ASSURED] mark=128 use=1

tcp 6 431985 ESTABLISHED src=192.168.2.173 dst=52.95.195.121 sport=57922 dport=443 src=52.95.195.121 dst=192.168.2.173 sport=443 dport=34794 [ASSURED] mark=128 use=1

conntrack v1.4.4 (conntrack-tools): 166 flow entries have been shown.

tcp 6 431999 ESTABLISHED src=192.168.2.173 dst=3.37.247.124 sport=34472 dport=443 src=3.37.247.124 dst=192.168.2.173 sport=443 dport=57051 [ASSURED] mark=128 use=1
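In the conntrack entries above, the original tuple and the reply tuple differ only in the node-side port (for example `sport=33472` vs. reply `dport=58782`). That is the footprint of source NAT with port randomization. It appears consistent with the `SNAT --to-source 192.168.2.173 --random-fully` rule also matching the node's own outbound traffic.

This minimal sketch flags such entries, assuming the `conntrack -L` line format shown above. `nat_check` is a hypothetical helper.

```shell
# Hypothetical helper: read `conntrack -L` lines on stdin and report whether the
# original source (src:sport) differs from where replies are sent (reply dst:dport).
# A difference means the kernel applied source NAT (address and/or port).
nat_check() {
  awk '{
    split("", seen); split("", val)
    for (i = 1; i <= NF; i++) {
      n = split($i, kv, "=")
      if (n == 2 && (kv[1] == "src" || kv[1] == "dst" || kv[1] == "sport" || kv[1] == "dport")) {
        seen[kv[1]]++
        val[kv[1] seen[kv[1]]] = kv[2]   # "src1" = original tuple, "src2" = reply tuple
      }
    }
    orig  = val["src1"] ":" val["sport1"]
    reply = val["dst2"] ":" val["dport2"]
    tag = (orig == reply) ? "no-nat" : "source-nat"
    print tag, orig, "->", reply
  }'
}

# Sample entry copied from the output above
echo 'tcp 6 89 TIME_WAIT src=192.168.2.173 dst=52.95.197.186 sport=33472 dport=443 src=52.95.197.186 dst=192.168.2.173 sport=443 dport=58782 [ASSURED] mark=128 use=1' | nat_check
```

On a node, `sudo conntrack -L -n | nat_check` would apply the same check to every entry.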

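5. Limits on the number of pods per node

- Secondary IPv4 addresses: the number available depends on the instance type's maximum number of ENIs combined with the number of IPs assignable per ENI.

- The number of pods a worker node can run is therefore limited by its instance type: it depends on the instance type's maximum ENI count and the maximum number of IPs per ENI. aws-node and kube-proxy use the host's IP, so they do not consume pod IPs. This is why the formula adds 2.

- Max pods = (Number of network interfaces for the instance type × (the number of IP addresses per network interface - 1)) + 2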
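The formula above translates directly into a small function. This sketch uses the `MaxENI`/`IPv4addr` values from the `describe-instance-types` output in this post. `max_pods` is a hypothetical helper, and it covers only the default secondary-IP mode (not prefix delegation).

```shell
# Hypothetical helper: max pods per node for the AWS VPC CNI (secondary IP mode).
# $1 = max ENIs, $2 = IPv4 addresses per ENI.
# Each ENI's primary IP is not handed to pods (hence -1); +2 accounts for
# aws-node and kube-proxy, which use host networking and need no pod IP.
max_pods() {
  echo $(( $1 * ($2 - 1) + 2 ))
}

# Values from `aws ec2 describe-instance-types` in this post
for spec in "t3.nano 2 2" "t3.small 3 4" "t3.medium 3 6" "t3.large 3 12" "t3.xlarge 4 15"; do
  set -- $spec
  printf '%-10s max pods = %s\n' "$1" "$(max_pods "$2" "$3")"
done
```

For t3.medium this gives 17, which matches the `pods: 17` Allocatable value shown later.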

Checking node instance information (t3.medium)

# Show t3 instance types (filtered)

$ aws ec2 describe-instance-types --filters Name=instance-type,Values=t3.* \

--query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" \

--output table

--------------------------------------

| DescribeInstanceTypes |

+----------+----------+--------------+

| IPv4addr | MaxENI | Type |

+----------+----------+--------------+

| 15 | 4 | t3.2xlarge |

| 6 | 3 | t3.medium |

| 12 | 3 | t3.large |

| 15 | 4 | t3.xlarge |

| 2 | 2 | t3.micro |

| 2 | 2 | t3.nano |

| 4 | 3 | t3.small |

+----------+----------+--------------+

# Show c5 instance types (filtered)

$ aws ec2 describe-instance-types --filters Name=instance-type,Values=c5*.* \

--query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" \

--output table

# Example calculation: aws-node and kube-proxy use host networking and need no pod IP, hence the +2

((MaxENI * (IPv4addr-1)) + 2)

For t3.medium: ((3 * (6 - 1)) + 2) = 17 >> excluding aws-node and kube-proxy, 15 pods can get VPC IPs

# Check worker node details: Allocatable shows pods: 17

$ kubectl describe node | grep Allocatable: -A7

Allocatable:

attachable-volumes-aws-ebs: 25

cpu: 1930m

ephemeral-storage: 27905944324

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3388364Ki

pods: 17

Creating the maximum number of pods and checking the result

# Worker node EC2 - monitoring

$ while true; do ip -br -c addr show && echo "--------------" ; date "+%Y-%m-%d %H:%M:%S" ; sleep 1; done

# Operator EC2 - terminal 1

$ watch -d 'kubectl get pods -o wide'

# Operator EC2 - terminal 2

# Create the Deployment

$ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/nginx-dp.yaml

$ kubectl apply -f nginx-dp.yaml

# Check the pods

$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-6fb79bc456-7x4nl 1/1 Running 0 22s 192.168.3.203 ip-192-168-3-94.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-6fb79bc456-l4nrr 1/1 Running 0 22s 192.168.2.235 ip-192-168-2-173.ap-northeast-2.compute.internal <none> <none>

$ kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP

NAME IP

nginx-deployment-6fb79bc456-7x4nl 192.168.3.203

nginx-deployment-6fb79bc456-l4nrr 192.168.2.235

# Scale-up test >> confirm the pods are created and count the eth/eni interfaces on the worker nodes

$ kubectl scale deployment nginx-deployment --replicas=8

# Scale-up test >> confirm the pods are created and count the eth/eni interfaces on the worker nodes

$ kubectl scale deployment nginx-deployment --replicas=15

# Scale-up test >> check the pods and the eth/eni counts >> what happened?

$ kubectl scale deployment nginx-deployment --replicas=30

# Scale-up test >> check the pods and the eth/eni counts >> what happened?

$ kubectl scale deployment nginx-deployment --replicas=50

# Pod creation fails!

$ kubectl get pods | grep Pending

nginx-deployment-6fb79bc456-2gwnp 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-7btkh 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-gjpxx 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-h27dj 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-mvmsh 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-nvl2s 0/1 Pending 0 8s

nginx-deployment-6fb79bc456-zv2dx 0/1 Pending 0 8s

$ kubectl describe pod <Pending pod> | grep Events: -A5

$ kubectl describe pod nginx-deployment-6fb79bc456-zv2dx | grep Events: -A5

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 51s default-scheduler 0/3 nodes are available: 3 Too many pods. preemption: 0/3 nodes are available: 3 No preemption victims found for incoming pod.
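The seven Pending pods line up with the per-node limit. This worked sketch rests on three assumptions:

- three t3.medium nodes (17 pods each);
- the 8 kube-system pods listed earlier (3 aws-node, 3 kube-proxy, 2 coredns);
- the netshoot-pod Deployment had already been removed before scaling. That deletion is not shown in this post.

```shell
# Assumed inputs for this lab (see lead-in)
nodes=3
max_per_node=17      # t3.medium: 3 * (6 - 1) + 2
system_pods=8        # aws-node x3, kube-proxy x3, coredns x2
replicas=50

capacity=$((nodes * max_per_node))
room=$((capacity - system_pods))
pending=$((replicas > room ? replicas - room : 0))

echo "cluster pod capacity: $capacity"
echo "room for nginx pods : $room"
echo "expected Pending    : $pending"
```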

# Delete the Deployment

kubectl delete deploy nginx-deployment

- Solutions: Prefix Delegation, WARM & MIN IP/Prefix Targets, Custom Network

[Challenge 1] Increase the EKS max pod count with Prefix Delegation + WARM & MIN IP/Prefix Targets: configure it on EKS yourself and create 150 pods (link: Workshop)

[Challenge 2] Increase the EKS max pod count with Custom Network: configure it on EKS yourself and create 150 pods (link: Workshop)
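Prefix delegation raises the ceiling because each secondary-IP slot on an ENI holds a /28 prefix (16 addresses) instead of a single IP. This is a rough sketch of the resulting math, assuming the same ENI limits as above. The default cap of 110 reflects AWS's recommended maximum for instance types with fewer than 30 vCPUs; treat it as an assumption and check the current EKS documentation. `max_pods_prefix` is a hypothetical helper.

```shell
# Hypothetical helper: max pods with VPC CNI prefix delegation.
# $1 = max ENIs, $2 = IPv4 slots per ENI, $3 = optional cap (default 110, assumed).
# Each non-primary slot holds a /28 prefix = 16 pod IPs; +2 for host-network pods.
max_pods_prefix() {
  raw=$(( $1 * ($2 - 1) * 16 + 2 ))
  cap=${3:-110}
  if [ "$raw" -gt "$cap" ]; then echo "$cap"; else echo "$raw"; fi
}

echo "t3.medium before cap: $(max_pods_prefix 3 6 100000)"   # 242
echo "t3.medium with cap  : $(max_pods_prefix 3 6)"          # 110
```

Even with the cap, three t3.medium nodes would allow far more than the 51 pods seen above.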

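6. Service & AWS Load Balancer Controller

The controller creates TargetGroupBinding objects, a custom resource type defined by a CRD.

- What is a CRD? Kubernetes lets you define your own object types and provides an interface for extending the API without modifying Kubernetes source code. [Reference: custom resource]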

# Example custom resource (CR)

# Submit the YAML below to the Kubernetes API server to create the object

---

apiVersion: "extension.example.com/v1"

kind: Hello

metadata:

  name: hello-sample

size: 3

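The controller can create load balancers like this thanks to the IRSA (IAM Roles for Service Accounts) mapping.

Because of the AWS VPC CNI, an NLB can send traffic directly to pod IPs (IP target type).

Deploying the AWS Load Balancer Controller with IRSA

# Check the OIDC issuer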

$ aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text

https://oidc.eks.ap-northeast-2.amazonaws.com/id/43383A69A54F954C2526319D6894E277

$ aws iam list-open-id-connect-providers | jq
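IRSA only works if an IAM OIDC provider exists for the cluster's issuer. This small sketch checks that, assuming the issuer URL printed above. The provider ARN returned by `aws iam list-open-id-connect-providers` should end with the same ID. `oidc_id` is a hypothetical helper.

```shell
# Hypothetical helper: the OIDC provider ID is the last path segment of the issuer URL.
oidc_id() {
  echo "${1##*/}"
}

ISSUER=https://oidc.eks.ap-northeast-2.amazonaws.com/id/43383A69A54F954C2526319D6894E277
echo "OIDC ID: $(oidc_id "$ISSUER")"

# On the operator EC2 (requires AWS credentials), a match means the provider exists:
#   aws iam list-open-id-connect-providers | grep "$(oidc_id "$ISSUER")"
```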

# Create the IAM policy (AWSLoadBalancerControllerIAMPolicy)

$ curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.7/docs/install/iam_policy.json

$ aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

# Check the ARN of the created IAM policy

$ aws iam list-policies --scope Local

$ aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy

$ aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --query 'Policy.Arn'

# Create the ServiceAccount for the AWS Load Balancer Controller >> the matching IAM role is created automatically through CloudFormation!

# Creates the IAM role, creates a Kubernetes service account named aws-load-balancer-controller in the kube-system namespace, and annotates the service account with the IAM role

$ eksctl create iamserviceaccount --cluster=$CLUSTER_NAME --namespace=kube-system --name=aws-load-balancer-controller \

--attach-policy-arn=arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --override-existing-serviceaccounts --approve

2023-05-06 16:22:24 [ℹ] 1 iamserviceaccount (kube-system/aws-load-balancer-controller) was included (based on the include/exclude rules)

2023-05-06 16:22:24 [!] metadata of serviceaccounts that exist in Kubernetes will be updated, as --override-existing-serviceaccounts was set

2023-05-06 16:22:24 [ℹ] 1 task: {
    2 sequential sub-tasks: {
        create IAM role for serviceaccount "kube-system/aws-load-balancer-controller",
        create serviceaccount "kube-system/aws-load-balancer-controller",
    } }
2023-05-06 16:22:24 [ℹ] building iamserviceaccount stack "eksctl-eks-hayley-addon-iamserviceaccount-kube-system-aws-load-balancer-controller"

2023-05-06 16:22:25 [ℹ] deploying stack "eksctl-eks-hayley-addon-iamserviceaccount-kube-system-aws-load-balancer-controller"

2023-05-06 16:22:25 [ℹ] waiting for CloudFormation stack "eksctl-eks-hayley-addon-iamserviceaccount-kube-system-aws-load-balancer-controller"

2023-05-06 16:22:55 [ℹ] waiting for CloudFormation stack "eksctl-eks-hayley-addon-iamserviceaccount-kube-system-aws-load-balancer-controller"

2023-05-06 16:22:55 [ℹ] created serviceaccount "kube-system/aws-load-balancer-controller"

## Check the service account

$ kubectl get serviceaccounts -n kube-system aws-load-balancer-controller -o yaml | yh

apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::90XXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ku-Role1-4VRJ2BM3XXJV
  creationTimestamp: "2023-05-06T07:22:55Z"
  labels:
    app.kubernetes.io/managed-by: eksctl
  name: aws-load-balancer-controller
  namespace: kube-system
  resourceVersion: "22700"
  uid: 67ac4a9b-c606-4f45-a1f8-888ad3f2eabd

# Install the Helm chart

$ helm repo add eks https://aws.github.io/eks-charts

$ helm repo update

$ helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

## Verify the installation

$ kubectl get crd

NAME CREATED AT

eniconfigs.crd.k8s.amazonaws.com 2023-05-06T05:05:53Z

ingressclassparams.elbv2.k8s.aws 2023-05-06T07:23:43Z

securitygrouppolicies.vpcresources.k8s.aws 2023-05-06T05:05:55Z

targetgroupbindings.elbv2.k8s.aws 2023-05-06T07:23:43Z

$ kubectl get deployment -n kube-system aws-load-balancer-controller

NAME READY UP-TO-DATE AVAILABLE AGE

aws-load-balancer-controller 2/2 2 2 19s

$ kubectl describe deploy -n kube-system aws-load-balancer-controller

$ kubectl describe deploy -n kube-system aws-load-balancer-controller | grep 'Service Account'

Service Account: aws-load-balancer-controller

# Check the ClusterRoleBinding and ClusterRole

$ kubectl describe clusterrolebindings.rbac.authorization.k8s.io aws-load-balancer-controller-rolebinding

Name: aws-load-balancer-controller-rolebinding

Labels: app.kubernetes.io/instance=aws-load-balancer-controller

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=aws-load-balancer-controller

app.kubernetes.io/version=v2.5.1

helm.sh/chart=aws-load-balancer-controller-1.5.2

Annotations: meta.helm.sh/release-name: aws-load-balancer-controller

meta.helm.sh/release-namespace: kube-system

Role:

Kind: ClusterRole

Name: aws-load-balancer-controller-role

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount aws-load-balancer-controller kube-system

$ kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role

...

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

...

ingresses [] [] [get list patch update watch]

services [] [] [get list patch update watch]

...

pods [] [] [get list watch]

...

pods/status [] [] [update patch]

services/status [] [] [update patch]

targetgroupbindings/status [] [] [update patch]

ingresses.elbv2.k8s.aws/status [] [] [update patch]

...

Check the trust relationship of the created IAM Role
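The trust relationship can also be read from the CLI once the role name is known. The service account only stores the role ARN (the `eks.amazonaws.com/role-arn` annotation), so the sketch below extracts the role name from it. It simply takes everything after the last `/`, which also drops an optional IAM role path.

```shell
# Sketch: extract the IAM role name from a role ARN such as
# arn:aws:iam::<account>:role/<name>  (or role/<path>/<name>).
role_name_from_arn() {
  case $1 in
    arn:*:iam::*:role/*) printf '%s\n' "${1##*/}" ;;
    *) echo "not an IAM role ARN: $1" >&2; return 1 ;;
  esac
}
```

Usage against the cluster: `ROLE_ARN=$(kubectl get sa -n kube-system aws-load-balancer-controller -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}')`, then `aws iam get-role --role-name "$(role_name_from_arn "$ROLE_ARN")" --query 'Role.AssumeRolePolicyDocument'`. For IRSA, the trust policy is expected to allow `sts:AssumeRoleWithWebIdentity` from the cluster's OIDC provider, typically restricted to `system:serviceaccount:kube-system:aws-load-balancer-controller`.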

Service/Pod deployment test with NLB

# Monitoring

$ watch -d kubectl get pod,svc,ep

# On the operator EC2: create the Deployment & Service

$ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/echo-service-nlb.yaml

$ cat echo-service-nlb.yaml | yh

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-echo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: deploy-websrv
  template:
    metadata:
      labels:
        app: deploy-websrv
    spec:
      terminationGracePeriodSeconds: 0
      containers:
      - name: akos-websrv
        image: k8s.gcr.io/echoserver:1.5
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-ip-type
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "8080"
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
spec:
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: deploy-websrv

$ kubectl apply -f echo-service-nlb.yaml

# Verify

$ kubectl get deploy,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-echo 2/2 2 2 16s

NAME READY STATUS RESTARTS AGE

pod/deploy-echo-5c4856dfd6-lwcbn 1/1 Running 0 16s

pod/deploy-echo-5c4856dfd6-qkv45 1/1 Running 0 16s

$ kubectl get svc,ep,ingressclassparams,targetgroupbindings

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 146m

service/svc-nlb-ip-type LoadBalancer 10.100.217.66 k8s-default-svcnlbip-5a2706bc39-26b84645c05d8e4b.elb.ap-northeast-2.amazonaws.com 80:30155/TCP 38s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.2.56:443,192.168.3.99:443 146m

endpoints/svc-nlb-ip-type 192.168.1.248:8080,192.168.2.140:8080 38s

NAME GROUP-NAME SCHEME IP-ADDRESS-TYPE AGE

ingressclassparams.elbv2.k8s.aws/alb 8m9s

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

targetgroupbinding.elbv2.k8s.aws/k8s-default-svcnlbip-f1a8f7cbd7 svc-nlb-ip-type 80 ip 35s

$ kubectl get targetgroupbindings -o json | jq

# Check the AWS ELB (NLB) information

$ aws elbv2 describe-load-balancers | jq

$ aws elbv2 describe-load-balancers --query 'LoadBalancers[*].State.Code' --output text

# Check the web access URL

$ kubectl get svc svc-nlb-ip-type -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Pod Web URL = http://"$1 }'

Pod Web URL = http://k8s-default-svcnlbip-5a2706bc39-26b84645c05d8e4b.elb.ap-northeast-2.amazonaws.com

# Monitor the pod logs

$ kubectl logs -l app=deploy-websrv -f

# Check how requests are distributed

$ NLB=$(kubectl get svc svc-nlb-ip-type -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

$ curl -s $NLB

$ for i in {1..100}; do curl -s $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

59 Hostname: deploy-echo-5c4856dfd6-qkv45

41 Hostname: deploy-echo-5c4856dfd6-lwcbn
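The loop above counts responses per pod with `sort | uniq -c`. The sketch below also prints each pod's share, which makes runs easier to compare (59/41 here). Note that the NLB balances per connection, and each `curl` opens a new one, so the split is not expected to be exactly even. The function reads echoserver responses on stdin and uses only their `Hostname:` lines.

```shell
# Sketch: summarize echoserver responses as "<count> <percent>% <pod>",
# most-hit pod first.
summarize_hosts() {
  grep 'Hostname:' |
    awk '{ n[$2]++; total++ }
         END { for (h in n) printf "%d %.0f%% %s\n", n[h], 100 * n[h] / total, h }' |
    sort -nr
}
```

For example: `for i in {1..100}; do curl -s --connect-timeout 1 $NLB; done | summarize_hosts`.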

# Continuous requests: useful when examining the detailed behavior below (packet dumps, etc.)

$ while true; do curl -s --connect-timeout 1 $NLB | grep -E 'Hostname|client_address'; echo "----------" ; date "+%Y-%m-%d %H:%M:%S" ; sleep 1; done

2023-05-06 16:35:14

Hostname: deploy-echo-5c4856dfd6-lwcbn

client_address=192.168.2.17

----------

2023-05-06 16:35:15

Hostname: deploy-echo-5c4856dfd6-lwcbn

client_address=192.168.2.17

----------

2023-05-06 16:35:16

Hostname: deploy-echo-5c4856dfd6-lwcbn

client_address=192.168.2.17

----------

2023-05-06 16:35:17

Hostname: deploy-echo-5c4856dfd6-lwcbn

client_address=192.168.2.17

----------

2023-05-06 16:35:18

Hostname: deploy-echo-5c4856dfd6-qkv45

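client_address=192.168.2.17

- Check the AWS NLB target group: look at the registered IPs

- What happens when the pod count goes 2 → 1 → 3: auto discovery. How is this possible? The AWS Load Balancer Controller watches the pods behind the Service through its Kubernetes RBAC permissions (see the ClusterRole below) and registers or deregisters their IPs in the NLB target group through the AWS API, using the IAM permissions it gets via IRSA.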

# On the operator EC2: scale down to 1 pod

$ kubectl scale deployment deploy-echo --replicas=1

# Verify

$ kubectl get deploy,pod,svc,ep

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-echo 1/1 1 1 4m34s

NAME READY STATUS RESTARTS AGE

pod/deploy-echo-5c4856dfd6-lwcbn 1/1 Running 0 4m34s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 150m

service/svc-nlb-ip-type LoadBalancer 10.100.217.66 k8s-default-svcnlbip-5a2706bc39-26b84645c05d8e4b.elb.ap-northeast-2.amazonaws.com 80:30155/TCP 4m34s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.2.56:443,192.168.3.99:443 150m

endpoints/svc-nlb-ip-type 192.168.2.140:8080 4m34s

$ curl -s $NLB

$ for i in {1..100}; do curl -s --connect-timeout 1 $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

# On the operator EC2: scale up to 3 pods

$ kubectl scale deployment deploy-echo --replicas=3

# Verify

$ kubectl get deploy,pod,svc,ep

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-echo 2/3 3 2 5m40s

NAME READY STATUS RESTARTS AGE

pod/deploy-echo-5c4856dfd6-9rfr8 0/1 ContainerCreating 0 4s

pod/deploy-echo-5c4856dfd6-lwcbn 1/1 Running 0 5m40s

pod/deploy-echo-5c4856dfd6-pbtpz 1/1 Running 0 4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 151m

service/svc-nlb-ip-type LoadBalancer 10.100.217.66 k8s-default-svcnlbip-5a2706bc39-26b84645c05d8e4b.elb.ap-northeast-2.amazonaws.com 80:30155/TCP 5m40s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.2.56:443,192.168.3.99:443 151m

endpoints/svc-nlb-ip-type 192.168.1.143:8080,192.168.2.140:8080 5m40s

$ curl -s $NLB

$ for i in {1..100}; do curl -s --connect-timeout 1 $NLB | grep Hostname ; done | sort | uniq -c | sort -nr
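To see auto discovery at work, you can compare the Service's endpoint IPs with the IPs registered in the NLB target group after each scaling step. The sketch below takes both lists as plain text and prints only the differences. It assumes a single TargetGroupBinding in the namespace, which is the case at this point of the lab.

```shell
# Sketch: compare two whitespace-separated IP lists (spaces, tabs or newlines)
# and print one line per IP that appears in only one of them.
# Prints nothing when the endpoint set and the target set are equal.
compare_targets() {
  EP_LIST=$1 TG_LIST=$2 awk 'BEGIN {
    n = split(ENVIRON["EP_LIST"], ep_ips)   # default FS splits on blanks/newlines
    for (i = 1; i <= n; i++) ep[ep_ips[i]] = 1
    m = split(ENVIRON["TG_LIST"], tg_ips)
    for (i = 1; i <= m; i++) tg[tg_ips[i]] = 1
    for (ip in ep) if (!(ip in tg)) print "endpoint-only " ip
    for (ip in tg) if (!(ip in ep)) print "target-only " ip
  }' | sort
}
```

Inputs from the cluster: `EP=$(kubectl get ep svc-nlb-ip-type -o jsonpath='{.subsets[*].addresses[*].ip}')`, `TG_ARN=$(kubectl get targetgroupbindings -o jsonpath='{.items[0].spec.targetGroupARN}')`, `TG=$(aws elbv2 describe-target-health --target-group-arn "$TG_ARN" --query 'TargetHealthDescriptions[*].Target.Id' --output text)`, then `compare_targets "$EP" "$TG"`. Right after scaling, a removed pod may still be listed while its target is draining, so a short-lived `target-only` line is expected.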

# [AWS LB Ctrl] Check the service account

$ kubectl describe deploy -n kube-system aws-load-balancer-controller | grep 'Service Account'

Service Account: aws-load-balancer-controller

# [AWS LB Ctrl] Check the ClusterRoleBinding

$ kubectl describe clusterrolebindings.rbac.authorization.k8s.io aws-load-balancer-controller-rolebinding

Name: aws-load-balancer-controller-rolebinding

Labels: app.kubernetes.io/instance=aws-load-balancer-controller

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=aws-load-balancer-controller

app.kubernetes.io/version=v2.5.1

helm.sh/chart=aws-load-balancer-controller-1.5.2

Annotations: meta.helm.sh/release-name: aws-load-balancer-controller

meta.helm.sh/release-namespace: kube-system

Role:

Kind: ClusterRole

Name: aws-load-balancer-controller-role

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount aws-load-balancer-controller kube-system

# [AWS LB Ctrl] Check the ClusterRole

$ kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role

NLB IP Target & Proxy Protocol v2: traffic goes from the NLB straight into the pod, and the client IP becomes visible to the backend
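In the earlier NLB test the pod saw `client_address=192.168.2.17`, which appears to be the NLB's own private address rather than the real client. With the `aws-load-balancer-proxy-protocol: "*"` annotation, the NLB instead prepends a PROXY protocol v2 header to each TCP connection, and the original client address travels in that header. The backend therefore has to parse it; for Apache that is presumably done through mod_remoteip (`remoteip_module` appears in the module list below). The sketch decodes the source address following the layout in the HAProxy PROXY protocol specification. It assumes the first bytes of a connection have been saved to a file (how they are captured is left open), handles only TCP over IPv4, and ignores any TLVs AWS may append after the address block.

```shell
# Sketch: decode the client address carried in a PROXY protocol v2 header.
# Assumption: $1 is a file holding the first bytes the backend received.
pp2_source() {
  local f=$1 sig vc ip port
  # Bytes 0-11: fixed v2 signature "\r\n\r\n\0\r\nQUIT\n"
  sig=$(head -c 12 "$f" | od -An -tx1 | tr -d ' \n')
  if [ "$sig" != "0d0a0d0a000d0a515549540a" ]; then
    echo "no PROXY protocol v2 signature" >&2
    return 1
  fi
  # Byte 12: version 2 + command PROXY (0x21); byte 13: TCP over IPv4 (0x11)
  vc=$(od -An -tx1 -j12 -N2 "$f" | tr -d ' \n')
  if [ "$vc" != "2111" ]; then
    echo "unsupported version/command/family: $vc" >&2
    return 1
  fi
  # Bytes 14-15: length of the remaining header (addresses + TLVs), unused here.
  # Bytes 16-19: source IPv4 address; bytes 24-25: source port (big-endian).
  ip=$(od -An -tu1 -j16 -N4 "$f" | awk '{ printf "%s.%s.%s.%s", $1, $2, $3, $4 }')
  port=$(od -An -tu1 -j24 -N2 "$f" | awk '{ print $1 * 256 + $2 }')
  printf '%s:%s\n' "$ip" "$port"
}
```

A backend that does not understand the header would see these binary bytes before the HTTP request line, which is why proxy protocol must be enabled on both sides at once.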

# Create

$ cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gasida-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gasida-web
  template:
    metadata:
      labels:
        app: gasida-web
    spec:
      terminationGracePeriodSeconds: 0
      containers:
      - name: gasida-web
        image: gasida/httpd:pp
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-ip-type-pp
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  type: LoadBalancer
  loadBalancerClass: service.k8s.aws/nlb
  selector:
    app: gasida-web
EOF

$ kubectl get svc,ep

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 156m

service/svc-nlb-ip-type LoadBalancer 10.100.217.66 k8s-default-svcnlbip-5a2706bc39-26b84645c05d8e4b.elb.ap-northeast-2.amazonaws.com 80:30155/TCP 11m

service/svc-nlb-ip-type-pp LoadBalancer 10.100.116.100 k8s-default-svcnlbip-0808796075-1eef7615b36dbd33.elb.ap-northeast-2.amazonaws.com 80:31065/TCP 5s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.2.56:443,192.168.3.99:443 156m

endpoints/svc-nlb-ip-type 192.168.1.143:8080,192.168.2.140:8080,192.168.3.46:8080 11m

endpoints/svc-nlb-ip-type-pp <none> 5s

$ kubectl describe svc svc-nlb-ip-type-pp

Name: svc-nlb-ip-type-pp

Namespace: default

Labels: <none>

Annotations: service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: true

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip

service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: *

service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing

Selector: app=gasida-web

Type: LoadBalancer

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.100.116.100

IPs: 10.100.116.100

LoadBalancer Ingress: k8s-default-svcnlbip-0808796075-1eef7615b36dbd33.elb.ap-northeast-2.amazonaws.com

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 31065/TCP

Endpoints: <none>

Session Affinity: None

External Traffic Policy: Cluster

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfullyReconciled 3s service Successfully reconciled

$ kubectl describe svc svc-nlb-ip-type-pp | grep Annotations: -A5

Annotations: service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: true

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip

service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: *

service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing

Selector: app=gasida-web

Type: LoadBalancer

# Check that proxy protocol is enabled in Apache

$ kubectl exec deploy/gasida-web -- apachectl -t -D DUMP_MODULES

Loaded Modules:

core_module (static)

so_module (static)

http_module (static)

mpm_event_module (shared)

authn_file_module (shared)

authn_core_module (shared)

authz_host_module (shared)

authz_groupfile_module (shared)

authz_user_module (shared)

authz_core_module (shared)

access_compat_module (shared)

auth_basic_module (shared)

reqtimeout_module (shared)

filter_module (shared)

mime_module (shared)

log_config_module (shared)

env_module (shared)

headers_module (shared)

setenvif_module (shared)

version_module (shared)

remoteip_module (shared)

unixd_module (shared)

status_module (shared)

autoindex_module (shared)

dir_module (shared)

alias_module (shared)

$ kubectl exec deploy/gasida-web -- cat /usr/local/apache2/conf/httpd.conf

7. Ingress

- Ingress: exposes Services inside the cluster (ClusterIP, NodePort, LoadBalancer) to the outside over HTTP/HTTPS, acting as a web proxy

Service/Pod deployment test with Ingress (ALB)

# Deploy the game pods, Service, and Ingress

$ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ingress1.yaml

$ cat ingress1.yaml | yh

apiVersion: v1
kind: Namespace
metadata:
  name: game-2048
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: game-2048
  name: deployment-2048
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: app-2048
  replicas: 2
  template:
    metadata:
      labels:
        app.kubernetes.io/name: app-2048
    spec:
      containers:
      - image: public.ecr.aws/l6m2t8p7/docker-2048:latest
        imagePullPolicy: Always
        name: app-2048
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  namespace: game-2048
  name: service-2048
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  type: NodePort
  selector:
    app.kubernetes.io/name: app-2048
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: game-2048
  name: ingress-2048
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: service-2048
              port:
                number: 80

$ kubectl apply -f ingress1.yaml

# Monitoring

$ watch -d kubectl get pod,ingress,svc,ep -n game-2048

# Verify the created resources

$ kubectl get-all -n game-2048

NAME NAMESPACE AGE

configmap/kube-root-ca.crt game-2048 71s

endpoints/service-2048 game-2048 71s

pod/deployment-2048-6bc9fd6bf5-kxbn8 game-2048 71s

pod/deployment-2048-6bc9fd6bf5-q4hfg game-2048 71s

serviceaccount/default game-2048 71s

service/service-2048 game-2048 71s

deployment.apps/deployment-2048 game-2048 71s

replicaset.apps/deployment-2048-6bc9fd6bf5 game-2048 71s

endpointslice.discovery.k8s.io/service-2048-r9hz4 game-2048 71s

targetgroupbinding.elbv2.k8s.aws/k8s-game2048-service2-2f66a3a673 game-2048 67s

ingress.networking.k8s.io/ingress-2048 game-2048 71s

$ kubectl get ingress,svc,ep,pod -n game-2048

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/ingress-2048 alb * k8s-game2048-ingress2-5ebbd98053-468916540.ap-northeast-2.elb.amazonaws.com 80 91s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/service-2048 NodePort 10.100.220.43 <none> 80:31342/TCP 91s

NAME ENDPOINTS AGE

endpoints/service-2048 192.168.2.74:80,192.168.3.203:80 91s

NAME READY STATUS RESTARTS AGE

pod/deployment-2048-6bc9fd6bf5-kxbn8 1/1 Running 0 91s

pod/deployment-2048-6bc9fd6bf5-q4hfg 1/1 Running 0 91s

$ kubectl get targetgroupbindings -n game-2048

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-game2048-service2-e48050abac service-2048 80 ip 87s

# Check the Ingress

$ kubectl describe ingress -n game-2048 ingress-2048

Name: ingress-2048

Labels: <none>

Namespace: game-2048

Address: k8s-game2048-ingress2-5ebbd98053-468916540.ap-northeast-2.elb.amazonaws.com

Ingress Class: alb

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ service-2048:80 (192.168.2.74:80,192.168.3.203:80)

Annotations: alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfullyReconciled 103s ingress Successfully reconciled
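The rule `/ service-2048:80` uses `pathType: Prefix`. Under the Kubernetes Ingress rules, Prefix matching compares `/`-separated path elements, so `path: /` catches every request, and a rule like `/foo` would match `/foo/bar` but not `/foobar`. The sketch below models those semantics only; it is not the AWS Load Balancer Controller's code, which translates the rule into ALB listener rules on its own.

```shell
# Sketch of Ingress "pathType: Prefix" matching: the rule path must be a
# prefix of the request path on "/" element boundaries.
# A trailing "/" on the rule path is ignored.
prefix_match() {
  local rule req
  rule=${1%/}
  req=$2
  # "/" (empty after trimming) matches every request path
  [ -z "$rule" ] && return 0
  case $req in
    "$rule" | "$rule"/*) return 0 ;;
  esac
  return 1
}
```

For example, `prefix_match / /index.html` succeeds, which is why every path of the game URL reaches `service-2048`.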

# Access the game: open the ALB address in a web browser

$ kubectl get ingress -n game-2048 ingress-2048 -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Game URL = http://"$1 }'

Game URL = http://k8s-game2048-ingress2-5ebbd98053-468916540.ap-northeast-2.elb.amazonaws.com

# Check the pod IPs

$ kubectl get pod -n game-2048 -owide


NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-6bc9fd6bf5-kxbn8 1/1 Running 0 2m17s 192.168.2.74 ip-192-168-2-173.ap-northeast-2.compute.internal <none> <none>

deployment-2048-6bc9fd6bf5-q4hfg 1/1 Running 0 2m17s 192.168.3.203 ip-192-168-3-94.ap-northeast-2.compute.internal <none> <none>

- Check the targets registered in the ALB target group: the ALB forwards traffic directly to the pod IPs

- Scale up to 3 pods

# Terminal 1

$ watch kubectl get pod -n game-2048

# Terminal 2: scale up to 3 pods

$ kubectl scale deployment -n game-2048 deployment-2048 --replicas 3

$ kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-6bc9fd6bf5-kxbn8 1/1 Running 0 2m51s 192.168.2.74 ip-192-168-2-173.ap-northeast-2.compute.internal <none> <none>

deployment-2048-6bc9fd6bf5-q4hfg 1/1 Running 0 2m51s 192.168.3.203 ip-192-168-3-94.ap-northeast-2.compute.internal <none> <none>

deployment-2048-6bc9fd6bf5-qsqnk 0/1 ContainerCreating 0 4s <none> ip-192-168-1-31.ap-northeast-2.compute.internal <none> <none>

8. ExternalDNS: reference

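- If you set a domain when creating a K8s Service/Ingress, ExternalDNS automatically creates and deletes the matching DNS record (an A record, plus a TXT record that marks ExternalDNS as the record's owner) in AWS (Route 53), Azure (DNS), or GCP (Cloud DNS).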
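For a Service, the domain is usually set with the `external-dns.alpha.kubernetes.io/hostname` annotation, whose value can hold several comma-separated names. For an Ingress, ExternalDNS can also take the names from `spec.rules[].host`. The sketch below splits such an annotation value into the list of names ExternalDNS would be asked to manage. It is an illustration of the annotation format, not ExternalDNS's own parser, and `example.com` names are placeholders.

```shell
# Sketch: split an external-dns.alpha.kubernetes.io/hostname value into one
# hostname per line, trimming surrounding spaces and dropping empty entries.
hostnames_from_annotation() {
  printf '%s\n' "$1" |
    tr ',' '\n' |
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//' |
    grep -v '^$'
}
```

Setting the annotation itself could look like `kubectl annotate service svc-nlb-ip-type "external-dns.alpha.kubernetes.io/hostname=nlb.example.com"`, assuming ExternalDNS is deployed with permissions on the hosted zone.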

9. Amazon EKS now supports Amazon Application Recovery Controller

Learn about Amazon Application Recovery Controller’s (ARC) Zonal Shift in Amazon EKS - Link

Resource cleanup

- Delete the Amazon EKS cluster (takes about 10 minutes)

eksctl delete cluster --name $CLUSTER_NAME

- (After confirming that the cluster has been deleted) Delete the AWS CloudFormation stack
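The stack is deleted only after the cluster is gone, presumably because the stack's VPC cannot be removed while cluster resources (ENIs, security groups) still occupy it. The generic polling helper below is one way to wait for that. The attempt count and delay are arbitrary values chosen for illustration.

```shell
# Sketch: run a command until it succeeds or the attempts run out.
# Usage: wait_until <attempts> <delay-seconds> <command> [args...]
wait_until() {
  local attempts delay
  attempts=$1 delay=$2
  shift 2
  while [ "$attempts" -gt 0 ]; do
    "$@" && return 0
    attempts=$((attempts - 1))
    # Sleep only if another attempt is still coming
    [ "$attempts" -gt 0 ] && sleep "$delay"
  done
  return 1
}
```

For example, `wait_until 60 10 sh -c '! aws eks describe-cluster --name "$1" >/dev/null 2>&1' _ "$CLUSTER_NAME"` returns once `describe-cluster` stops finding the cluster. After deleting the stack, `aws cloudformation wait stack-delete-complete --stack-name myeks` blocks until the deletion finishes.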

aws cloudformation delete-stack --stack-name myeks