
Hello. I wrote this post to understand and share what we covered in the CloudNet@ K8S Study. It is based mainly on the EKS docs and workshop.

Lab environment

# Download the YAML file

$ curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick6.yaml

# Deploy the CloudFormation stack

Example) aws cloudformation deploy --template-file eks-oneclick6.yaml --stack-name myeks --parameter-overrides KeyName=kp-hayley3 SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

# After the CloudFormation stack is deployed, print the IP of the working EC2 instance

$ aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the working EC2 instance

$ ssh -i ~/.ssh/kp-hayley3.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

Basic setup

# Apply the default namespace

$ kubectl ns default

# (Optional) Rename the context

# NICK=<your own nickname>

$ NICK=hayley

$ kubectl ctx

iam-root-account@eks-hayley.ap-northeast-2.eksctl.io

$ kubectl config rename-context $(kubectl config current-context) $NICK

# ExternalDNS

# MyDomain=<your own domain>

# echo "export MyDomain=<your own domain>" >> /etc/profile

$ MyDomain=wellbeconnected.com

$ echo "export MyDomain=wellbeconnected.com" >> /etc/profile

$ MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

$ echo $MyDomain, $MyDnzHostedZoneId

wellbeconnected.com, /hostedzone/Z06204681HPKV2R55I4OR

$ curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

$ MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

serviceaccount/external-dns created

clusterrole.rbac.authorization.k8s.io/external-dns created

clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created

deployment.apps/external-dns created

# AWS LB Controller

$ helm repo add eks https://aws.github.io/eks-charts

$ helm repo update

$ helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# Check the node IPs and store each node's private IP in a variable

$ N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

$ N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath={.items[0].status.addresses[0].address})

$ N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

$ echo "export N1=$N1" >> /etc/profile

$ echo "export N2=$N2" >> /etc/profile

$ echo "export N3=$N3" >> /etc/profile

$ echo $N1, $N2, $N3

192.168.1.96, 192.168.2.73, 192.168.3.122
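The three N1/N2/N3 commands above differ only in the zone suffix. A minimal bash sketch that loops over zones a, b and c instead; it reuses the same label selector and jsonpath and assumes, as in this lab, one node per zone named `<region>a/b/c`. `node_ip_in_zone` and `export_node_ips` are hypothetical helper names, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch only: assumes zones are named <region>a, <region>b, <region>c.

# Print the first address of the first node in the given zone,
# using the same selector and jsonpath as the N1/N2/N3 commands.
node_ip_in_zone() {
  kubectl get node --selector="topology.kubernetes.io/zone=$1" \
    -o jsonpath='{.items[0].status.addresses[0].address}'
}

# Emit "export N<i>=<ip>" lines for zones a, b and c of the given region.
export_node_ips() {
  local region="$1" i=1 letter
  for letter in a b c; do
    printf 'export N%d=%s\n' "$i" "$(node_ip_in_zone "${region}${letter}")"
    i=$((i + 1))
  done
}
```

For example, `export_node_ips ap-northeast-2 >> /etc/profile` appends the same three export lines as the commands above.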

# Check the node security group ID, then allow all traffic from 192.168.1.0/24

$ NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)

$ aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.0/24

{

"Return": true,

"SecurityGroupRules": [

{

"SecurityGroupRuleId": "sgr-079740a4d01fb8d55",

"GroupId": "sg-05b26e567964086be",

"GroupOwnerId": "90XXXXXXXX",

"IsEgress": false,

"IpProtocol": "-1",

"FromPort": -1,

"ToPort": -1,

"CidrIpv4": "192.168.1.0/24"

}

]

}

# SSH into the worker nodes

$ for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

Install Prometheus & Grafana (admin / prom-operator)

# Check the certificate ARN in the region you are using

$ CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

$ echo $CERT_ARN

arn:aws:acm:ap-northeast-2:9XXXXXXXX:certificate/3f025fab-XXXXXX

# Add the repo

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the parameter file

$ cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    podMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    retention: 5d
    retentionSize: "10GiB"

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - prometheus.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - grafana.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:
  create: false
kubeControllerManager:
  enabled: false
kubeEtcd:
  enabled: false
kubeScheduler:
  enabled: false
alertmanager:
  enabled: false
EOT

# Deploy

$ kubectl create ns monitoring

$ helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \
  --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
  -f monitor-values.yaml --namespace monitoring

# Deploy metrics-server

$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

$ k get po -n monitoring

NAME READY STATUS RESTARTS AGE

kube-prometheus-stack-grafana-778f55f4cd-mfpgx 3/3 Running 0 9m44s

kube-prometheus-stack-kube-state-metrics-5d6578867c-7wm7d 1/1 Running 0 9m44s

kube-prometheus-stack-operator-74d474b47b-v7wd2 1/1 Running 0 9m44s

kube-prometheus-stack-prometheus-node-exporter-lch9x 1/1 Running 0 9m44s

kube-prometheus-stack-prometheus-node-exporter-lxbnt 1/1 Running 0 9m44s

kube-prometheus-stack-prometheus-node-exporter-ql8lw 1/1 Running 0 9m44s

prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0 9m39s

AWS Controller for Kubernetes (ACK)

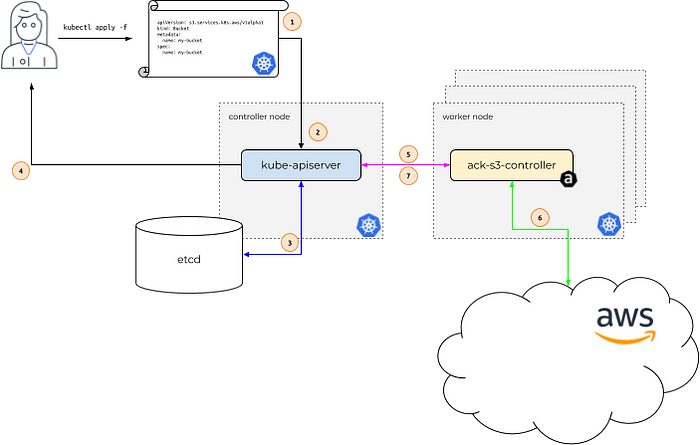

https://aws-controllers-k8s.github.io/community/docs/community/how-it-works/

- You can define and use AWS service resources directly from Kubernetes.

- For example, the Kubernetes API forwards the request to ack-s3-controller, and ack-s3-controller (using IRSA) creates the bucket through the AWS S3 API.

AWS services supported by ACK: link

- Maintenance phases: PREVIEW (testing stage, not recommended for production), GENERAL AVAILABILITY (recommended for production), DEPRECATED, NOT SUPPORTED

- GA services: ApiGatewayV2, CloudTrail, DynamoDB, EC2, ECR, EKS, IAM, KMS, Lambda, MemoryDB, RDS, S3, SageMaker…

- Preview services: ACM, ElastiCache, EventBridge, MQ, Route 53, SNS, SQS…

Permissions: two RBAC systems are involved, the Kubernetes API's and the AWS API's, and each service controller pod needs permissions for its AWS service ← IRSA role for ACK Service Controller

https://aws-controllers-k8s.github.io/community/docs/user-docs/authorization/

S3

Install the ACK S3 Controller with Helm: link, Public-Repo, GitHub

# Set the service name variable

$ export SERVICE=s3

# Download the helm chart

# aws ecr-public get-login-password --region us-east-1 | helm registry login --username AWS --password-stdin public.ecr.aws

$ export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$ helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$ tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz
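The `RELEASE_VERSION` pipeline silently produces an empty string when GitHub answers with an error body instead of a release (for instance when the unauthenticated API rate limit is hit), and `helm pull` then fails with a confusing message. A small sketch that keeps the same grep/cut logic but stops when no `tag_name` is found; `parse_release_version` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: same extraction as the RELEASE_VERSION command above, but fail
# loudly when the input contains no "tag_name" field.
parse_release_version() {
  local version
  # First "tag_name" line -> 4th '"'-delimited field -> drop the leading "v".
  version=$(grep '"tag_name":' | head -n 1 | cut -d'"' -f4 | cut -c 2-)
  if [ -z "$version" ]; then
    echo "parse_release_version: no tag_name found in input" >&2
    return 1
  fi
  printf '%s\n' "$version"
}
```

Usage: `export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | parse_release_version)`.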

# Check the helm chart

$ tree ~/$SERVICE-chart

# Install the ACK S3 Controller

$ export ACK_SYSTEM_NAMESPACE=ack-system

$ export AWS_REGION=ap-northeast-2

$ helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

$ helm list --namespace $ACK_SYSTEM_NAMESPACE

$ kubectl -n ack-system get pods

$ kubectl get crd | grep $SERVICE

buckets.s3.services.k8s.aws 2023-06-06T13:46:37Z

$ kubectl get all -n ack-system

NAME READY STATUS RESTARTS AGE

pod/ack-s3-controller-s3-chart-7c55c6657d-f228s 1/1 Running 0 33s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ack-s3-controller-s3-chart 1/1 1 1 33s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ack-s3-controller-s3-chart-7c55c6657d 1 1 1 33s

$ kubectl get-all -n ack-system

NAME NAMESPACE AGE

configmap/kube-root-ca.crt ack-system 61s

pod/ack-s3-controller-s3-chart-7c55c6657d-f228s ack-system 61s

secret/sh.helm.release.v1.ack-s3-controller.v1 ack-system 61s

serviceaccount/ack-s3-controller ack-system 61s

serviceaccount/default ack-system 61s

deployment.apps/ack-s3-controller-s3-chart ack-system 61s

replicaset.apps/ack-s3-controller-s3-chart-7c55c6657d ack-system 61s

role.rbac.authorization.k8s.io/ack-s3-reader ack-system 61s

role.rbac.authorization.k8s.io/ack-s3-writer ack-system 61s

$ kubectl describe sa -n ack-system ack-s3-controller

IRSA setup: recommended policy AmazonS3FullAccess (link)

# Create an iamserviceaccount - AWS IAM role bound to a Kubernetes service account

$ eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace ack-system \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonS3FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

# Verify >> in the web console, check CloudFormation Stack >> IAM Role

$ eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

ack-system ack-s3-controller arn:aws:iam::90XXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ac-Role1-YGXXXXX

kube-system aws-load-balancer-controller arn:aws:iam::90XXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ku-Role1-1XXXXX

# Inspecting the newly created Kubernetes Service Account, we can see the role we want it to assume in our pod.

$ kubectl get sa -n ack-system

NAME SECRETS AGE

ack-s3-controller 0 42m

default 0 42m

$ kubectl describe sa ack-$SERVICE-controller -n ack-system

# Restart ACK service controller deployment using the following commands.

$ kubectl -n ack-system rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

deployment.apps/ack-s3-controller-s3-chart restarted
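After the restart, IRSA should appear in the controller pod as injected environment variables. A minimal sketch that scans `kubectl describe pod` output for `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE`, the two variables the EKS Pod Identity Webhook adds for IRSA; `check_irsa_env` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl describe pod` output on stdin and report whether the
# IRSA-injected env vars are present. Returns non-zero if any is missing.
check_irsa_env() {
  local desc var missing=0
  desc=$(cat)
  for var in AWS_ROLE_ARN AWS_WEB_IDENTITY_TOKEN_FILE; do
    if printf '%s\n' "$desc" | grep -q "${var}:"; then
      echo "ok: ${var}"
    else
      echo "missing: ${var}"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart | check_irsa_env`.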

# Confirm that IRSA added the Env and Volume entries

$ kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart

Create, update, and delete an S3 bucket (link)

# [Terminal 1] Monitoring

$ watch -d aws s3 ls

# Create the manifest file for the S3 bucket

$ export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

$ export BUCKET_NAME=my-ack-s3-bucket-$AWS_ACCOUNT_ID

$ read -r -d '' BUCKET_MANIFEST <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
EOF

$ echo "${BUCKET_MANIFEST}" > bucket.yaml

$ cat bucket.yaml | yh

apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: my-ack-s3-bucket-90XXXXXXXXXX
spec:
  name: my-ack-s3-bucket-90XXXXXXXXXX
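The `read -r -d '' VAR <<EOF` idiom works interactively, but `read` returns a non-zero status when it reaches end of input without finding a NUL delimiter, so a script running under `set -e` stops at that line. A sketch that writes the same Bucket manifest with a plain `cat` heredoc and first checks the name against the basic S3 general purpose bucket naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit). The check is deliberately partial, and `render_bucket_manifest` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: validate a bucket name (basic rules only), then print a Bucket manifest.
render_bucket_manifest() {
  local name="$1"
  # 3-63 chars, lowercase letters/digits/dots/hyphens, alphanumeric at both ends
  if ! [[ "$name" =~ ^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$ ]]; then
    echo "render_bucket_manifest: invalid bucket name: $name" >&2
    return 1
  fi
  cat <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $name
spec:
  name: $name
EOF
}
```

Usage: `render_bucket_manifest "$BUCKET_NAME" > bucket.yaml`.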

# Create the S3 bucket

$ aws s3 ls

2023-03-05 19:05:52 hayley-bucket-test

$ kubectl create -f bucket.yaml

bucket.s3.services.k8s.aws/my-ack-s3-bucket-<my account id> created

# Check the S3 bucket

$ aws s3 ls

2023-03-05 19:05:52 hayley-bucket-test

2023-06-06 23:34:53 my-ack-s3-bucket-903575331688

$ kubectl get buckets

NAME AGE

my-ack-s3-bucket-903575331688 15s

$ kubectl describe bucket/$BUCKET_NAME | head -6

Name: my-ack-s3-bucket-<my account id>

Namespace: default

Labels: <none>

Annotations: <none>

API Version: s3.services.k8s.aws/v1alpha1

Kind: Bucket

$ aws s3 ls | grep $BUCKET_NAME

2023-06-06 23:34:53 my-ack-s3-bucket-<my account id>

# Update the S3 bucket: add tags

$ read -r -d '' BUCKET_MANIFEST <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
  tagging:
    tagSet:
    - key: myTagKey
      value: myTagValue
EOF

$ echo "${BUCKET_MANIFEST}" > bucket.yaml

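# Apply the bucket update: kubectl may warn that the required last-applied-configuration annotation is missing (the bucket was created with kubectl create); it is patched automatically, so the warning can be ignored!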

$ kubectl apply -f bucket.yaml

# Verify the S3 bucket update

$ kubectl describe bucket/$BUCKET_NAME | grep Spec: -A5

Spec:
  Name:  my-ack-s3-bucket-<my account id>
  Tagging:
    Tag Set:
      Key:    myTagKey
      Value:  myTagValue

# Delete the S3 bucket

$ kubectl delete -f bucket.yaml

# verify the bucket no longer exists

$ kubectl get bucket/$BUCKET_NAME

Error from server (NotFound): buckets.s3.services.k8s.aws "my-ack-s3-bucket-903575331688" not found

$ aws s3 ls | grep $BUCKET_NAME

Delete the ACK S3 Controller (link)

# helm uninstall

$ export SERVICE=s3

$ helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller

release "ack-s3-controller" uninstalled

# Delete the CRDs for the ACK S3 Controller

$ kubectl delete -f ~/$SERVICE-chart/crds

# Delete the IRSA

$ eksctl delete iamserviceaccount --cluster $CLUSTER_NAME --name ack-$SERVICE-controller --namespace ack-system

# Delete the namespace >> only after finishing all the ACK labs

$ kubectl delete namespace $ACK_SYSTEM_NAMESPACE

EC2 & VPC

Install the ACK EC2-Controller with Helm: link, Public-Repo, GitHub

# Set the service name variable and download the helm chart

$ export SERVICE=ec2

$ export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$ helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$ tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the helm chart

$ tree ~/$SERVICE-chart

/root/ec2-chart

├── Chart.yaml

├── crds

│ ├── ec2.services.k8s.aws_dhcpoptions.yaml

│ ├── ec2.services.k8s.aws_elasticipaddresses.yaml

│ ├── ec2.services.k8s.aws_instances.yaml

│ ├── ec2.services.k8s.aws_internetgateways.yaml

│ ├── ec2.services.k8s.aws_natgateways.yaml

│ ├── ec2.services.k8s.aws_routetables.yaml

│ ├── ec2.services.k8s.aws_securitygroups.yaml

│ ├── ec2.services.k8s.aws_subnets.yaml

│ ├── ec2.services.k8s.aws_transitgateways.yaml

│ ├── ec2.services.k8s.aws_vpcendpoints.yaml

│ ├── ec2.services.k8s.aws_vpcs.yaml

│ ├── services.k8s.aws_adoptedresources.yaml

│ └── services.k8s.aws_fieldexports.yaml

├── templates

│ ├── cluster-role-binding.yaml

│ ├── cluster-role-controller.yaml

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── metrics-service.yaml

│ ├── NOTES.txt

│ ├── role-reader.yaml

│ ├── role-writer.yaml

│ └── service-account.yaml

├── values.schema.json

└── values.yaml

2 directories, 25 files

# Install the ACK EC2-Controller

$ export ACK_SYSTEM_NAMESPACE=ack-system

$ export AWS_REGION=ap-northeast-2

$ helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

$ helm list --namespace $ACK_SYSTEM_NAMESPACE

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

ack-ec2-controller ack-system 1 2023-06-06 23:43:07.430794345 +0900 KST deployed ec2-chart-1.0.3 1.0.3

$ kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

NAME READY STATUS RESTARTS AGE

ack-ec2-controller-ec2-chart-777567ff4c-pc86z 1/1 Running 0 53s

$ kubectl get crd | grep $SERVICE

dhcpoptions.ec2.services.k8s.aws 2023-06-06T14:43:06Z

elasticipaddresses.ec2.services.k8s.aws 2023-06-06T14:43:06Z

instances.ec2.services.k8s.aws 2023-06-06T14:43:06Z

internetgateways.ec2.services.k8s.aws 2023-06-06T14:43:06Z

natgateways.ec2.services.k8s.aws 2023-06-06T14:43:06Z

routetables.ec2.services.k8s.aws 2023-06-06T14:43:06Z

securitygroups.ec2.services.k8s.aws 2023-06-06T14:43:07Z

subnets.ec2.services.k8s.aws 2023-06-06T14:43:07Z

transitgateways.ec2.services.k8s.aws 2023-06-06T14:43:07Z

vpcendpoints.ec2.services.k8s.aws 2023-06-06T14:43:07Z

vpcs.ec2.services.k8s.aws 2023-06-06T14:43:07Z

IRSA setup: recommended policy AmazonEC2FullAccess (ARN)

# Create an iamserviceaccount - AWS IAM role bound to a Kubernetes service account

$ eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace $ACK_SYSTEM_NAMESPACE \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonEC2FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve
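The IRSA steps are the same for S3, EC2 and RDS apart from the service name and policy. A sketch wrapping them in one function, under two assumptions: each service uses the policy recommended in this post, and the AWS managed policy ARN has the form `arn:aws:iam::aws:policy/<PolicyName>` (the case for these three policies), which replaces the `aws iam list-policies` lookup. `ack_policy_for` and `setup_ack_irsa` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: per-service IRSA setup for the ACK controllers used in this post.

# Map an ACK service name to the managed policy recommended above.
ack_policy_for() {
  case "$1" in
    s3)  echo AmazonS3FullAccess ;;
    ec2) echo AmazonEC2FullAccess ;;
    rds) echo AmazonRDSFullAccess ;;
    *)   echo "ack_policy_for: no policy mapping for service: $1" >&2; return 1 ;;
  esac
}

# Create the IAM role + service account, then restart the controller.
setup_ack_irsa() {
  local service="$1" cluster="$2" namespace="${3:-ack-system}" policy
  policy=$(ack_policy_for "$service") || return 1
  eksctl create iamserviceaccount \
    --name "ack-${service}-controller" \
    --namespace "$namespace" \
    --cluster "$cluster" \
    --attach-policy-arn "arn:aws:iam::aws:policy/${policy}" \
    --override-existing-serviceaccounts --approve || return 1
  # Restart so the new pod picks up the IRSA env vars and token volume.
  kubectl -n "$namespace" rollout restart deploy "ack-${service}-controller-${service}-chart"
}
```

For example, `setup_ack_irsa ec2 "$CLUSTER_NAME" "$ACK_SYSTEM_NAMESPACE"` would run the eksctl command above plus the rollout restart that follows in this section.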

# Verify >> in the web console, check CloudFormation Stack >> IAM Role

# Inspecting the newly created Kubernetes Service Account, we can see the role we want it to assume in our pod.

$ kubectl get sa -n $ACK_SYSTEM_NAMESPACE

NAME SECRETS AGE

ack-ec2-controller 0 4m23s

default 0 60m

$ kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

# Restart ACK service controller deployment using the following commands.

$ kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

deployment.apps/ack-ec2-controller-ec2-chart restarted

# Confirm that IRSA added the Env and Volume entries

$ kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

Create and delete a VPC and Subnet (link)

# [Terminal 1] Monitoring

$ while true; do aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text; echo "-----"; sleep 1; done

# Create the VPC

$ cat <<EOF > vpc.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: VPC
metadata:
  name: vpc-tutorial-test
spec:
  cidrBlocks:
  - 10.0.0.0/16
  enableDNSSupport: true
  enableDNSHostnames: true
EOF

$ kubectl apply -f vpc.yaml

vpc.ec2.services.k8s.aws/vpc-tutorial-test created

# Verify the VPC

$ kubectl get vpcs

NAME ID STATE

vpc-tutorial-test vpc-0964518428ce242ca available

$ kubectl describe vpcs

$ aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text

172.31.0.0/16 vpc-0dc6bc54bbf096999

192.168.0.0/16 vpc-0f7cb497caee52091

192.168.0.0/16 vpc-0603b40ae6693c7cf

10.0.0.0/16 vpc-0964518428ce242ca

# [Terminal 1] Monitoring

$ VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

$ while true; do aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text; echo "-----"; sleep 1 ; done

# Create the subnet

$ VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

$ cat <<EOF > subnet.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: subnet-tutorial-test
spec:
  cidrBlock: 10.0.0.0/20
  vpcID: $VPCID
EOF

$ kubectl apply -f subnet.yaml

# Verify the subnet

$ kubectl get subnets

NAME ID STATE

subnet-tutorial-test subnet-03d0e948254ce28ae available

$ kubectl describe subnets

$ aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text

10.0.0.0/20 subnet-03d0e948254ce28ae
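A subnet's CIDR has to fall inside its VPC's CIDR (here 10.0.0.0/20 inside 10.0.0.0/16), otherwise the underlying CreateSubnet call fails. A small pure-bash sketch for checking that before applying a Subnet manifest; `ip_to_int` and `cidr_contains` are hypothetical helpers and only handle IPv4 in `a.b.c.d/len` form.

```shell
#!/usr/bin/env bash
# Sketch: IPv4 CIDR containment check in pure bash (64-bit arithmetic).

# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  local outer_ip="${1%/*}" outer_len="${1#*/}"
  local inner_ip="${2%/*}" inner_len="${2#*/}"
  # The inner block can't be larger than the outer one.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  local o i
  o=$(ip_to_int "$outer_ip")
  i=$(ip_to_int "$inner_ip")
  # Same network prefix under the outer mask means containment.
  [ $(( o & mask )) -eq $(( i & mask )) ]
}
```

For example, `cidr_contains 10.0.0.0/16 10.0.128.0/20` succeeds, while `cidr_contains 10.0.0.0/16 192.168.1.0/24` fails.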

# Delete the resources

$ kubectl delete -f subnet.yaml && kubectl delete -f vpc.yaml

subnet.ec2.services.k8s.aws "subnet-tutorial-test" deleted

vpc.ec2.services.k8s.aws "vpc-tutorial-test" deleted

Create a VPC Workflow: create a VPC, Subnet, SG, RT, EIP, IGW, NATGW, and Instance (link)

Create the vpc-workflow.yaml file

$ cat <<EOF > vpc-workflow.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: VPC
metadata:
  name: tutorial-vpc
spec:
  cidrBlocks:
  - 10.0.0.0/16
  enableDNSSupport: true
  enableDNSHostnames: true
  tags:
    - key: name
      value: vpc-tutorial
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: InternetGateway
metadata:
  name: tutorial-igw
spec:
  vpcRef:
    from:
      name: tutorial-vpc
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: NATGateway
metadata:
  name: tutorial-natgateway1
spec:
  subnetRef:
    from:
      name: tutorial-public-subnet1
  allocationRef:
    from:
      name: tutorial-eip1
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: ElasticIPAddress
metadata:
  name: tutorial-eip1
spec:
  tags:
    - key: name
      value: eip-tutorial
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: RouteTable
metadata:
  name: tutorial-public-route-table
spec:
  vpcRef:
    from:
      name: tutorial-vpc
  routes:
  - destinationCIDRBlock: 0.0.0.0/0
    gatewayRef:
      from:
        name: tutorial-igw
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: RouteTable
metadata:
  name: tutorial-private-route-table-az1
spec:
  vpcRef:
    from:
      name: tutorial-vpc
  routes:
  - destinationCIDRBlock: 0.0.0.0/0
    natGatewayRef:
      from:
        name: tutorial-natgateway1
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: tutorial-public-subnet1
spec:
  availabilityZone: ap-northeast-2a
  cidrBlock: 10.0.0.0/20
  mapPublicIPOnLaunch: true
  vpcRef:
    from:
      name: tutorial-vpc
  routeTableRefs:
  - from:
      name: tutorial-public-route-table
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: tutorial-private-subnet1
spec:
  availabilityZone: ap-northeast-2a
  cidrBlock: 10.0.128.0/20
  vpcRef:
    from:
      name: tutorial-vpc
  routeTableRefs:
  - from:
      name: tutorial-private-route-table-az1
---
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: SecurityGroup
metadata:
  name: tutorial-security-group
spec:
  description: "ack security group"
  name: tutorial-sg
  vpcRef:
    from:
      name: tutorial-vpc
  ingressRules:
    - ipProtocol: tcp
      fromPort: 22
      toPort: 22
      ipRanges:
        - cidrIP: "0.0.0.0/0"
          description: "ingress"
EOF

# Create the VPC environment

$ kubectl apply -f vpc-workflow.yaml

# [Terminal 1] Once the NATGW is created, the tutorial-private-route-table-az1 route table ID appears, followed by the tutorial-private-subnet1 subnet ID > takes about 5 minutes

$ watch -d kubectl get routetables,subnet
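Because the private subnet ID only appears after the NAT gateway and route table are ready, reading it too early leaves the variable empty. A generic polling sketch that reruns a command until it prints something or a timeout (in seconds) expires; `wait_for_value` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: wait_for_value <timeout_s> <interval_s> <command...>
# Reruns the command until it prints non-empty output, then echoes that output.
wait_for_value() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$((SECONDS + timeout)) value
  while true; do
    # A failing command (e.g. resource not found yet) counts as "no value".
    value=$("$@" 2>/dev/null) || value=""
    if [ -n "$value" ]; then
      printf '%s\n' "$value"
      return 0
    fi
    if [ "$SECONDS" -ge "$deadline" ]; then
      echo "wait_for_value: timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

Usage: `PRISUB1=$(wait_for_value 600 10 kubectl get subnets tutorial-private-subnet1 -o jsonpath={.status.subnetID})`.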

# Verify that the VPC environment was created

$ kubectl describe vpcs

$ kubectl describe internetgateways

$ kubectl describe routetables

$ kubectl describe natgateways

$ kubectl describe elasticipaddresses

$ kubectl describe securitygroups
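Rather than reading each `describe` output, the `ACK.ResourceSynced` condition (visible in the subnet status further down) can be summarized per resource. A sketch that reads `name<TAB>status` lines and prints the names whose status is not yet `True`; it assumes the lines come from a jsonpath query like the one in the usage note, and `unsynced_resources` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read "name<TAB>status" lines, print names not yet synced.
# Returns 0 only when every resource reports "True".
unsynced_resources() {
  local name status rc=0
  while IFS=$'\t' read -r name status; do
    if [ -z "$name" ]; then
      continue
    fi
    if [ "$status" != "True" ]; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: `kubectl get subnets,routetables,natgateways -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="ACK.ResourceSynced")].status}{"\n"}{end}' | unsynced_resources`.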

# Compare the status of the two subnets during deployment

$ kubectl describe subnets

...

Status:

Conditions:

Last Transition Time: 2023-06-06T15:17:24Z

Message: Reference resolution failed

Reason: the referenced resource is not synced yet. resource:RouteTable, namespace:default, name:tutorial-private-route-table-az1

Status: Unknown

Type: ACK.ReferencesResolved

Events: <none>

...

Status:

Ack Resource Metadata:

Arn: arn:aws:ec2:ap-northeast-2:90XXXXXXX:subnet/subnet-0a674cfe893286278

Owner Account ID: 90XXXXXXX

Region: ap-northeast-2

Available IP Address Count: 4091

Conditions:

Last Transition Time: 2023-06-06T15:17:04Z

Status: True

Type: ACK.ReferencesResolved

Last Transition Time: 2023-06-06T15:17:05Z

Message: Resource synced successfully

Reason:

Status: True

Type: ACK.ResourceSynced

...

Create an instance in the public subnet

# Check the public subnet ID

$ PUBSUB1=$(kubectl get subnets tutorial-public-subnet1 -o jsonpath={.status.subnetID})

$ echo $PUBSUB1

# Check the security group ID

$ TSG=$(kubectl get securitygroups tutorial-security-group -o jsonpath={.status.id})

$ echo $TSG

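# Look up the latest Amazon Linux 2 AMI ID (sorted by CreationDate, since describe-images does not return images in date order)

$ AL2AMI=$(aws ec2 describe-images --owners amazon --filters "Name=name,Values=amzn2-ami-hvm-2.0.*-x86_64-gp2" --query 'sort_by(Images, &CreationDate)[-1].ImageId' --output text)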

$ echo $AL2AMI

# Set your own SSH key pair name

# MYKEYPAIR=<your own SSH key pair name>

$ MYKEYPAIR=kp-hayley3

# Check the variables > make sure the subnet ID in particular is set!

$ echo $PUBSUB1 , $TSG , $AL2AMI , $MYKEYPAIR

subnet-0a674cfe893286278 , sg-00549ae56f5c6ffd3 , ami-09fee16f759d3c3a2 , kp-hayley3
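The variable check above relies on eyeballing the `echo` output. A sketch that fails instead when any named variable is empty, using bash indirect expansion (`${!name}`); `require_vars` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: require_vars NAME... -> non-zero if any named variable is empty/unset.
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name:-} expands the variable whose name is stored in $name.
    if [ -z "${!name:-}" ]; then
      echo "require_vars: variable $name is empty" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_vars PUBSUB1 TSG AL2AMI MYKEYPAIR` before writing the Instance manifest stops early if the subnet ID has not been populated yet.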

# [Terminal 1] Monitoring

$ while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table; date ; sleep 1 ; done

-----------------------------------------------------------------------

| DescribeInstances |

+----------------------+----------------+------------------+----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+----------------------+----------------+------------------+----------+

| eks-hayley-ng1-Node | 192.168.3.122 | 3.37.129.232 | running |

| eks-hayley-ng1-Node | 192.168.2.73 | 15.165.228.253 | running |

| eks-hayley-bastion | 192.168.1.100 | 54.180.9.88 | running |

| eks-hayley-ng1-Node | 192.168.1.96 | 13.124.82.255 | running |

+----------------------+----------------+------------------+----------+

Wed Jun 7 00:21:22 KST 2023

# Create an instance in the public subnet

$ cat <<EOF > tutorial-bastion-host.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Instance
metadata:
  name: tutorial-bastion-host
spec:
  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2
  instanceType: t3.medium
  subnetID: $PUBSUB1
  securityGroupIDs:
  - $TSG
  keyName: $MYKEYPAIR
  tags:
    - key: producer
      value: ack
EOF

$ kubectl apply -f tutorial-bastion-host.yaml

instance.ec2.services.k8s.aws/tutorial-bastion-host created

# Verify the instance

$ kubectl get instance

NAME ID

tutorial-bastion-host i-069f63bcfde11935e

$ kubectl describe instance

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

Connect to the instance in the public subnet

$ ssh -i <your key pair file> ec2-user@<public IP of the instance in the public subnet>

------

# After connecting to the instance in the public subnet, check outbound internet connectivity

ping -c 2 8.8.8.8

exit

------

Modify the security group policy: add an egress rule

$ cat <<EOF > modify-sg.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: SecurityGroup
metadata:
  name: tutorial-security-group
spec:
  description: "ack security group"
  name: tutorial-sg
  vpcRef:
    from:
      name: tutorial-vpc
  ingressRules:
    - ipProtocol: tcp
      fromPort: 22
      toPort: 22
      ipRanges:
        - cidrIP: "0.0.0.0/0"
          description: "ingress"
  egressRules:
    - ipProtocol: '-1'
      ipRanges:
        - cidrIP: "0.0.0.0/0"
          description: "egress"
EOF

$ kubectl apply -f modify-sg.yaml

securitygroup.ec2.services.k8s.aws/tutorial-security-group configured

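# Verify the change >> check the outbound rule on the security group

$ kubectl logs -n $ACK_SYSTEM_NAMESPACE -l k8s-app=ec2-chart -f

Connect to the instance in the public subnet and check outbound internet connectivity

$ ssh -i <your key pair file> ec2-user@<public IP of the instance in the public subnet>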

------

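# After connecting to the instance in the public subnet, check outbound internet connectivity

ping -c 10 8.8.8.8

curl ipinfo.io/ip ; echo # Which public IP is printed?

exit

------

Create an instance in the private subnet

# Check the private subnet ID >> the RT/subnet IDs only appear after the NATGW is created, so this takes a while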

$ PRISUB1=$(kubectl get subnets tutorial-private-subnet1 -o jsonpath={.status.subnetID})

$ echo $PRISUB1

# Check the variables > make sure the private subnet ID in particular is set!

$ echo $PRISUB1 , $TSG , $AL2AMI , $MYKEYPAIR

subnet-0a7ab8b02c500aed1 , sg-00549ae56f5c6ffd3 , ami-09fee16f759d3c3a2 , kp-hayley3

# Create an instance in the private subnet

$ cat <<EOF > tutorial-instance-private.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Instance
metadata:
  name: tutorial-instance-private
spec:
  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2
  instanceType: t3.medium
  subnetID: $PRISUB1
  securityGroupIDs:
  - $TSG
  keyName: $MYKEYPAIR
  tags:
    - key: producer
      value: ack
EOF

$ kubectl apply -f tutorial-instance-private.yaml

instance.ec2.services.k8s.aws/tutorial-instance-private created

# Verify the instances

$ kubectl get instance

NAME ID

tutorial-bastion-host i-069f63bcfde11935e

tutorial-instance-private i-017d6292417fa213f

$ kubectl describe instance

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

-----------------------------------------------------------------------

| DescribeInstances |

+----------------------+----------------+------------------+----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+----------------------+----------------+------------------+----------+

| eks-hayley-ng1-Node | 192.168.3.122 | 3.37.129.232 | running |

| eks-hayley-ng1-Node | 192.168.2.73 | 15.165.228.253 | running |

| eks-hayley-bastion | 192.168.1.100 | 54.180.9.88 | running |

| None | 10.0.130.184 | None | running |

| eks-hayley-ng1-Node | 192.168.1.96 | 13.124.82.255 | running |

| None | 10.0.9.66 | 43.202.67.216 | running |

+----------------------+----------------+------------------+----------+

Set up SSH tunneling through the instance in the public subnet

$ ssh -i <your key pair file> -L <any local port>:<private IP of the instance in the private subnet>:22 ec2-user@<public IP of the instance in the public subnet> -v

$ ssh -i ~/.ssh/kp-hayley3.pem -L 9999:10.0.129.196:22 ec2-user@3.34.96.12 -v

---

Leave this session connected

---

When you SSH to your chosen local port, you land on the instance in the private subnet

$ ssh -i <your key pair file> -p <your local port> ec2-user@localhost

$ ssh -i ~/.ssh/kp-hayley3.pem -p 9999 ec2-user@localhost

---

# Check the IP and network information

ip -c addr

sudo ss -tnp

ping -c 2 8.8.8.8

curl ipinfo.io/ip ; echo # Which public IP is printed?

exit

---

Delete the resources after the lab

$ kubectl delete -f tutorial-bastion-host.yaml && kubectl delete -f tutorial-instance-private.yaml

$ kubectl delete -f vpc-workflow.yaml # deleting all the VPC-related resources takes a while

RDS

Install the ACK RDS Controller with Helm: link, Public-Repo, GitHub

# Set the service name variable and download the helm chart

$ export SERVICE=rds

$ export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$ helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$ tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the helm chart

$ tree ~/$SERVICE-chart

/root/rds-chart

├── Chart.yaml

├── crds

│ ├── rds.services.k8s.aws_dbclusterparametergroups.yaml

│ ├── rds.services.k8s.aws_dbclusters.yaml

│ ├── rds.services.k8s.aws_dbinstances.yaml

│ ├── rds.services.k8s.aws_dbparametergroups.yaml

│ ├── rds.services.k8s.aws_dbproxies.yaml

│ ├── rds.services.k8s.aws_dbsubnetgroups.yaml

│ ├── rds.services.k8s.aws_globalclusters.yaml

│ ├── services.k8s.aws_adoptedresources.yaml

│ └── services.k8s.aws_fieldexports.yaml

├── templates

│ ├── cluster-role-binding.yaml

│ ├── cluster-role-controller.yaml

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── metrics-service.yaml

│ ├── NOTES.txt

│ ├── role-reader.yaml

│ ├── role-writer.yaml

│ └── service-account.yaml

├── values.schema.json

└── values.yaml

2 directories, 21 files

# Install the ACK RDS-Controller

$ export ACK_SYSTEM_NAMESPACE=ack-system

$ export AWS_REGION=ap-northeast-2

$ helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation

$ helm list --namespace $ACK_SYSTEM_NAMESPACE

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

ack-ec2-controller ack-system 1 2023-06-06 23:43:07.430794345 +0900 KST deployed ec2-chart-1.0.3 1.0.3

ack-rds-controller ack-system 1 2023-06-07 00:33:25.1594095 +0900 KST deployed rds-chart-1.1.4 1.1.4

$ kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

NAME READY STATUS RESTARTS AGE

ack-rds-controller-rds-chart-6d59dfdfd7-wwfwk 1/1 Running 0 9s

$ kubectl get crd | grep $SERVICE

dbclusterparametergroups.rds.services.k8s.aws 2023-06-06T15:33:24Z

dbclusters.rds.services.k8s.aws 2023-06-06T15:33:24Z

dbinstances.rds.services.k8s.aws 2023-06-06T15:33:24Z

dbparametergroups.rds.services.k8s.aws 2023-06-06T15:33:24Z

dbproxies.rds.services.k8s.aws 2023-06-06T15:33:24Z

dbsubnetgroups.rds.services.k8s.aws 2023-06-06T15:33:24Z

globalclusters.rds.services.k8s.aws 2023-06-06T15:33:25Z

IRSA setup: recommended policy AmazonRDSFullAccess (ARN)

# Create an iamserviceaccount - AWS IAM role bound to a Kubernetes service account

$ eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace $ACK_SYSTEM_NAMESPACE \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonRDSFullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

# Verify >> in the web console, check CloudFormation Stack >> IAM Role

$ eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

ack-system ack-ec2-controller arn:aws:iam::90XXXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ac-Role1-M7S7J3TY8GJ6

ack-system ack-rds-controller arn:aws:iam::90XXXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ac-Role1-B9NAGDYAOV00

ack-system ack-s3-controller arn:aws:iam::90XXXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ac-Role1-YGYJ3A40ECQ2

kube-system aws-load-balancer-controller arn:aws:iam::90XXXXXXXX:role/eksctl-eks-hayley-addon-iamserviceaccount-ku-Role1-1ILL3U143PX5R

# Inspecting the newly created Kubernetes Service Account, we can see the role we want it to assume in our pod.

$ kubectl get sa -n $ACK_SYSTEM_NAMESPACE

NAME SECRETS AGE

ack-ec2-controller 0 63m

ack-rds-controller 0 13m

default 0 119m

$ kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

# Restart ACK service controller deployment using the following commands.

$ kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

deployment.apps/ack-rds-controller-rds-chart restarted

# Verify that applying IRSA added the Env and Volume entries

$ kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

...

Create and delete AWS RDS for MariaDB (link)

# Create a Secret for the DB password

$ RDS_INSTANCE_NAME="<your instance name>"

$ RDS_INSTANCE_PASSWORD="<your instance password>"

$ RDS_INSTANCE_NAME=myrds

$ RDS_INSTANCE_PASSWORD=qwe12345

$ kubectl create secret generic "${RDS_INSTANCE_NAME}-password" --from-literal=password="${RDS_INSTANCE_PASSWORD}"

# Verify

$ kubectl get secret $RDS_INSTANCE_NAME-password

NAME TYPE DATA AGE

myrds-password Opaque 1 10s
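The Secret's `.data.password` field holds the literal value base64-encoded, not encrypted. Anyone allowed to `get` the Secret can read the password back. These helpers reproduce that encoding locally. This is a sketch that assumes GNU coreutils `base64` (`-d` to decode). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Secret .data values are base64-encoded, not encrypted.
# These helpers mirror what happens to the --from-literal value.
encode_secret_value() { printf '%s' "$1" | base64; }
decode_secret_value() { printf '%s' "$1" | base64 -d; }

# Usage (against a live cluster): read the stored password back
#   kubectl get secret "${RDS_INSTANCE_NAME}-password" \
#     -o jsonpath='{.data.password}' | base64 -d; echo
```

For example, `encode_secret_value qwe12345` prints `cXdlMTIzNDU=`.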

# [Terminal 1] Monitoring

$ RDS_INSTANCE_NAME=myrds

$ watch -d "kubectl describe dbinstance ${RDS_INSTANCE_NAME} | grep 'Db Instance Status'"

# Create the RDS instance: takes up to about 15 minutes >> try adding other required options such as security groups and subnets!

$ cat <<EOF > rds-mariadb.yaml
apiVersion: rds.services.k8s.aws/v1alpha1
kind: DBInstance
metadata:
  name: "${RDS_INSTANCE_NAME}"
spec:
  allocatedStorage: 20
  dbInstanceClass: db.t4g.micro
  dbInstanceIdentifier: "${RDS_INSTANCE_NAME}"
  engine: mariadb
  engineVersion: "10.6"
  masterUsername: "admin"
  masterUserPassword:
    namespace: default
    name: "${RDS_INSTANCE_NAME}-password"
    key: password
EOF

$ kubectl apply -f rds-mariadb.yaml
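`cat <<EOF` substitutes `${RDS_INSTANCE_NAME}` at the moment rds-mariadb.yaml is written. The file is therefore only correct if the variable was set in the current shell. If it was empty, the name fields silently become `""` and `"-password"`. The contrast with a quoted delimiter is easy to see with two hypothetical helpers.

```shell
#!/usr/bin/env bash
# An unquoted heredoc delimiter (<<EOF) expands variables when the text is written;
# a quoted one (<<'EOF') writes the text literally.
render_unquoted() {
  local RDS_INSTANCE_NAME="$1"
  cat <<EOF
name: "${RDS_INSTANCE_NAME}-password"
EOF
}

render_quoted() {
  cat <<'EOF'
name: "${RDS_INSTANCE_NAME}-password"
EOF
}

# Guard to run before generating the manifest in a fresh shell:
#   : "${RDS_INSTANCE_NAME:?set RDS_INSTANCE_NAME first}"
```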

# Verify creation

$ kubectl get dbinstances ${RDS_INSTANCE_NAME}

NAME STATUS

myrds creating

$ kubectl describe dbinstance "${RDS_INSTANCE_NAME}"

$ aws rds describe-db-instances --db-instance-identifier $RDS_INSTANCE_NAME | jq

$ kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: creating

$ kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: backing-up

$ kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'

Db Instance Status: available
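The repeated describe/grep checks above amount to a polling loop. The `kubectl wait` step that follows does this natively for Kubernetes conditions. For values that are not conditions, a hand-rolled loop like this works. It is a sketch: `wait_for_output` and `rds_status` are hypothetical helpers. The jsonpath `.status.dbInstanceStatus` is an assumption about where the "Db Instance Status" value lives in the DBInstance status.

```shell
#!/usr/bin/env bash
# wait_for_output TARGET TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Runs COMMAND repeatedly until its output equals TARGET or the timeout passes.
wait_for_output() {
  local target="$1" timeout="$2" interval="$3"
  shift 3
  local start=$SECONDS out
  while :; do
    out="$("$@")"
    if [ "$out" = "$target" ]; then
      echo "reached: $target"
      return 0
    fi
    if [ $((SECONDS - start)) -ge "$timeout" ]; then
      echo "timed out after ${timeout}s; last output: $out" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Hypothetical status helper (assumed field path, see lead-in).
rds_status() {
  kubectl get dbinstance "$1" -o jsonpath='{.status.dbInstanceStatus}'
}

# Usage:
#   wait_for_output available 900 10 rds_status "$RDS_INSTANCE_NAME"
```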

# Wait for creation to finish: the command exits normally once the condition given in --for is met

$ kubectl wait dbinstances ${RDS_INSTANCE_NAME} --for=condition=ACK.ResourceSynced --timeout=15m

dbinstance.rds.services.k8s.aws/myrds condition met

Connecting to MariaDB (link): FieldExport

Create the FieldExports

$ RDS_INSTANCE_CONN_CM="${RDS_INSTANCE_NAME}-conn-cm"

$ cat <<EOF > rds-field-exports.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ${RDS_INSTANCE_CONN_CM}
data: {}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-host
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".status.endpoint.address"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-port
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".status.endpoint.port"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-user
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".spec.masterUsername"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
EOF

$ kubectl apply -f rds-field-exports.yaml

fieldexport.services.k8s.aws/myrds-host created

fieldexport.services.k8s.aws/myrds-port created

fieldexport.services.k8s.aws/myrds-user created

# Check status: address and port

$ kubectl get dbinstances myrds -o jsonpath={.status.endpoint} | jq

{

"address": "myrds.cjzeahiftlk0.ap-northeast-2.rds.amazonaws.com",

"hostedZoneID": "ZLA2NUCOLGUUR",

"port": 3306

}

# Check status: masterUsername

$ kubectl get dbinstances myrds -o jsonpath={.spec.masterUsername} ; echo

admin

# Check the ConfigMap

$ kubectl get cm myrds-conn-cm -o yaml | kubectl neat | yh

apiVersion: v1
kind: ConfigMap
metadata:
  name: myrds-conn-cm
  namespace: default

# Check the FieldExport resources

$ kubectl get crd | grep fieldexport

fieldexports.services.k8s.aws 2023-06-06T14:43:07Z

$ kubectl get fieldexport

NAME AGE

myrds-host 93s

myrds-port 93s

myrds-user 93s
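Each FieldExport writes one key into the target ConfigMap. The Pod manifest later in this post looks those keys up as `<namespace>.<FieldExport name>`, for example `default.myrds-host`. The key format here is taken from those `configMapKeyRef` entries. This small sketch, with hypothetical helper names, prints the keys expected for one instance. It is handy for cross-checking against `kubectl get cm myrds-conn-cm -o yaml` once the exports have synced.

```shell
#!/usr/bin/env bash
# fieldexport_key NAMESPACE FIELDEXPORT_NAME -> "<namespace>.<name>"
fieldexport_key() {
  printf '%s.%s\n' "$1" "$2"
}

# rds_conn_keys NAMESPACE INSTANCE
# Prints the keys for the -host, -port and -user FieldExports created above.
rds_conn_keys() {
  local ns="$1" instance="$2" suffix
  for suffix in host port user; do
    fieldexport_key "$ns" "${instance}-${suffix}"
  done
}
```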

$ kubectl get fieldexport myrds-host -o yaml | k neat | yh
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: myrds-host
  namespace: default
spec:
  from:
    path: .status.endpoint.address
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: myrds
  to:
    kind: configmap
    name: myrds-conn-cm

Create a Pod that uses RDS

$ APP_NAMESPACE=default

$ cat <<EOF > rds-pods.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
  namespace: ${APP_NAMESPACE}
spec:
  containers:
  - image: busybox
    name: myapp
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
    env:
    - name: DBHOST
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-host"
    - name: DBPORT
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-port"
    - name: DBUSER
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-user"
    - name: DBPASSWORD
      valueFrom:
        secretKeyRef:
          name: "${RDS_INSTANCE_NAME}-password"
          key: password
EOF

$ kubectl apply -f rds-pods.yaml

pod/app created

# Verify creation

$ kubectl get pod app

NAME READY STATUS RESTARTS AGE

app 1/1 Running 0 10s

# Check the Pod's environment variables

$ kubectl exec -it app -- env | grep DB

DBHOST=myrds.cb79jlim4dyq.ap-northeast-2.rds.amazonaws.com

DBPORT=3306

DBUSER=admin

DBPASSWORD=qwe12345

Modify the RDS instance and verify the result (the steps below change the DB identifier)

# [Terminal]

$ watch -d "kubectl get dbinstance; echo; kubectl get cm myrds-conn-cm -o yaml | kubectl neat"

# If you change RDS directly as below, the RDS controller does not track that change, so the k8s state and the AWS state fall out of sync

# Change the DB identifier to studyend

$ aws rds modify-db-instance --db-instance-identifier $RDS_INSTANCE_NAME --new-db-instance-identifier studyend --apply-immediately

# Update the DB identifier in the k8s resource >> what happens?

$ kubectl patch dbinstance myrds --type=merge -p '{"spec":{"dbInstanceIdentifier":"studyend"}}'

dbinstance.rds.services.k8s.aws/myrds patched

# Verify

$ kubectl get dbinstance myrds

NAME STATUS

myrds available

$ kubectl describe dbinstance myrds

Let's check whether the changed status information is finally reflected

# Check status: check whether the address has changed!

$ kubectl get dbinstances myrds -o jsonpath={.status.endpoint} | jq

{

"address": "myrds.cjzeahiftlk0.ap-northeast-2.rds.amazonaws.com",

"hostedZoneID": "ZLA2NUCOLGUUR",

"port": 3306

}

# Check the Pod's environment variables >> because the values were injected as env vars, the running Pod cannot see the changed information

$ kubectl exec -it app -- env | grep DB

DBHOST=myrds.cjzeahiftlk0.ap-northeast-2.rds.amazonaws.com

DBPORT=3306

DBUSER=admin

DBPASSWORD=qwe12345
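Env values are fixed when the container starts, so a Pod only sees new ConfigMap values after it is recreated, as the next step shows. For Deployments, a common way to automate this is to stamp a hash of the config into the Pod template, so that any config change triggers a rollout. This is the `checksum/config` pattern from the Helm docs. `kubectl rollout restart` does something similar with a `kubectl.kubernetes.io/restartedAt` annotation. This is a minimal sketch: the helper names and the Deployment name `myapp` are hypothetical, and `sha256sum` assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# config_checksum DATA -> hex SHA-256 of DATA
config_checksum() {
  printf '%s' "$1" | sha256sum | cut -d' ' -f1
}

# restart_patch CHECKSUM -> merge patch that sets a Pod template annotation.
# Changing a template annotation makes the Deployment roll out new Pods.
restart_patch() {
  printf '{"spec":{"template":{"metadata":{"annotations":{"checksum/config":"%s"}}}}}\n' "$1"
}

# Usage (hypothetical Deployment "myapp" consuming myrds-conn-cm):
#   sum="$(config_checksum "$(kubectl get cm myrds-conn-cm -o jsonpath='{.data}')")"
#   kubectl patch deployment myapp --type=merge -p "$(restart_patch "$sum")"
```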

# Delete and recreate the Pod, then check

$ kubectl delete pod app && kubectl apply -f rds-pods.yaml

# Check the Pod's environment variables >> check the updated values!

# In other words, for Deployments, DaemonSets, and StatefulSets, a rollout can apply the env changes!

$ kubectl exec -it app -- env | grep DB

DBHOST=myrds.cjzeahiftlk0.ap-northeast-2.rds.amazonaws.com

DBPORT=3306

DBUSER=admin

DBPASSWORD=qwe12345

Delete RDS > delete myrds directly in the AWS web management console

# Delete the Pod

$ kubectl delete pod app

# Delete RDS

$ kubectl delete -f rds-mariadb.yaml

[Challenge 1] Try the other services in the AWS ACK Tutorials yourself (link)

Flux

- Flux is a GitOps tool for Kubernetes. [Reference]

Install the Flux CLI and bootstrap

# Install the Flux CLI

$ curl -s https://fluxcd.io/install.sh | sudo bash

$ . <(flux completion bash)

# Check the version

$ flux --version

flux version 2.0.0-rc.5

# Set variables for your own GitHub token and username

$ export GITHUB_TOKEN=<your-token>

$ export GITHUB_USER=<your-username>

$ export GITHUB_TOKEN=ghp_###

$ export GITHUB_USER=hayley
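The placeholders above (`<your-token>`, `<your-username>`) are easy to leave in place by accident, and `flux bootstrap` would then fail against GitHub. A small guard can catch that before bootstrapping. This is a sketch, and `require_env` is a hypothetical helper. It treats an empty value, or one starting with `<`, as unset.

```shell
#!/usr/bin/env bash
# require_env NAME...
# Fails if any named variable is empty or still holds a "<...>" placeholder.
require_env() {
  local name value missing=0
  for name in "$@"; do
    value="${!name}"   # indirect expansion: the value of the variable called $name
    case "$value" in
      ""|"<"*)
        echo "set $name before running flux bootstrap" >&2
        missing=1
        ;;
    esac
  done
  return "$missing"
}

# Usage: run the bootstrap command below only if the check passes
#   require_env GITHUB_TOKEN GITHUB_USER && flux bootstrap github --owner=$GITHUB_USER ...
```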

# Bootstrap

## Creates a git repository fleet-infra on your GitHub account.

## Adds Flux component manifests to the repository.

## Deploys Flux Components to your Kubernetes Cluster.

## Configures Flux components to track the path /clusters/my-cluster/ in the repository.

$ flux bootstrap github \
  --owner=$GITHUB_USER \
  --repository=fleet-infra \
  --branch=main \
  --path=./clusters/my-cluster \
  --personal

# Verify the installation

$ kubectl get pods -n flux-system

$ kubectl get-all -n flux-system

$ kubectl get crd | grep fluxc

$ kubectl get gitrepository -n flux-system

NAME URL AGE READY STATUS

flux-system ssh://git@github.com/gasida/fleet-infra 4m6s True stored artifact for revision 'main@sha1:4172548433a9f4e089758c3512b0b24d289e9702'

Install the GitOps tool (link) → install the Flux dashboard: admin / password

# Install the gitops tool

$ curl --silent --location "https://github.com/weaveworks/weave-gitops/releases/download/v0.24.0/gitops-$(uname)-$(uname -m).tar.gz" | tar xz -C /tmp

$ sudo mv /tmp/gitops /usr/local/bin

$ gitops version

# Install the Flux dashboard

$ PASSWORD="password"

$ gitops create dashboard ww-gitops --password=$PASSWORD

# Verify

$ flux -n flux-system get helmrelease

$ kubectl -n flux-system get pod,svc

Ingress configuration

$ CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

$ echo $CERT_ARN
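`aws acm list-certificates` returns every certificate ARN in the region. If the account holds more than one, `$CERT_ARN` contains several whitespace-separated ARNs, and they all end up in the certificate-arn annotation below. This sketch assumes you want exactly one certificate (the one for `$MyDomain`) and fails otherwise, so you can narrow the query first. The helper name `single_cert_arn` is hypothetical.

```shell
#!/usr/bin/env bash
# single_cert_arn "ARN_LIST"
# Succeeds (and prints the ARN) only when exactly one ARN is present.
single_cert_arn() {
  local -a arns
  read -r -a arns <<< "$1"   # split on spaces/tabs, as in --output text
  if [ "${#arns[@]}" -ne 1 ]; then
    echo "expected exactly 1 certificate ARN, got ${#arns[@]}" >&2
    return 1
  fi
  printf '%s\n' "${arns[0]}"
}

# Usage:
#   single_cert_arn "$CERT_ARN" >/dev/null || echo "narrow the ACM query first"
```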

# Ingress configuration

$ cat <<EOT > gitops-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gitops-ingress
  annotations:
    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - host: gitops.$MyDomain
    http:
      paths:
      - backend:
          service:
            name: ww-gitops-weave-gitops
            port:
              number: 9001
        path: /
        pathType: Prefix
EOT

$ kubectl apply -f gitops-ingress.yaml -n flux-system

# Verify the deployment

$ kubectl get ingress -n flux-system

# Check the GitOps access URL >> open it in a browser and check

$ echo -e "GitOps Web https://gitops.$MyDomain"

hello world (kustomize): GitHub (Akbun's repo)

Deploy an nginx manifest from GitHub to Kubernetes, using kustomize for the deployment.

# Create a source: types are git, helm, oci, bucket

# flux create source {source type}

# Create a git source from the repo prepared by Akbun (Sungwook Choi)

$ GITURL="https://github.com/sungwook-practice/fluxcd-test.git"

$ flux create source git nginx-example1 --url=$GITURL --branch=main --interval=30s

# Check the source

$ flux get sources git

$ kubectl -n flux-system get gitrepositories

Create a Flux application: type (kustomization), git source path (--path ./nginx) → check it in the gitops web dashboard

# [Terminal] Monitoring

$ watch -d kubectl get pod,svc nginx-example1

# Create the Flux application: nginx-example1

$ flux create kustomization nginx-example1 --target-namespace=default --interval=1m --source=nginx-example1 --path="./nginx" --health-check-timeout=2m

# Verify

$ kubectl get pod,svc nginx-example1

$ kubectl get kustomizations -n flux-system

$ flux get kustomizations

Delete the application

# [Terminal] Monitoring

$ watch -d kubectl get pod,svc nginx-example1

# Delete the Flux application >> what happens to the Pod and Service? The application was created with --prune set to false (the default)

$ flux delete kustomization nginx-example1

$ flux get kustomizations

$ kubectl get pod,svc nginx-example1

# Recreate the Flux application with --prune set to true

$ flux create kustomization nginx-example1 \
  --target-namespace=default \
  --prune=true \
  --interval=1m \
  --source=nginx-example1 \
  --path="./nginx" \
  --health-check-timeout=2m

# Verify

$ flux get kustomizations

$ kubectl get pod,svc nginx-example1

# Delete the Flux application >> what happens to the Pod and Service this time?

$ flux delete kustomization nginx-example1

$ flux get kustomizations

$ kubectl get pod,svc nginx-example1
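The two deletions above behave differently because of `--prune`. Flux's kustomize-controller records what it applied in the Kustomization's inventory. With pruning enabled, it garbage-collects objects that drop out of that set, which means everything when the Kustomization itself is deleted. The set difference below is only a conceptual sketch of that decision, not Flux's actual code. The object names and the helper `objects_to_delete` are illustrative.

```shell
#!/usr/bin/env bash
# objects_to_delete PRUNE PREVIOUS CURRENT
# Prints objects applied before (PREVIOUS) but no longer wanted (CURRENT),
# only when PRUNE=true. Inputs are newline-separated "Kind/namespace/name".
objects_to_delete() {
  local prune="$1" previous="$2" current="$3"
  [ "$prune" = "true" ] || return 0   # prune=false: leave everything in place
  LC_ALL=C comm -23 \
    <(printf '%s\n' "$previous" | sed '/^$/d' | LC_ALL=C sort) \
    <(printf '%s\n' "$current" | sed '/^$/d' | LC_ALL=C sort)
}

# Deleting the Kustomization is like an empty CURRENT set:
#   objects_to_delete true "$inventory" ""
```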

# Delete the Flux source

$ flux delete source git nginx-example1

# Check the source

$ flux get sources git

$ kubectl -n flux-system get gitrepositories

Official docs sample walkthrough (link)

Clone the git repository

# Clone the git repository: enter your own GitHub username and token

$ git clone https://github.com/$GITHUB_USER/fleet-infra

Username for 'https://github.com': <your GitHub username>

Password for 'https://gasida@github.com': <your GitHub token>

# Change into the directory

$ cd fleet-infra

$ tree

Add podinfo repository to Flux (sample)

# Generate the GitRepository YAML file

$ flux create source git podinfo \
  --url=https://github.com/stefanprodan/podinfo \
  --branch=master \
  --interval=30s \
  --export > ./clusters/my-cluster/podinfo-source.yaml

# Check the GitRepository YAML file

$ cat ./clusters/my-cluster/podinfo-source.yaml | yh

# Commit and push the podinfo-source.yaml file to the fleet-infra repository >> check it on GitHub

$ git config --global user.name "Your Name"

$ git config --global user.email "you@example.com"

$ git add -A && git commit -m "Add podinfo GitRepository"

$ git push

Username for 'https://github.com': <your GitHub username>

Password for 'https://gasida@github.com': <your GitHub token>

# Check the source

$ flux get sources git

$ kubectl -n flux-system get gitrepositories

Deploy podinfo application: Configure Flux to build and apply the kustomize directory located in the podinfo repository (link)

# [Terminal]

$ watch -d kubectl get pod,svc

# Use the flux create command to create a Kustomization that applies the podinfo deployment.

$ flux create kustomization podinfo \
  --target-namespace=default \
  --source=podinfo \
  --path="./kustomize" \
  --prune=true \
  --interval=5m \
  --export > ./clusters/my-cluster/podinfo-kustomization.yaml

# Check the file

$ cat ./clusters/my-cluster/podinfo-kustomization.yaml | yh

# Commit and push the Kustomization manifest to the repository:

$ git add -A && git commit -m "Add podinfo Kustomization"

$ git push

# Verify

$ kubectl get pod,svc

$ kubectl get kustomizations -n flux-system

$ flux get kustomizations

$ tree

Watch Flux sync the application

# [Terminal]

$ watch -d kubectl get pod,svc

# Try changing the number of Pods >> what happens?

$ kubectl scale deployment podinfo --replicas 1

...

$ kubectl scale deployment podinfo --replicas 3

Delete

#

$ flux delete kustomization podinfo

$ flux delete source git podinfo

#

$ flux uninstall --namespace=flux-system

Delete the fleet-infra repo on GitHub

Reference: GitOps with Amazon EKS Workshop | Flux and ArgoCD (YouTube)

Clean up resources

# Delete the Flux lab resources

$ flux uninstall --namespace=flux-system

# Delete the Helm chart

$ helm uninstall -n monitoring kube-prometheus-stack

# Delete the EKS cluster

$ eksctl delete cluster --name $CLUSTER_NAME && aws cloudformation delete-stack --sta

blog migration project

written in 2023.6.11

https://medium.com/techblog-hayleyshim/aws-eks-automation-b8f14d9b1bb5
