Hello. This post summarizes what I studied in the third cohort of the Kubernetes Advanced Networking Study (KANS). It takes a closer look at the components of the Calico CNI.

[Prerequisites]

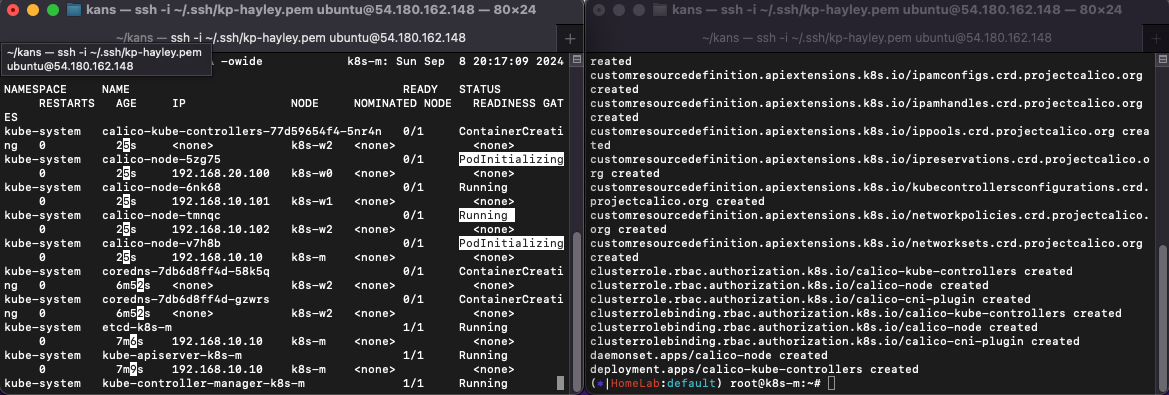

Deploying Kubernetes for the Calico lab

- Lab environment: K8S v1.30.X, node OS (Ubuntu 22.04 LTS), CNI (Calico v3.28.1, IPIP, NAT enabled), iptables proxy mode

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/kans/kans-3w.yaml

# Deploy the CloudFormation stack

# aws cloudformation deploy --template-file kans-3w.yaml --stack-name mylab --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr=<My Home Public IP Address>/32 --region ap-northeast-2

Example) aws cloudformation deploy --template-file kans-3w.yaml --stack-name mylab --parameter-overrides KeyName=kp-hayley SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 --region ap-northeast-2

## Tip. To change the instance type: MyInstanceType=t2.micro

Example) aws cloudformation deploy --template-file kans-3w.yaml --stack-name mylab --parameter-overrides MyInstanceType=t2.micro KeyName=kp-hayley SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 --region ap-northeast-2

# After the CloudFormation stack has been deployed, print the k8s-m EC2 IP

aws cloudformation describe-stacks --stack-name mylab --query 'Stacks[*].Outputs[0].OutputValue' --output text --region ap-northeast-2

# [Monitoring] CloudFormation stack status: confirm that creation has completed

while true; do
  date
  AWS_PAGER="" aws cloudformation list-stacks \
    --stack-status-filter CREATE_IN_PROGRESS CREATE_COMPLETE CREATE_FAILED DELETE_IN_PROGRESS DELETE_FAILED \
    --query "StackSummaries[*].{StackName:StackName, StackStatus:StackStatus}" \
    --output table
  sleep 1
done

# SSH into the k8s-m EC2 instance

ssh -i ~/.ssh/kp-hayley.pem ubuntu@$(aws cloudformation describe-stacks --stack-name mylab --query 'Stacks[*].Outputs[0].OutputValue' --output text --region ap-northeast-2)
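The `Outputs[0]` index used above works because the template defines a single output. A slightly more defensive sketch selects the output by its key (`Serverhost`, as named in the template's Outputs section) and waits for stack creation with the AWS CLI waiter instead of polling. The function names `get_master_ip` and `wait_for_master_ip` are hypothetical helpers, not part of the study material.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original lab scripts).

# Print the k8s-m public IP by selecting the stack output named "Serverhost".
get_master_ip() {
  local stack="${1:-mylab}" region="${2:-ap-northeast-2}"
  aws cloudformation describe-stacks \
    --stack-name "$stack" --region "$region" \
    --query "Stacks[0].Outputs[?OutputKey=='Serverhost'].OutputValue" \
    --output text
}

# Block until stack creation finishes, then print the IP.
wait_for_master_ip() {
  local stack="${1:-mylab}" region="${2:-ap-northeast-2}"
  aws cloudformation wait stack-create-complete \
    --stack-name "$stack" --region "$region" || return 1
  get_master_ip "$stack" "$region"
}
```

A possible use, assuming the same key file as above: `ssh -i ~/.ssh/kp-hayley.pem ubuntu@"$(wait_for_master_ip mylab)"`.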

AWSTemplateFormatVersion: '2010-09-09'

Metadata:

AWS::CloudFormation::Interface:

ParameterGroups:

- Label:

default: "<<<<< Deploy EC2 >>>>>"

Parameters:

- KeyName

- SgIngressSshCidr

- MyInstanceType

- LatestAmiId

- Label:

default: "<<<<< Region AZ >>>>>"

Parameters:

- TargetRegion

- AvailabilityZone1

- AvailabilityZone2

- Label:

default: "<<<<< VPC Subnet >>>>>"

Parameters:

- VpcBlock

- PublicSubnet1Block

- PublicSubnet2Block

Parameters:

KeyName:

Description: Name of an existing EC2 KeyPair to enable SSH access to the instances.

Type: AWS::EC2::KeyPair::KeyName

ConstraintDescription: must be the name of an existing EC2 KeyPair.

SgIngressSshCidr:

Description: The IP address range that can be used to communicate to the EC2 instances.

Type: String

MinLength: '9'

MaxLength: '18'

Default: 0.0.0.0/0

AllowedPattern: (\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})/(\d{1,2})

ConstraintDescription: must be a valid IP CIDR range of the form x.x.x.x/x.

MyInstanceType:

Description: Enter EC2 Type(Spec) Ex) t2.micro.

Type: String

Default: t3.medium

LatestAmiId:

Description: (DO NOT CHANGE)

Type: 'AWS::SSM::Parameter::Value<AWS::EC2::Image::Id>'

Default: '/aws/service/canonical/ubuntu/server/22.04/stable/current/amd64/hvm/ebs-gp2/ami-id'

AllowedValues:

- /aws/service/canonical/ubuntu/server/22.04/stable/current/amd64/hvm/ebs-gp2/ami-id

TargetRegion:

Type: String

Default: ap-northeast-2

AvailabilityZone1:

Type: String

Default: ap-northeast-2a

AvailabilityZone2:

Type: String

Default: ap-northeast-2c

VpcBlock:

Type: String

Default: 192.168.0.0/16

PublicSubnet1Block:

Type: String

Default: 192.168.10.0/24

PublicSubnet2Block:

Type: String

Default: 192.168.20.0/24

Resources:

# VPC

KansVPC:

Type: AWS::EC2::VPC

Properties:

CidrBlock: !Ref VpcBlock

EnableDnsSupport: true

EnableDnsHostnames: true

Tags:

- Key: Name

Value: Kans-VPC

# PublicSubnets

PublicSubnet1:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone: !Ref AvailabilityZone1

CidrBlock: !Ref PublicSubnet1Block

VpcId: !Ref KansVPC

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: Kans-PublicSubnet1

PublicSubnet2:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone: !Ref AvailabilityZone2

CidrBlock: !Ref PublicSubnet2Block

VpcId: !Ref KansVPC

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: Kans-PublicSubnet2

InternetGateway:

Type: AWS::EC2::InternetGateway

VPCGatewayAttachment:

Type: AWS::EC2::VPCGatewayAttachment

Properties:

InternetGatewayId: !Ref InternetGateway

VpcId: !Ref KansVPC

PublicSubnetRouteTable:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref KansVPC

Tags:

- Key: Name

Value: Kans-PublicSubnetRouteTable

PublicSubnetRoute:

Type: AWS::EC2::Route

Properties:

RouteTableId: !Ref PublicSubnetRouteTable

DestinationCidrBlock: 0.0.0.0/0

GatewayId: !Ref InternetGateway

PublicSubnet1RouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PublicSubnet1

RouteTableId: !Ref PublicSubnetRouteTable

PublicSubnet2RouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PublicSubnet2

RouteTableId: !Ref PublicSubnetRouteTable

# EC2 Hosts

EC2SG:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: Kans EC2 Security Group

VpcId: !Ref KansVPC

Tags:

- Key: Name

Value: Kans-SG

SecurityGroupIngress:

- IpProtocol: '-1'

CidrIp: !Ref SgIngressSshCidr

- IpProtocol: '-1'

CidrIp: !Ref VpcBlock

- IpProtocol: '-1'

CidrIp: 172.16.0.0/16

- IpProtocol: '-1'

CidrIp: 10.200.1.0/24

EC21ENI1:

Type: AWS::EC2::NetworkInterface

Properties:

SubnetId: !Ref PublicSubnet1

Description: !Sub Kans-EC21-ENI1

GroupSet:

- !Ref EC2SG

PrivateIpAddress: 192.168.10.10

SourceDestCheck: false

Tags:

- Key: Name

Value: !Sub Kans-EC21-ENI1

EC22ENI1:

Type: AWS::EC2::NetworkInterface

Properties:

SubnetId: !Ref PublicSubnet1

Description: !Sub Kans-EC22-ENI1

GroupSet:

- !Ref EC2SG

PrivateIpAddress: 192.168.10.101

SourceDestCheck: false

Tags:

- Key: Name

Value: !Sub Kans-EC22-ENI1

EC23ENI1:

Type: AWS::EC2::NetworkInterface

Properties:

SubnetId: !Ref PublicSubnet1

Description: !Sub Kans-EC23-ENI1

GroupSet:

- !Ref EC2SG

PrivateIpAddress: 192.168.10.102

SourceDestCheck: false

Tags:

- Key: Name

Value: !Sub Kans-EC23-ENI1

EC24ENI1:

Type: AWS::EC2::NetworkInterface

Properties:

SubnetId: !Ref PublicSubnet2

Description: !Sub Kans-EC24-ENI1

GroupSet:

- !Ref EC2SG

PrivateIpAddress: 192.168.20.100

SourceDestCheck: false

Tags:

- Key: Name

Value: !Sub Kans-EC24-ENI1

EC21:

Type: AWS::EC2::Instance

Properties:

InstanceType: !Ref MyInstanceType

ImageId: !Ref LatestAmiId

KeyName: !Ref KeyName

Tags:

- Key: Name

Value: k8s-m

NetworkInterfaces:

- NetworkInterfaceId: !Ref EC21ENI1

DeviceIndex: '0'

BlockDeviceMappings:

- DeviceName: /dev/sda1

Ebs:

VolumeType: gp3

VolumeSize: 30

DeleteOnTermination: true

UserData:

Fn::Base64:

!Sub |

#!/bin/bash

hostnamectl --static set-hostname k8s-m

# Config convenience

echo 'alias vi=vim' >> /etc/profile

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & AppArmor

systemctl stop ufw && systemctl disable ufw

systemctl stop apparmor && systemctl disable apparmor

# ubuntu user -> root user

echo "sudo su -" >> /home/ubuntu/.bashrc

# Setting Local DNS Using Hosts file

echo "192.168.10.10 k8s-m" >> /etc/hosts

echo "192.168.10.101 k8s-w1" >> /etc/hosts

echo "192.168.10.102 k8s-w2" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

# add kubernetes repo

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# add docker-ce repo with containerd

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# enable IPv4 forwarding so the node can route pod traffic

echo 1 > /proc/sys/net/ipv4/ip_forward

# load br_netfilter so that bridged packets are processed by iptables

modprobe br_netfilter

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update && apt-get install -y kubelet kubectl kubeadm containerd.io && apt-mark hold kubelet kubeadm kubectl

# containerd configure to default and cgroup managed by systemd

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

# ready to install for k8s

systemctl restart containerd && systemctl enable containerd

systemctl enable --now kubelet

# init kubernetes (w/ containerd)

kubeadm init --token 123456.1234567890123456 --token-ttl 0 --pod-network-cidr=172.16.0.0/16 --apiserver-advertise-address=192.168.10.10 --service-cidr 10.200.1.0/24 --cri-socket=unix:///run/containerd/containerd.sock

# Install packages

apt install tree jq bridge-utils net-tools conntrack ipset wireguard -y

# Install Helm

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

# config for master node only

mkdir -p /root/.kube

cp -i /etc/kubernetes/admin.conf /root/.kube/config

#chown $(id -u):$(id -g) /root/.kube/config

# kubectl completion on bash-completion dir

kubectl completion bash > /etc/bash_completion.d/kubectl

# alias kubectl to k

echo 'alias k=kubectl' >> /etc/profile

echo 'complete -F __start_kubectl k' >> /etc/profile

# Install Kubectx & Kubens

git clone https://github.com/ahmetb/kubectx /opt/kubectx

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

# Install Kubeps

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab"

EC22:

Type: AWS::EC2::Instance

DependsOn: EC21

Properties:

InstanceType: !Ref MyInstanceType

ImageId: !Ref LatestAmiId

KeyName: !Ref KeyName

Tags:

- Key: Name

Value: k8s-w1

NetworkInterfaces:

- NetworkInterfaceId: !Ref EC22ENI1

DeviceIndex: '0'

BlockDeviceMappings:

- DeviceName: /dev/sda1

Ebs:

VolumeType: gp3

VolumeSize: 30

DeleteOnTermination: true

UserData:

Fn::Base64:

!Sub |

#!/bin/bash

hostnamectl --static set-hostname k8s-w1

# Config convenience

echo 'alias vi=vim' >> /etc/profile

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & AppArmor

systemctl stop ufw && systemctl disable ufw

systemctl stop apparmor && systemctl disable apparmor

# ubuntu user -> root user

echo "sudo su -" >> /home/ubuntu/.bashrc

# Setting Local DNS Using Hosts file

echo "192.168.10.10 k8s-m" >> /etc/hosts

echo "192.168.10.101 k8s-w1" >> /etc/hosts

echo "192.168.10.102 k8s-w2" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

# add kubernetes repo

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# add docker-ce repo with containerd

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# enable IPv4 forwarding so the node can route pod traffic

echo 1 > /proc/sys/net/ipv4/ip_forward

# load br_netfilter so that bridged packets are processed by iptables

modprobe br_netfilter

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update && apt-get install -y kubelet kubectl kubeadm containerd.io && apt-mark hold kubelet kubeadm kubectl

# containerd configure to default and cgroup managed by systemd

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

# ready to install for k8s

systemctl restart containerd && systemctl enable containerd

systemctl enable --now kubelet

# Install packages

apt install tree jq bridge-utils net-tools conntrack ipset wireguard -y

# k8s Controlplane Join - API Server 192.168.10.10

kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

EC23:

Type: AWS::EC2::Instance

DependsOn: EC21

Properties:

InstanceType: !Ref MyInstanceType

ImageId: !Ref LatestAmiId

KeyName: !Ref KeyName

Tags:

- Key: Name

Value: k8s-w2

NetworkInterfaces:

- NetworkInterfaceId: !Ref EC23ENI1

DeviceIndex: '0'

BlockDeviceMappings:

- DeviceName: /dev/sda1

Ebs:

VolumeType: gp3

VolumeSize: 30

DeleteOnTermination: true

UserData:

Fn::Base64:

!Sub |

#!/bin/bash

hostnamectl --static set-hostname k8s-w2

# Config convenience

echo 'alias vi=vim' >> /etc/profile

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & AppArmor

systemctl stop ufw && systemctl disable ufw

systemctl stop apparmor && systemctl disable apparmor

# ubuntu user -> root user

echo "sudo su -" >> /home/ubuntu/.bashrc

# Setting Local DNS Using Hosts file

echo "192.168.10.10 k8s-m" >> /etc/hosts

echo "192.168.10.101 k8s-w1" >> /etc/hosts

echo "192.168.10.102 k8s-w2" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

# add kubernetes repo

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# add docker-ce repo with containerd

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# enable IPv4 forwarding so the node can route pod traffic

echo 1 > /proc/sys/net/ipv4/ip_forward

# load br_netfilter so that bridged packets are processed by iptables

modprobe br_netfilter

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update && apt-get install -y kubelet kubectl kubeadm containerd.io && apt-mark hold kubelet kubeadm kubectl

# containerd configure to default and cgroup managed by systemd

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

# ready to install for k8s

systemctl restart containerd && systemctl enable containerd

systemctl enable --now kubelet

# Install packages

apt install tree jq bridge-utils net-tools conntrack ipset wireguard -y

# k8s Controlplane Join - API Server 192.168.10.10

kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

EC24:

Type: AWS::EC2::Instance

DependsOn: EC21

Properties:

InstanceType: !Ref MyInstanceType

ImageId: !Ref LatestAmiId

KeyName: !Ref KeyName

Tags:

- Key: Name

Value: k8s-w0

NetworkInterfaces:

- NetworkInterfaceId: !Ref EC24ENI1

DeviceIndex: '0'

BlockDeviceMappings:

- DeviceName: /dev/sda1

Ebs:

VolumeType: gp3

VolumeSize: 30

DeleteOnTermination: true

UserData:

Fn::Base64:

!Sub |

#!/bin/bash

hostnamectl --static set-hostname k8s-w0

# Config convenience

echo 'alias vi=vim' >> /etc/profile

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw & AppArmor

systemctl stop ufw && systemctl disable ufw

systemctl stop apparmor && systemctl disable apparmor

# ubuntu user -> root user

echo "sudo su -" >> /home/ubuntu/.bashrc

# Setting Local DNS Using Hosts file

echo "192.168.10.10 k8s-m" >> /etc/hosts

echo "192.168.10.101 k8s-w1" >> /etc/hosts

echo "192.168.10.102 k8s-w2" >> /etc/hosts

echo "192.168.20.100 k8s-w0" >> /etc/hosts

# add kubernetes repo

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# add docker-ce repo with containerd

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# enable IPv4 forwarding so the node can route pod traffic

echo 1 > /proc/sys/net/ipv4/ip_forward

# load br_netfilter so that bridged packets are processed by iptables

modprobe br_netfilter

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update && apt-get install -y kubelet kubectl kubeadm containerd.io && apt-mark hold kubelet kubeadm kubectl

# containerd configure to default and cgroup managed by systemd

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

# ready to install for k8s

systemctl restart containerd && systemctl enable containerd

systemctl enable --now kubelet

# Install packages

apt install tree jq bridge-utils net-tools conntrack ipset wireguard -y

# k8s Controlplane Join - API Server 192.168.10.10

kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

Outputs:

Serverhost:

Value: !GetAtt EC21.PublicIp
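The template and the `kubeadm init` line in its UserData rely on three address ranges: the VPC block 192.168.0.0/16, the pod CIDR 172.16.0.0/16, and the service CIDR 10.200.1.0/24. These ranges should not overlap, otherwise node, pod, and service addresses become ambiguous. The following is a minimal pure-bash sketch of that check; the helper names (`ip_to_int`, `cidr_overlap`, `check_lab_cidrs`) are hypothetical and not part of the lab.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that the lab's CIDRs do not overlap.

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 (true) if the two CIDRs overlap.
# Two CIDRs overlap exactly when they agree on the bits of the shorter prefix.
cidr_overlap() {
  local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
  local min=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( min == 0 ? 0 : (0xFFFFFFFF << (32 - min)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip1") & mask ) == ( $(ip_to_int "$ip2") & mask ) ))
}

# Compare every pair of ranges used in this lab (values taken from the template).
check_lab_cidrs() {
  local cidrs=("192.168.0.0/16" "172.16.0.0/16" "10.200.1.0/24")
  local i j
  for ((i = 0; i < ${#cidrs[@]}; i++)); do
    for ((j = i + 1; j < ${#cidrs[@]}; j++)); do
      if cidr_overlap "${cidrs[i]}" "${cidrs[j]}"; then
        echo "overlap: ${cidrs[i]} ${cidrs[j]}"
        return 1
      fi
    done
  done
  echo "no overlap"
}

check_lab_cidrs
```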

Checking the basic configuration

# (Reference) control-plane

## kubeadm init --token 123456.1234567890123456 --token-ttl 0 --pod-network-cidr=172.16.0.0/16 --apiserver-advertise-address=192.168.10.10 --service-cidr 10.200.1.0/24 --cri-socket=unix:///run/containerd/containerd.sock

# worker

## kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

#

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab"

kubens default

#

kubectl cluster-info

kubectl get node -owide

kubectl get service,ep

kubectl get pod -A -owide

#

tree /opt/cni/bin/

ls -l /opt/cni/bin/

#

ip -c route

ip -c addr

iptables -t filter -L

iptables -t nat -L

iptables -t filter -L | wc -l

iptables -t nat -L | wc -l
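The rule counts above are most useful as a baseline, because the same commands are run again after Calico is installed. A minimal sketch for saving and comparing them follows. The helper names and the default file path are hypothetical. It counts the lines of `iptables -S` (one line per policy, chain declaration, or rule) instead of `-L`, whose output also includes headers and blank lines.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing iptables sizes before and after the CNI install.

# Print "<table> <line-count>" for the filter and nat tables.
rule_counts() {
  local table
  for table in filter nat; do
    printf '%s %s\n' "$table" "$(iptables -t "$table" -S | wc -l | tr -d ' ')"
  done
}

# Save the current counts to a file (run this before installing the CNI).
snapshot_rule_counts() {
  rule_counts > "${1:-/tmp/iptables-before-cni.txt}"
}

# Print "<table> <before> <after>" (run this after installing the CNI).
compare_rule_counts() {
  rule_counts | join "${1:-/tmp/iptables-before-cni.txt}" -
}
```

Usage: run `snapshot_rule_counts` now, then `compare_rule_counts` once the calico-node pods are running.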

Installing Calico CNI v3.28.1 - Install, Release, IP pool (subnet)

# Monitoring

watch -d 'kubectl get pod -A -owide'

# calico cni install

## The stock manifest would be: kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/calico.yaml (this lab applies a copy with a 24-bit block size added)

# In the stock YAML, go to line 4946 and add the following

vi calico.yaml

...

# Block size to use for the IPv4 POOL created at startup. Block size for IPv4 should be in the range 20-32. Calico's default is 26; this lab sets 24

- name: CALICO_IPV4POOL_BLOCK_SIZE
  value: "24"

kubectl apply -f https://raw.githubusercontent.com/gasida/KANS/main/kans3/calico-kans.yaml

#

tree /opt/cni/bin/

ls -l /opt/cni/bin/

ip -c route

ip -c addr

iptables -t filter -L

iptables -t nat -L

iptables -t filter -L | wc -l

iptables -t nat -L | wc -l

# calicoctl install

curl -L https://github.com/projectcalico/calico/releases/download/v3.28.1/calicoctl-linux-amd64 -o calicoctl

chmod +x calicoctl && mv calicoctl /usr/bin

calicoctl version

# Check pod status after installing the CNI

kubectl get pod -A -o wide
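The `CALICO_IPV4POOL_BLOCK_SIZE` setting above decides how the IP pool is carved into per-node blocks. A small arithmetic sketch, assuming the pool is the 172.16.0.0/16 pod CIDR passed to `kubeadm init` (the function name `ipam_block_stats` is hypothetical):

```shell
#!/usr/bin/env bash
# Print how many IPAM blocks fit in a pool and how many addresses each block holds.
# Arguments: pool prefix length, block size (prefix length, 20-32 for IPv4).
ipam_block_stats() {
  local pool_len="$1" block_len="$2"
  if (( block_len < pool_len || block_len < 20 || block_len > 32 )); then
    echo "invalid block size" >&2
    return 1
  fi
  echo "blocks=$(( 1 << (block_len - pool_len) )) addresses_per_block=$(( 1 << (32 - block_len) ))"
}

ipam_block_stats 16 24   # this lab: a /16 pool split into /24 blocks
ipam_block_stats 16 26   # for comparison: the same pool split into /26 blocks
```

With /24 blocks each node's block lines up with a familiar /24 subnet, which makes the routing tables in the later sections easier to read.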

Understanding basic Calico communication

Calico : Calico is a networking and security solution that enables Kubernetes workloads and non-Kubernetes/legacy workloads to communicate seamlessly and securely.

Calico components - Docs

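Datastore plugin: where Calico stores its configuration. Choose either the Kubernetes API datastore (kdd) or etcd.

BIRD: an open-source software routing daemon. It advertises the node's pod network range (podCIDR) over the BGP routing protocol, which lets every node reach the pod ranges of all other nodes. It sends routes to and receives routes from its BGP peers, and can also act as a BGP Route Reflector (RR).

Felix: programs the host's dataplane. It maintains pod routes in the host routing table (working alongside the routes BIRD exchanges with other nodes) and configures and manages iptables rules. Its responsibilities are interface management, route management, ACL management, and status reporting.

Confd: watches for changes to Calico's global settings and BGP configuration and, when triggered, applies them to BIRD.

Calico IPAM plugin: allocates pod IP addresses from the IP ranges defined for the cluster.

calico-kube-controllers: watches the cluster for changes that affect Calico's operation.

calicoctl: a CLI that can create, read, update, and delete Calico objects; in other words, it has access to the datastore.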
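One way to see BIRD's role on a node is to look at the kernel routes tagged with its protocol name. The sketch below assumes that Calico's BIRD installs routes with `proto bird` and that the local block appears as a `blackhole` route; both are assumptions about the typical output, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting routes installed by BIRD.

# List IPv4 routes that carry the "bird" routing protocol tag.
bird_routes() {
  ip -4 route show proto bird
}

# Print "<destination> <next-hop> <device>" for each route to a remote pod block.
# Blackhole routes (assumed to be the node's own block) are skipped.
bird_route_summary() {
  bird_routes | awk '
    $1 != "blackhole" {
      via = "-"; dev = "-"
      for (i = 2; i < NF; i++) {
        if ($i == "via") via = $(i + 1)
        if ($i == "dev") dev = $(i + 1)
      }
      print $1, via, dev
    }'
}
```

Usage: run `bird_route_summary` on any node after the calico-node pods are running.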

Checking the Calico components

# Check the version

## kdd means the Kubernetes API is used as the datastore

calicoctl version

# Check Calico-related resources

kubectl get daemonset -n kube-system

kubectl get pod -n kube-system -l k8s-app=calico-node -owide

kubectl get deploy -n kube-system calico-kube-controllers

kubectl get pod -n kube-system -l k8s-app=calico-kube-controllers -owide

# Check Calico IPAM info: the pod network ranges in use on nodes where pods using the Calico CNI were created

calicoctl ipam show

# A Block is the pod address range allocated to each node

calicoctl ipam show --show-blocks

calicoctl ipam show --show-borrowed

calicoctl ipam show --show-configuration

# host-local IPAM info: nodes such as k8s-m still have a podCIDR, but Calico IPAM is used instead of host-local

## Check the dedicated subnet (podCIDR) assigned to each worker node

kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}' ;echo

kubectl get node k8s-m -o json | jq '.spec.podCIDR'

# Check the CNI plugin configuration

tree /etc/cni/net.d/

cat /etc/cni/net.d/10-calico.conflist | jq

...
"datastore_type": "kubernetes",   # the Calico datastore uses the Kubernetes API
"ipam": {
  "type": "calico-ipam"           # IPAM uses Calico's own IPAM
},
...

# calicoctl node info: BGP neighbor connections established by the BIRD daemon (BGP peers connect using the node IPs)

calicoctl node status

calicoctl node checksystem

# Check ippool info: the IP range used by the cluster and the Calico mode in use

calicoctl get ippool -o wide

# Check the network ranges used by pods and services

kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

"--service-cluster-ip-range=10.200.1.0/24",

"--cluster-cidr=172.16.0.0/16",

kubectl get cm -n kube-system kubeadm-config -oyaml | grep -i subnet

podSubnet: 172.16.0.0/16

serviceSubnet: 10.200.1.0/24

# Calico endpoint (pod) info: WORKLOAD is the pod name; the output shows which node it runs on, its IP, and the cali interface it is attached to

calicoctl get workloadEndpoint

calicoctl get workloadEndpoint -A

calicoctl get workloadEndpoint -o wide -A

# Check the containers (processes) on the node as a process tree

ps axf

 4405 ?  Sl  0:09 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id dd532e5efaad436ebe7d10cdd3bb2ffe5a2873a0604ce3b
 4425 ?  Ss  0:00  \_ /pause
 4740 ?  Ss  0:00  \_ /usr/local/bin/runsvdir -P /etc/service/enabled
 4811 ?  Ss  0:00      \_ runsv allocate-tunnel-addrs
 4819 ?  Sl  0:00      |   \_ calico-node -allocate-tunnel-addrs
 4812 ?  Ss  0:00      \_ runsv bird
 4994 ?  S   0:00      |   \_ bird -R -s /var/run/calico/bird.ctl -d -c /etc/calico/confd/config/bird.cfg
 4813 ?  Ss  0:00      \_ runsv cni
 4822 ?  Sl  0:00      |   \_ calico-node -monitor-token
 4814 ?  Ss  0:00      \_ runsv bird6
 4993 ?  S   0:00      |   \_ bird6 -R -s /var/run/calico/bird6.ctl -d -c /etc/calico/confd/config/bird6.cfg
 4815 ?  Ss  0:00      \_ runsv confd
 4820 ?  Sl  0:00      |   \_ calico-node -confd
 4816 ?  Ss  0:00      \_ runsv felix
 4824 ?  Sl  0:54      |   \_ calico-node -felix
 4817 ?  Ss  0:00      \_ runsv node-status-reporter
 4823 ?  Sl  0:00      |   \_ calico-node -status-reporter
 4818 ?  Ss  0:00      \_ runsv monitor-addresses
 4825 ?  Sl  0:00          \_ calico-node -monitor-addresses
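The process tree shows that a single calico-node container runs several services, each supervised by its own `runsv` process. A small sketch that extracts just the service names from `ps` output; the function name `list_runsv_services` is hypothetical.

```shell
#!/usr/bin/env bash
# Read `ps` output on stdin and print the service name that follows each "runsv".
# The "runsvdir" supervisor line is not matched because the field must equal "runsv".
list_runsv_services() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "runsv") { print $(i + 1); break }
    }
  }' | LC_ALL=C sort
}
```

Usage: `ps axf | list_runsv_services`. For the tree above this would list the eight services, including bird, confd, and felix.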

- Felix: manages the host's network interfaces, route table, and iptables

# Check the iptables rules that Calico Felix has configured

iptables -t filter -S | grep cali

...

iptables -t nat -S | grep cali

...
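The `grep cali` output above can be long. A minimal sketch that summarizes it by counting the chains Felix created and the rules appended to them; it reads `iptables -S` output on stdin, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that summarize Calico's iptables chains.

# Print the names of chains declared with "-N cali-...".
list_cali_chains() {
  awk '$1 == "-N" && $2 ~ /^cali-/ { print $2 }'
}

# Count Calico chains and the rules appended to those chains.
cali_summary() {
  awk '
    $1 == "-N" && $2 ~ /^cali-/ { chains++ }
    $1 == "-A" && $2 ~ /^cali-/ { rules++ }
    END { printf "chains=%d rules=%d\n", chains, rules }'
}
```

Usage: `iptables -t filter -S | cali_summary` and `iptables -t nat -S | list_cali_chains`.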

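- BIRD: the daemon that runs the routing protocol; it advertises this node's pod network range and receives the ranges of the other nodes

Inspecting calico-node in detail

- calico-node provides a separate CLI tool for the BIRD daemon → birdcl

# Check the calico-node container

crictl ps | grep calico

# Check the bird process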

ps -ef | grep bird.cfg

root@k8s-w1:~# ps -ef | grep bird.cfg

root 3094 2950 0 03:30 ? 00:00:01 bird -R -s /var/run/calico/bird.ctl -d -c /etc/calico/confd/config/bird.cfg

root 131712 55657 0 06:37 pts/0 00:00:00 grep --color=auto bird.cfg

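# Check the bird.cfg file (the snapshot directory number in the path below differs per node; use the path that find prints)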

find / -name bird.cfg

cat /var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/61/fs/etc/calico/confd/config/bird.cfg
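Since calico-node provides birdcl, the BIRD state can also be queried through `kubectl exec` instead of reading files from the snapshot directory. The sketch below assumes the container is named `calico-node` and reuses the control socket path seen in the bird process arguments (`/var/run/calico/bird.ctl`); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for running birdcl inside a calico-node pod.

# Print the name of the calico-node pod running on the given node.
calico_node_pod() {
  kubectl get pod -n kube-system -l k8s-app=calico-node \
    --field-selector "spec.nodeName=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}

# Run a birdcl command in that pod, e.g. "show protocols" or "show route".
birdcl_on_node() {
  local node="$1"; shift
  local pod
  pod="$(calico_node_pod "$node")" || return 1
  kubectl exec -n kube-system "$pod" -c calico-node -- \
    birdcl -s /var/run/calico/bird.ctl "$@"
}
```

Usage: `birdcl_on_node k8s-w1 show protocols` to list the BGP sessions, or `birdcl_on_node k8s-w1 show route` to list the routes BIRD knows about.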

References

Calico Docs - About , Product , Training , Install ( Requirements , EKS , On-premises , Helm , hard way , VPP dataplane …) , FAQ
